TRI Author: Adrien Gaidon

All Authors: Cesar Roberto de Souza, Adrien Gaidon, Yohann Cabon, Naila Murray, Antonio Manuel Lopez

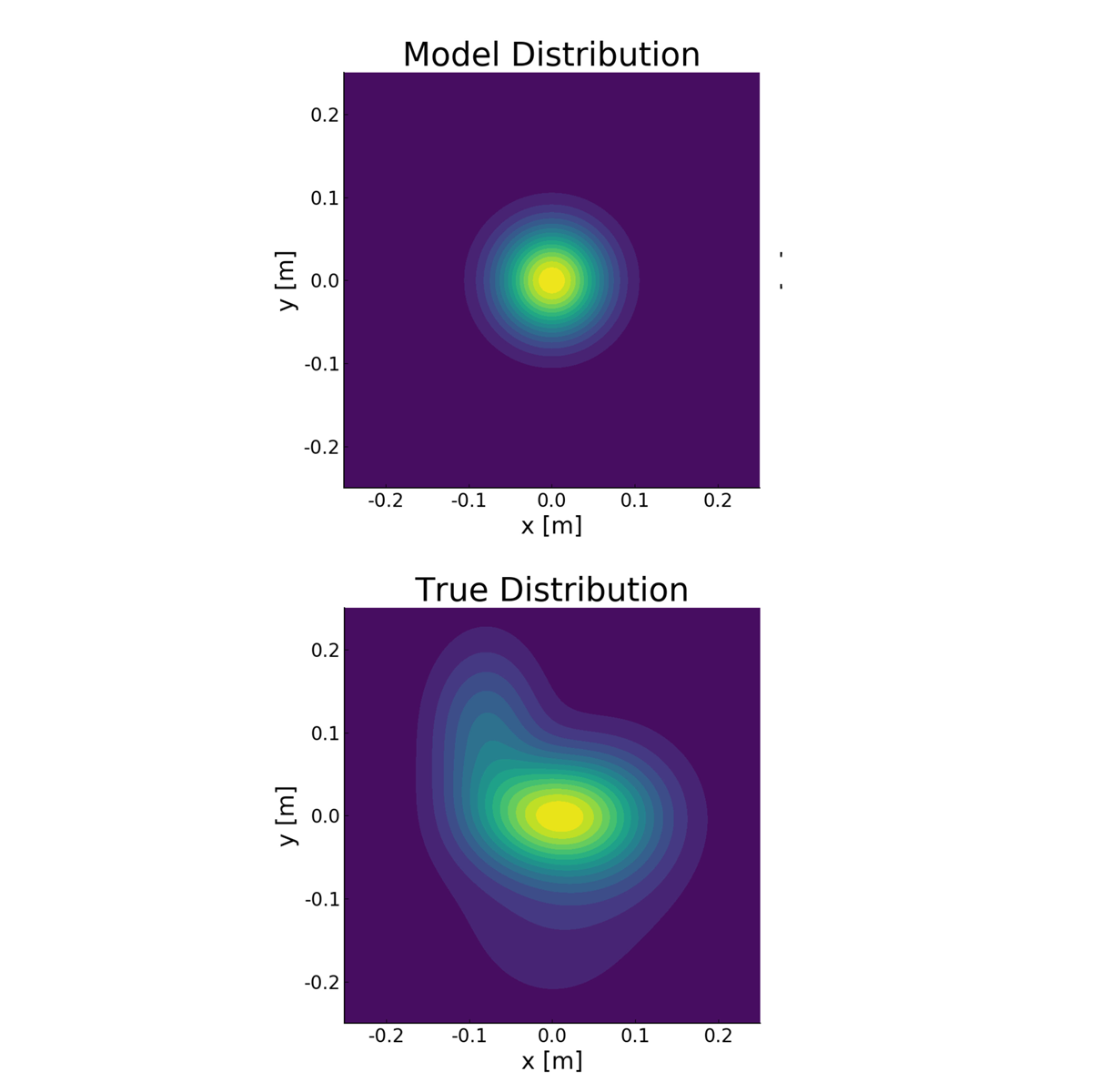

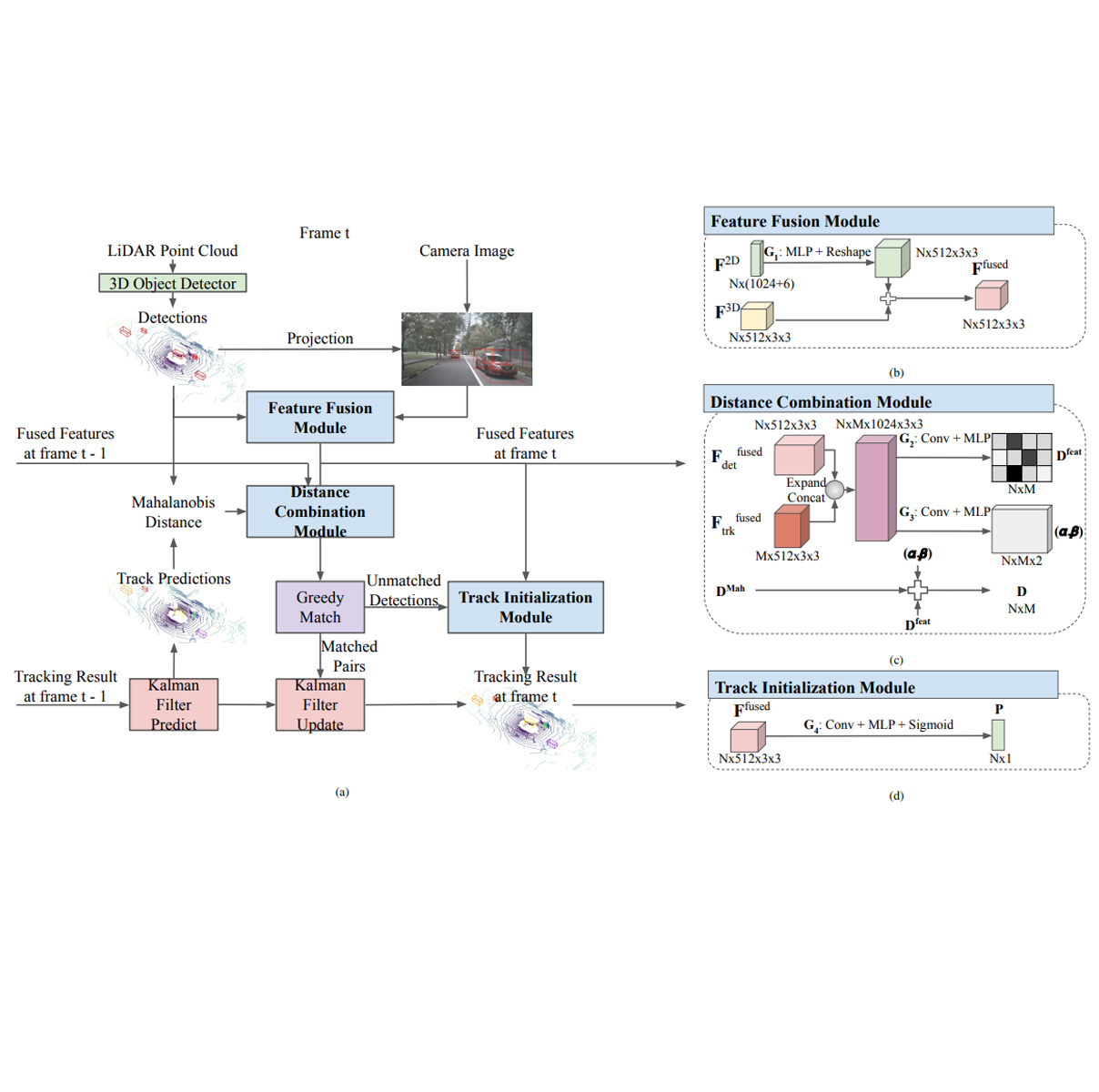

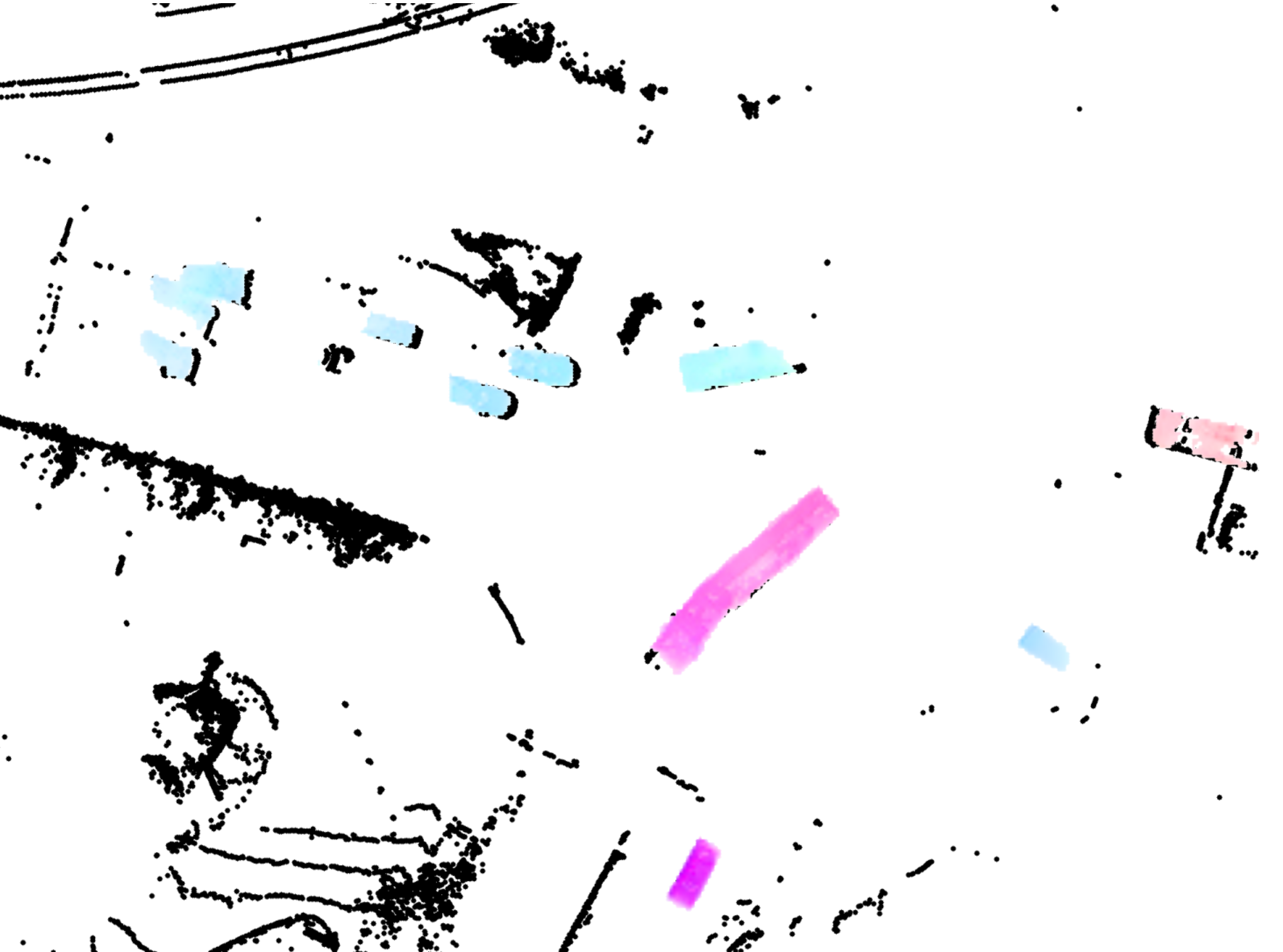

Deep video action recognition models have been highly successful in recent years but require large quantities of manually-annotated data, which are expensive and laborious to obtain. In this work, we investigate the generation of synthetic training data for video action recognition, as synthetic data have been successfully used to supervise models for a variety of other computer vision tasks. We propose an interpretable parametric generative model of human action videos that relies on procedural generation, physics models and other components of modern game engines. With this model we generate a diverse, realistic, and physically plausible dataset of human action videos, called PHAV for “Procedural Human Action Videos”. PHAV contains a total of 39,982 videos, with more than 1000 examples for each of 35 action categories. Our video generation approach is not limited to existing motion capture sequences: 14 of these 35 categories are procedurally-defined synthetic actions. In addition, each video is represented with 6 different data modalities, including RGB, optical flow and pixel-level semantic labels. These modalities are generated almost simultaneously using the Multiple Render Targets feature of modern GPUs. In order to leverage PHAV, we introduce a deep multi-task (i.e. that considers action classes from multiple datasets) representation learning architecture that is able to simultaneously learn from synthetic and real video datasets, even when their action categories differ. Our experiments on the UCF-101 and HMDB-51 benchmarks suggest that combining our large set of synthetic videos with small real-world datasets can boost recognition performance. Our approach also significantly outperforms video representations produced by fine-tuning state-of-the-art unsupervised generative models of videos. Read More

Citation: de Souza, César Roberto, Adrien Gaidon, Yohann Cabon, Naila Murray, and Antonio Manuel López. "Generating Human Action Videos by Coupling 3D Game Engines and Probabilistic Graphical Models." International Journal of Computer Vision (2019): 1-32.