TRI Authors: Adrien Gaidon, Rares Ambrus, Guy Rosman, Wolfram Burgard.

All Authors: Andreas Buhler, Adrien Gaidon, Andrei Cramariuc, Rares Ambrus, Guy Rosman, Wolfram Burgard.

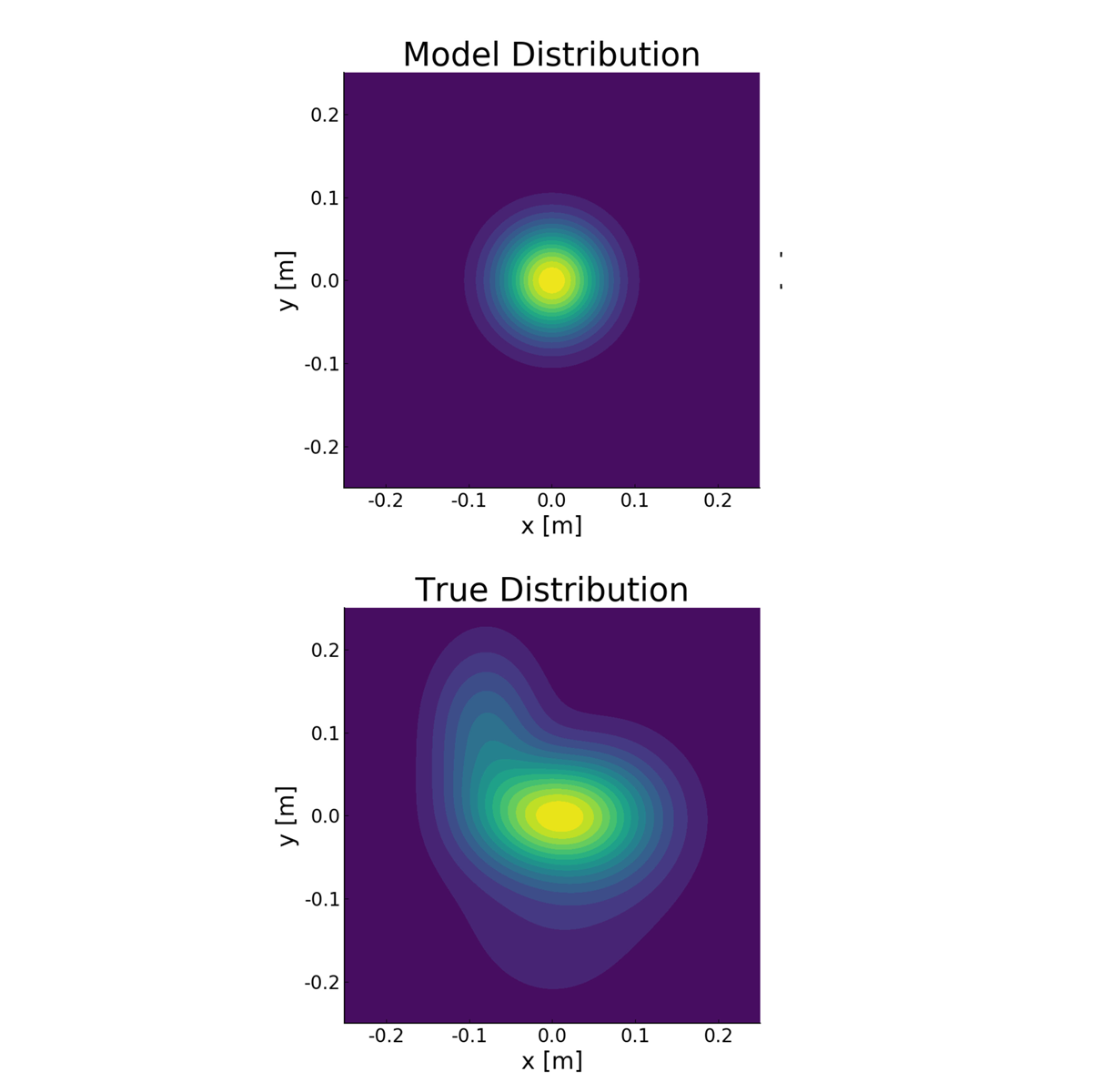

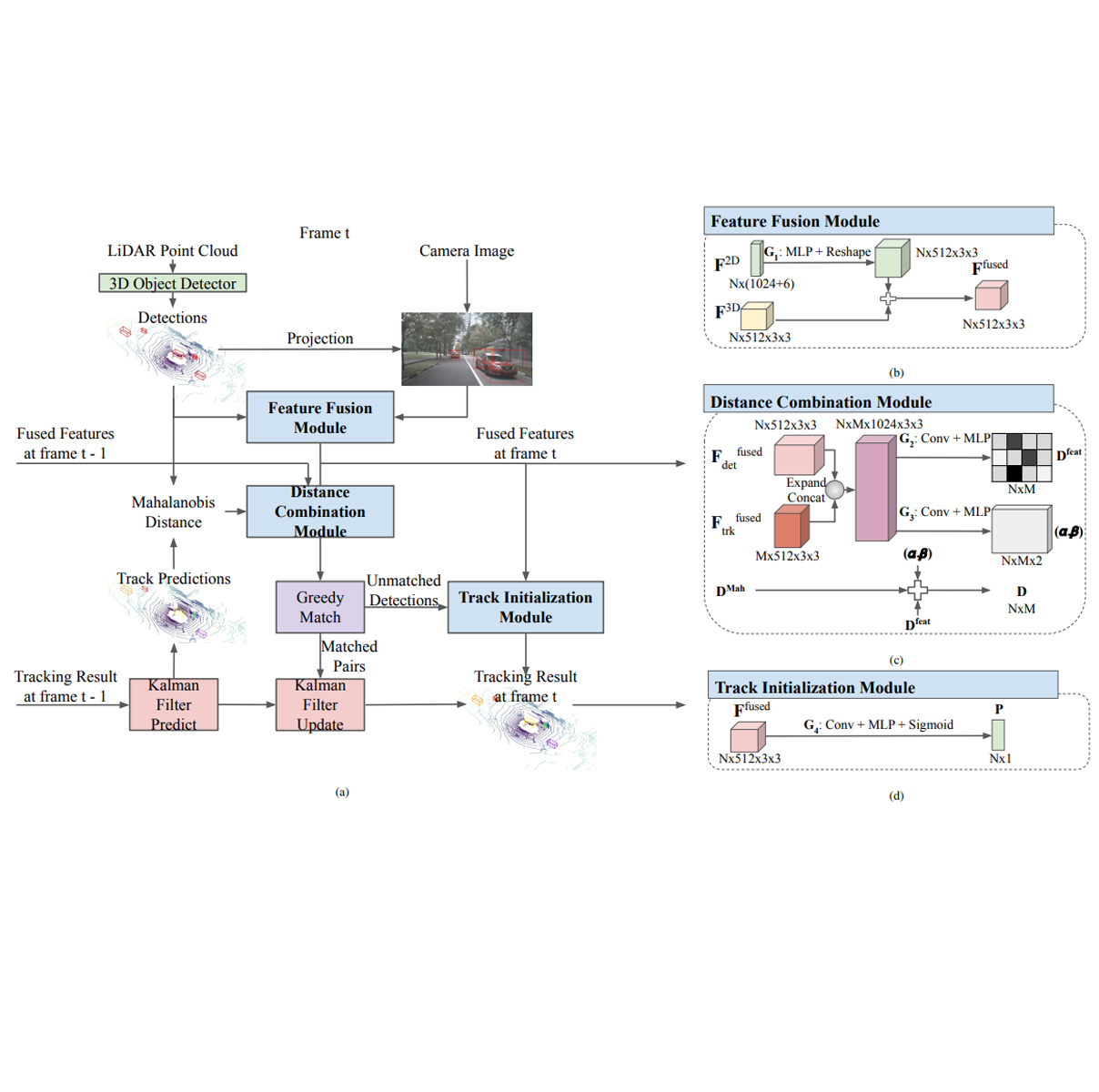

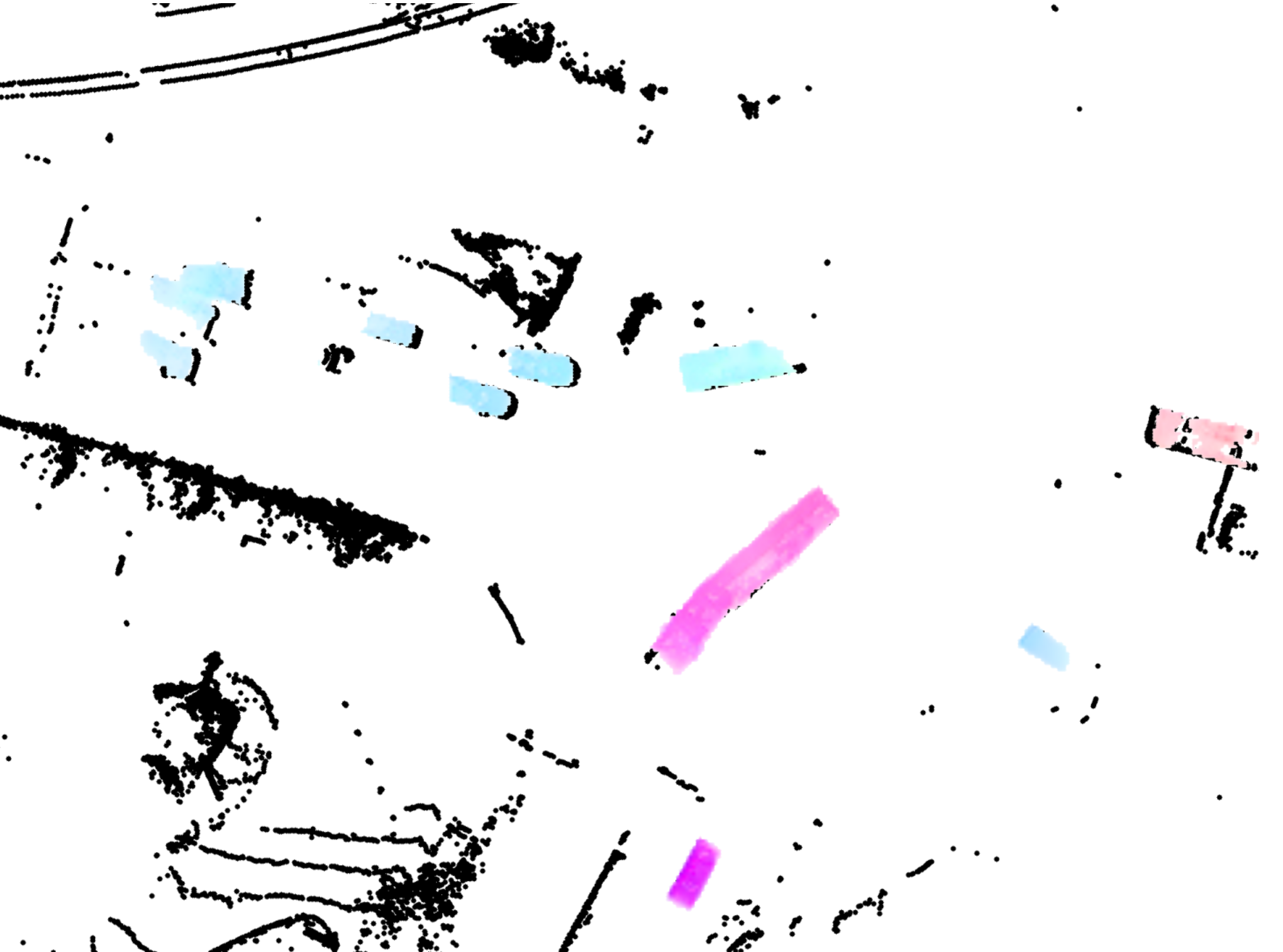

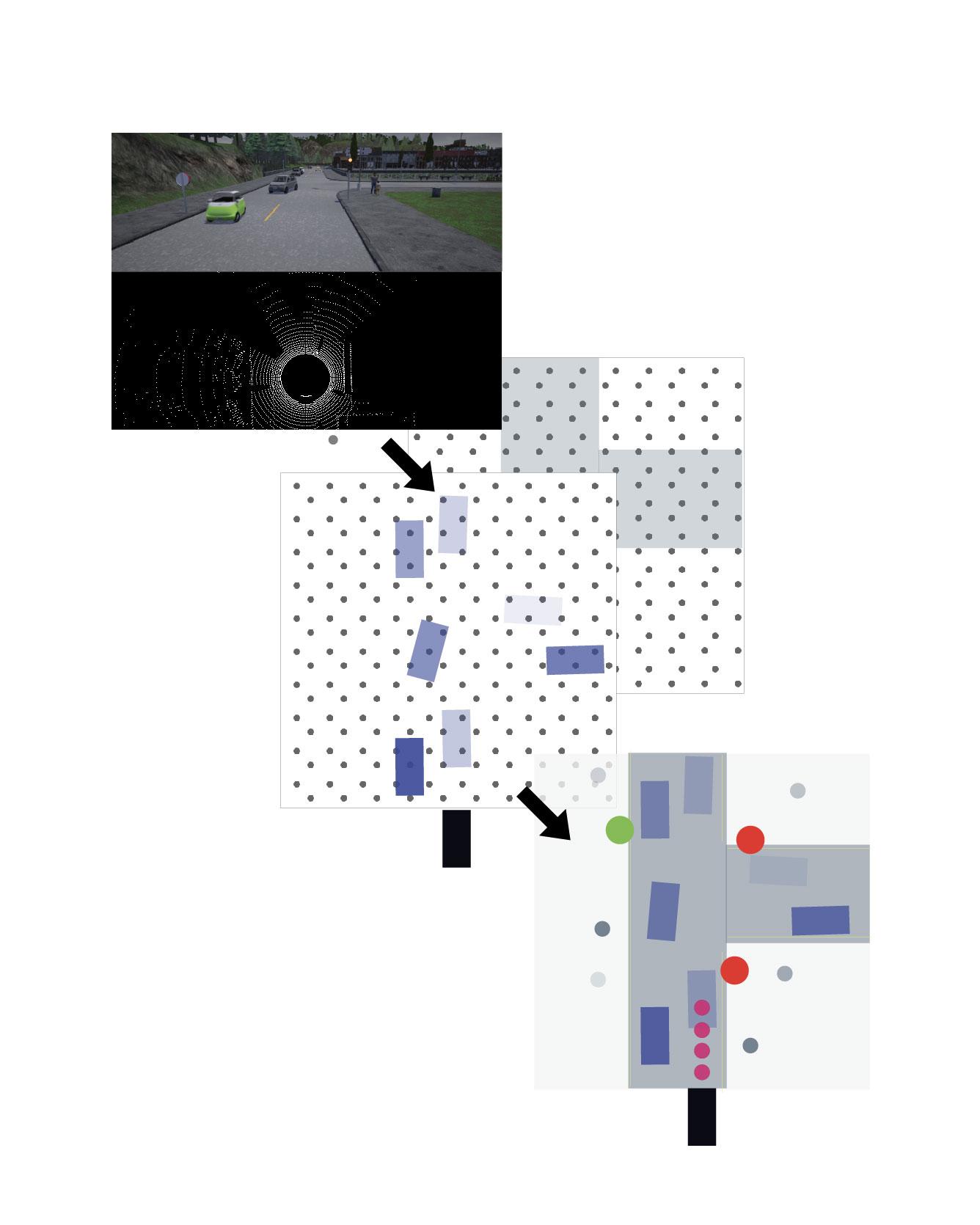

Safe autonomous driving requires robust detection of other traffic participants. However, robust does not mean perfect, and safe systems typically minimize missed detections at the expense of a higher false positive rate. This results in conservative and yet potentially dangerous behavior such as avoiding imaginary obstacles. In the context of behavioral cloning, perceptual errors at training time can lead to learning difficulties or wrong policies, as expert demonstrations might be inconsistent with the perceived world state. In this work, we propose a behavioral cloning approach that can safely leverage imperfect perception without being conservative. Our core contribution is a novel representation of perceptual uncertainty for learning to plan. We propose a new probabilistic bird's-eye-view semantic grid to encode the noisy output of object perception systems. We then leverage expert demonstrations to learn an imitative driving policy using this probabilistic representation. Using the CARLA simulator, we show that our approach can safely overcome critical false positives that would otherwise lead to catastrophic failures or conservative behavior.

Citation: Buhler, Andreas, Adrien Gaidon, Andrei Cramariuc, Rares Ambrus, Guy Rosman, and Wolfram Burgard. "Driving Through Ghosts: Behavioral Cloning with False Positives." To appear in International Conference on Intelligent Robots and Systems (IROS) 2020.