TRI Author: Wadim Kehl

All Authors: Fabian Manhardt, Wadim Kehl, Nassir Navab, Federico Tombari

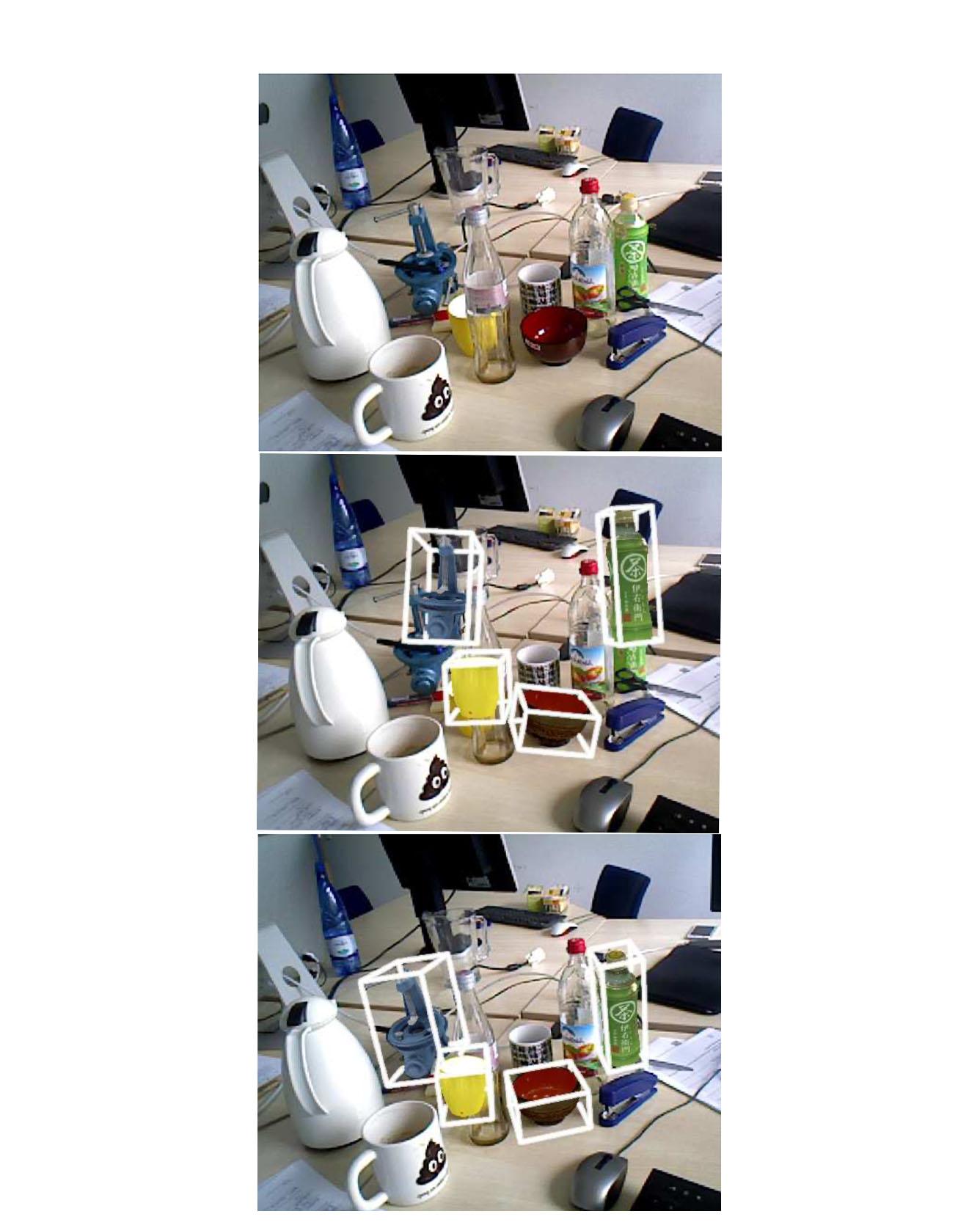

We present a novel approach for model-based 6D pose refinement in color data. Building on the established idea of contour-based pose tracking, we teach a deep neural network to predict a translational and rotational update. At the core, we propose a new visual loss that drives the pose update by aligning object contours, thus avoiding the definition of any explicit appearance model. In contrast to previous work, our method is correspondence-free and segmentation-free, can handle occlusion, and is agnostic to geometrical symmetry as well as visual ambiguities. Additionally, we observe strong robustness to rough initialization. The approach can run in real time and produces pose accuracies that come close to 3D ICP without the need for depth data. Furthermore, our networks are trained from purely synthetic data and will be published together with the refinement code to ensure reproducibility.
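To make the idea of a predicted "translational and rotational update" concrete, the following minimal sketch shows one way such an update could be applied to a pose hypothesis. The abstract does not specify the parameterization, so everything here is an assumption for illustration: the rotation is represented as a unit quaternion `(w, x, y, z)`, the rotational update is left-multiplied onto the current rotation, the translational update is added, and the function names are hypothetical rather than taken from the authors' code.

```python
import math


def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def normalize(q):
    """Scale a quaternion to unit length so it stays a valid rotation."""
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)


def apply_pose_update(rotation, translation, delta_rotation, delta_translation):
    """Apply a predicted update to a pose hypothesis (hypothetical helper).

    Assumed convention (not stated in the abstract): the rotational update
    is composed by left-multiplication and the translational update is added.
    """
    new_rotation = normalize(quat_multiply(normalize(delta_rotation), rotation))
    new_translation = tuple(t + d for t, d in zip(translation, delta_translation))
    return new_rotation, new_translation


# Example: refine an initial pose with a 90-degree rotation about the z-axis
# and a small shift along the camera axis.
half = math.sqrt(0.5)
initial_rotation = (1.0, 0.0, 0.0, 0.0)   # identity rotation
initial_translation = (0.0, 0.0, 1.0)     # one unit in front of the camera
refined_rotation, refined_translation = apply_pose_update(
    initial_rotation, initial_translation, (half, 0.0, 0.0, half), (0.0, 0.0, 0.05)
)
```

In a refinement loop, a step like this would be repeated: the object model is re-rendered at the refined pose and a new update is predicted, until the update becomes negligible.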

Citation: Manhardt, Fabian, Wadim Kehl, Nassir Navab, and Federico Tombari. "Deep Model-Based 6D Pose Refinement in RGB." In Proceedings of the European Conference on Computer Vision (ECCV), pp. 800-815. 2018.