Featured Publications

All Publications

TRI Author: Simon Stent

All Authors: Xishuai Peng, Ruirui Liu, Yi Lu Murphey, Simon Stent, Yuanxiang Li

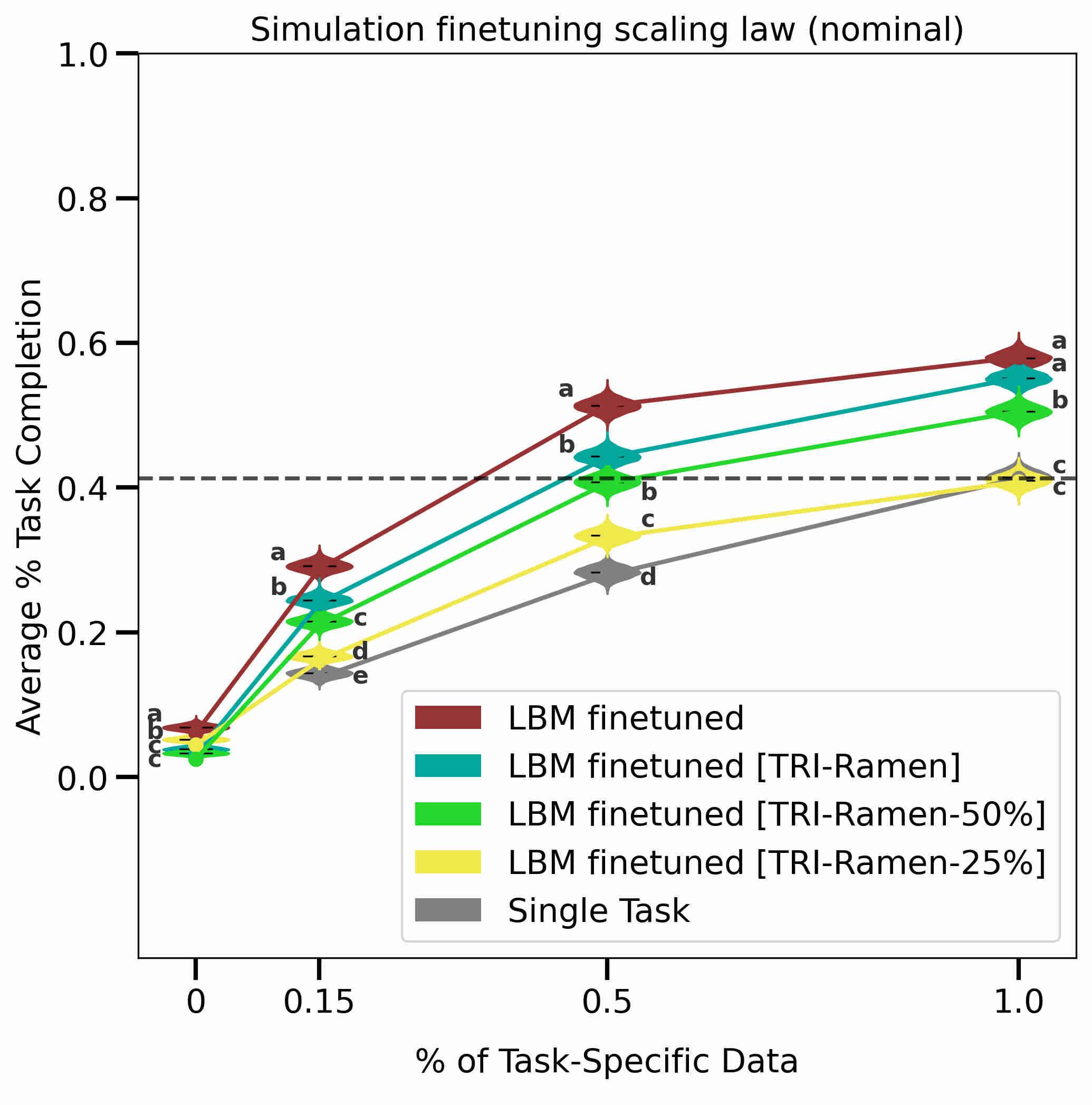

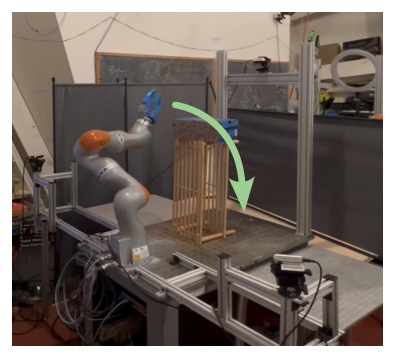

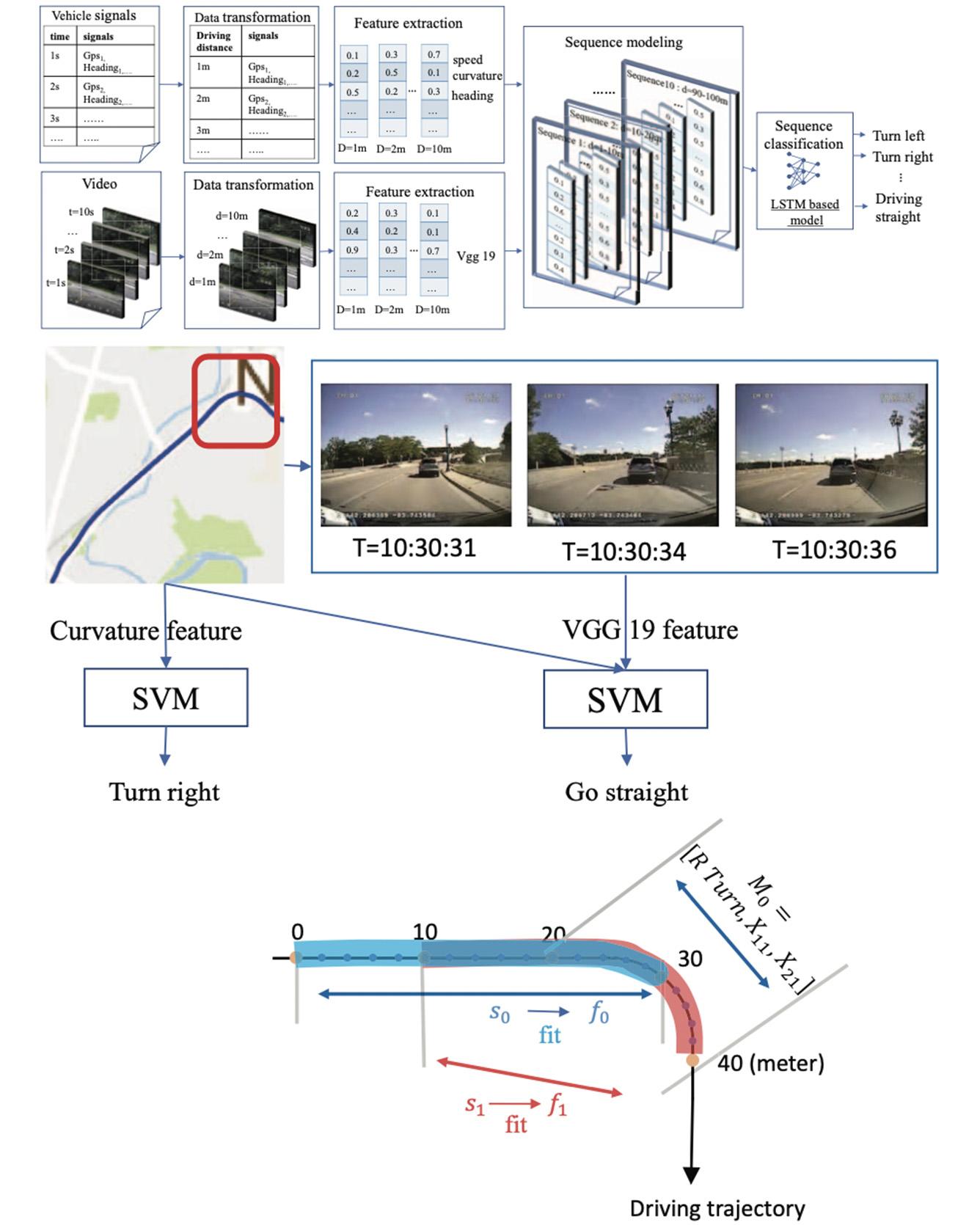

Driving maneuver detection is one of the most challenging tasks in Advanced Driver Assistance Systems (ADAS). Research has shown that early notification of improper driving maneuvers helps avoid fatalities and serious accidents. In this paper, we introduce a driver maneuvering detection (DMD) system. The DMD system contains three major computational components: a distance-based representation of driving context; combined features of vehicle trajectory and VGG-19 network features extracted from video images of the vehicle's front view; and a Long Short-Term Memory (LSTM)-based neural network model that learns sequence knowledge in driving maneuvering events. We show through experiments that the DMD system is capable of learning the latent features of five different classes of driving maneuvers and achieves significantly better performance than traditional classification methods on real-world driving trips.

Citation: Peng, Xishuai, Ruirui Liu, Yi Lu Murphey, Simon Stent, and Yuanxiang Li. "Driving maneuver detection via sequence learning from vehicle signals and video images." In 2018 24th International Conference on Pattern Recognition (ICPR), pp. 1265-1270. IEEE, 2018.

TRI Author: Hongkai Dai

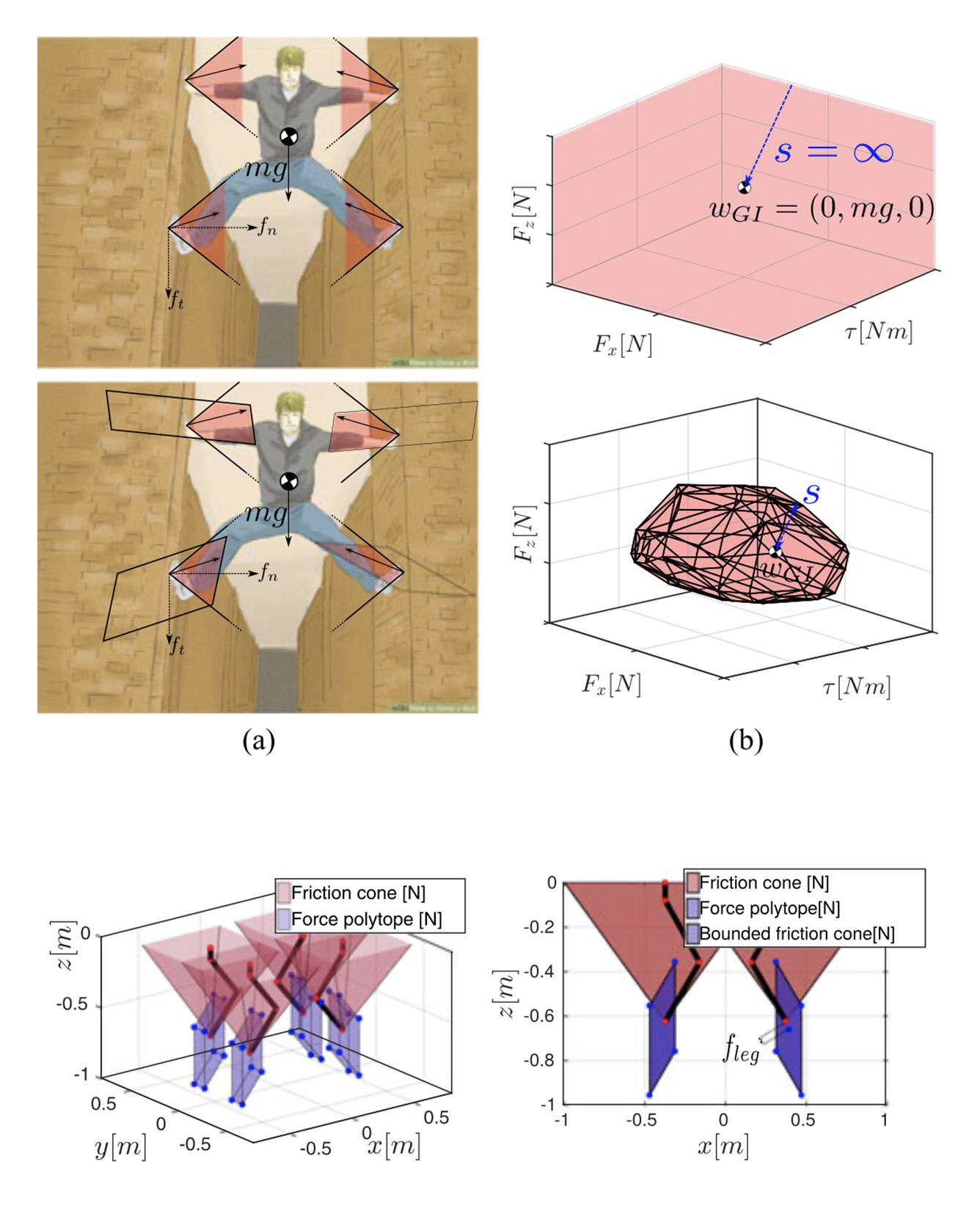

All Authors: Romeo Orsolino, Michele Focchi, Carlos Mastalli, Hongkai Dai, Darwin G. Caldwell, Claudio Semini

Motion planning in multicontact scenarios has recently gathered interest within the legged robotics community; however, actuator force/torque limits are rarely considered. We believe that these limits gain paramount importance when the complexity of the terrains to be traversed increases. We build on previous research from the field of robotic grasping to propose two new six-dimensional bounded polytopes named the Actuation Wrench Polytope (AWP) and the Feasible Wrench Polytope (FWP). We define the AWP as the set of all the wrenches that a robot can generate while considering its actuation limits. This considers the admissible contact forces that the robot can generate given its current configuration and actuation capabilities. The Contact Wrench Cone (CWC) instead includes features of the environment such as the contact normal or the friction coefficient. The intersection of the AWP and the CWC results in a convex polytope, the FWP, which turns out to be more descriptive of the real robot capabilities than existing simplified models, while maintaining the same compact representation. We explain how to efficiently compute the vertex-description of the FWP, which is then used to evaluate a feasibility factor that we adapted from the field of robotic grasping. This allows us to optimize for robustness to external disturbance wrenches. Based on this, we present an implementation of a motion planner for our quadruped robot HyQ that provides online Center of Mass trajectories that are guaranteed to be statically stable and actuation-consistent.

Citation: Orsolino, Romeo, Michele Focchi, Carlos Mastalli, Hongkai Dai, Darwin G. Caldwell, and Claudio Semini. "Application of wrench-based feasibility analysis to the online trajectory optimization of legged robots." IEEE Robotics and Automation Letters 3, no. 4 (2018): 3363-3370.

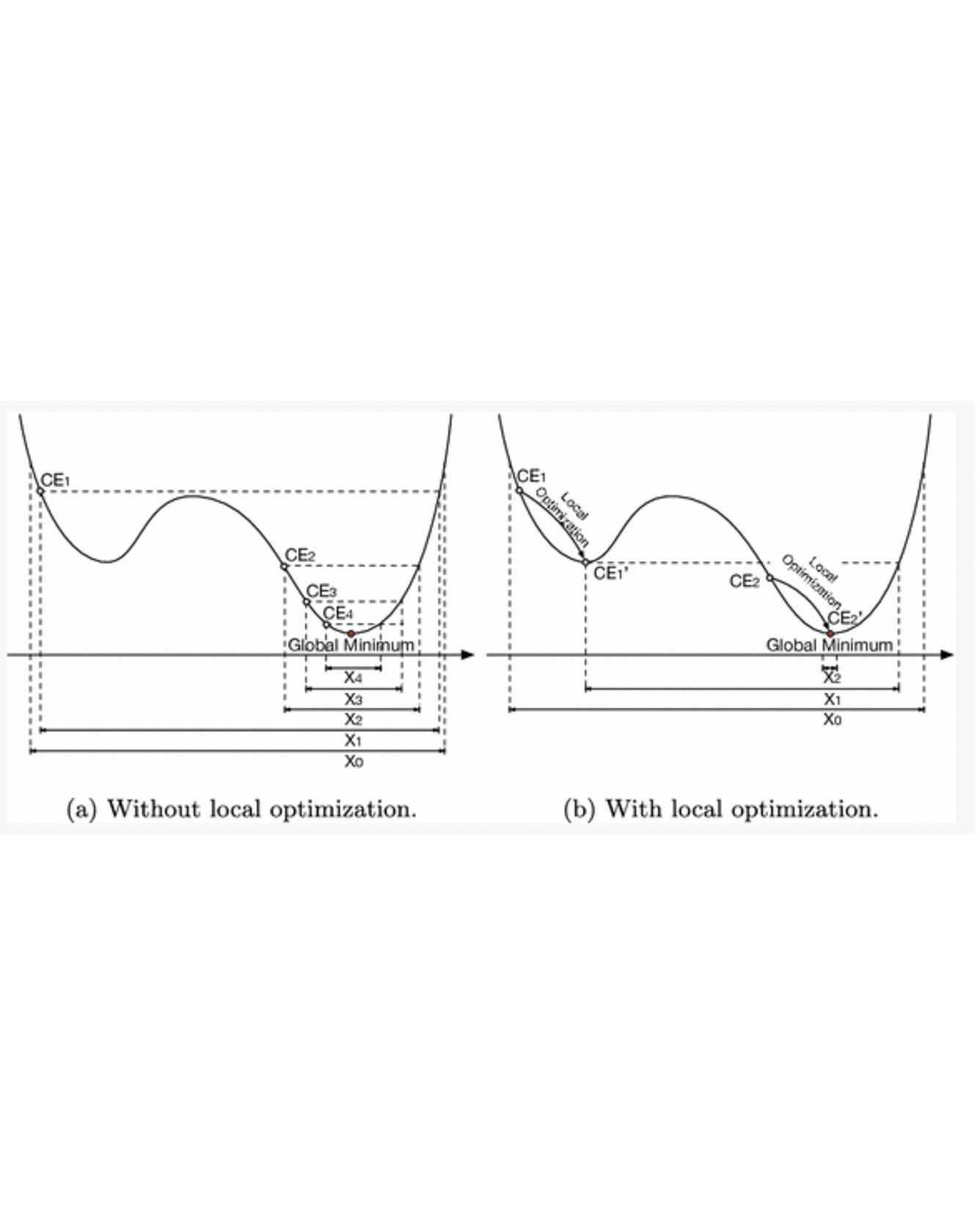

TRI Author: Soonho Kong

All Authors: Soonho Kong, Armando Solar-Lezama, Sicun Gao

We propose δ-complete decision procedures for solving satisfiability of nonlinear SMT problems over real numbers that contain universal quantification and a wide range of nonlinear functions. The methods combine interval constraint propagation, counterexample-guided synthesis, and numerical optimization. In particular, we show how to handle the interleaving of numerical and symbolic computation to ensure δ-completeness in quantified reasoning. We demonstrate that the proposed algorithms can handle various challenging global optimization and control synthesis problems that are beyond the reach of existing solvers.

Citation: Kong, Soonho, Armando Solar-Lezama, and Sicun Gao. "Delta-decision procedures for exists-forall problems over the reals." In International Conference on Computer Aided Verification, pp. 219-235. Springer, Cham, 2018.
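As a rough illustration of the δ-weakening idea behind δ-complete procedures, the sketch below decides a purely existential toy formula, ∃x ∈ [lo, hi]. x² − 2 = 0, by interval bisection. It is not the exists-forall algorithm from the paper: the formula, the function names `square_interval` and `delta_decide`, and the plain floating-point interval arithmetic (no outward rounding) are all assumptions made for this example. The procedure answers `unsat` when interval evaluation excludes every box, and `delta-sat` when it finds a box on which the relaxed constraint |x² − 2| ≤ δ holds everywhere.

```python
def square_interval(lo, hi):
    """Interval enclosure of x*x for x in [lo, hi]."""
    if lo >= 0:
        return lo * lo, hi * hi
    if hi <= 0:
        return hi * hi, lo * lo
    return 0.0, max(lo * lo, hi * hi)


def delta_decide(lo, hi, delta):
    """Toy delta-decision for: exists x in [lo, hi] with x*x - 2 == 0.

    Returns ("unsat", None) if interval evaluation rules out every box,
    or ("delta-sat", (a, b)) if |x*x - 2| <= delta on the whole box [a, b].
    Illustrative only: rounding errors are ignored.
    """
    stack = [(lo, hi)]
    while stack:
        a, b = stack.pop()
        sq_lo, sq_hi = square_interval(a, b)
        f_lo, f_hi = sq_lo - 2.0, sq_hi - 2.0
        if f_lo > 0 or f_hi < 0:
            continue  # zero is not in the enclosure: prune this box
        if max(abs(f_lo), abs(f_hi)) <= delta:
            return "delta-sat", (a, b)  # relaxed formula holds on the box
        mid = (a + b) / 2.0
        stack.append((a, mid))
        stack.append((mid, b))
    return "unsat", None


status, box = delta_decide(0.0, 2.0, 1e-3)
print(status, box)
print(delta_decide(2.0, 3.0, 1e-3))
```

A `delta-sat` answer only guarantees that the δ-relaxed formula is satisfiable, which is the trade-off that makes such procedures terminate on nonlinear real constraints.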

TRI Author: Adrien Gaidon

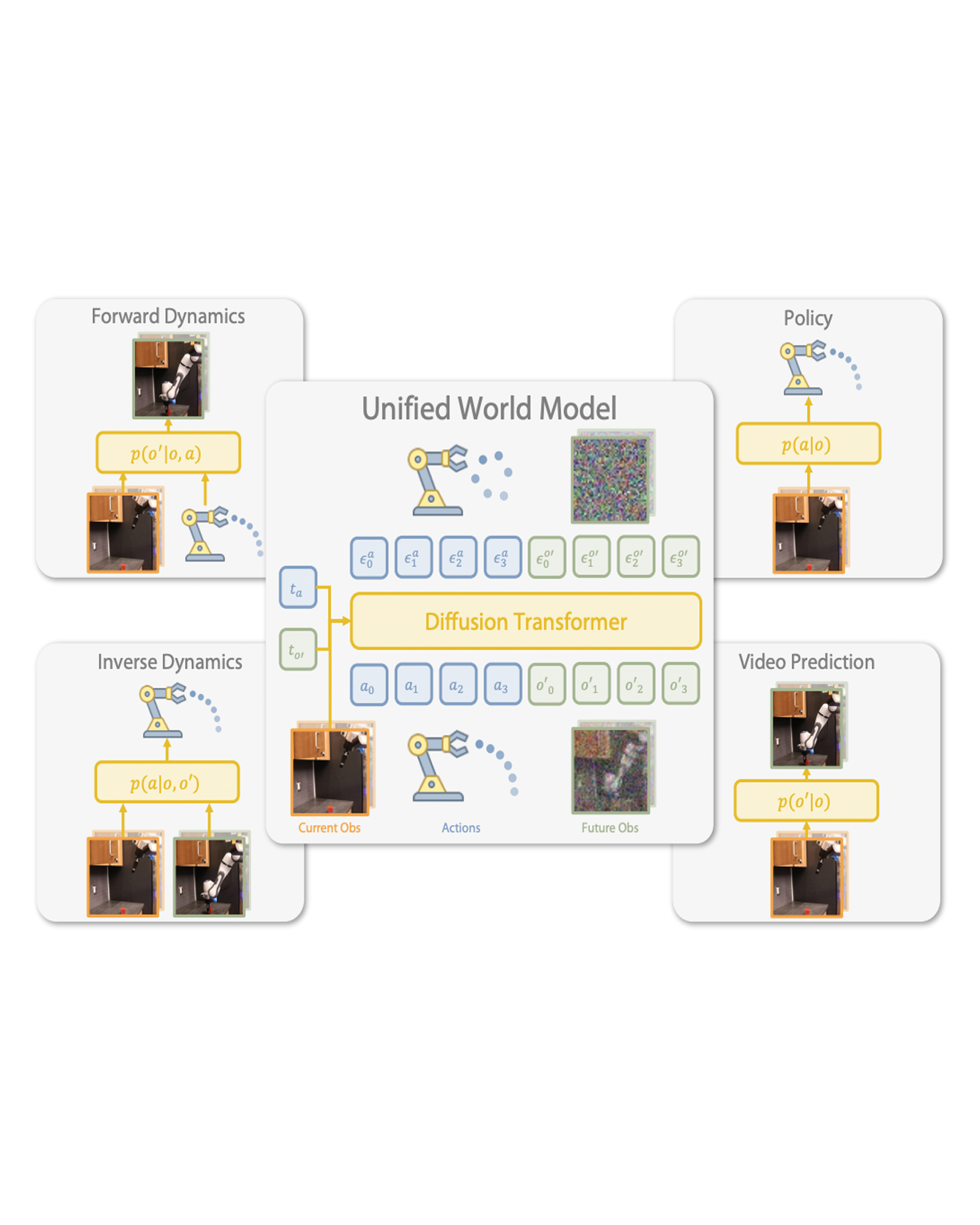

All Authors: Adrien Gaidon, Antonio Lopez, Florent Perronnin

The recent successes in many visual recognition tasks, such as image classification, object detection, and semantic segmentation can be attributed in large part to three factors: (i) advances in end-to-end trainable deep learning models (LeCun 2015), (ii) the progress of computing hardware, and (iii) the introduction of increasingly large labeled datasets such as PASCAL VOC (Everingham et al. 2010), KITTI (Geiger et al. 2012), ImageNet (Russakovsky et al. 2015), MS-COCO (Lin et al. 2014), and Cityscapes (Cordts et al. 2016), among others. In fact, recent results (Sun et al. 2017; Hestness et al. 2017) indicate that the reliability of current visual models might not be limited by the algorithms themselves but by the type and amount of supervised data available. Therefore, to tackle more challenging tasks, such as video scene understanding, progress is needed not only on the algorithmic and hardware fronts but also on the data front, both for learning and quantitative evaluation. However, acquiring and densely labeling a large visual dataset with ground truth information (e.g. semantic labels, depth, optical flow) for each new problem is not a scalable alternative.

Citation: Gaidon, Adrien, Antonio Lopez, and Florent Perronnin. "The reasonable effectiveness of synthetic visual data." International Journal of Computer Vision 126, no. 9 (2018): 899-901.

TRI Author: Hongkai Dai

All Authors: Bernardo Aceituno-Cabezas, Carlos Mastalli, Hongkai Dai, Michele Focchi, Andreea Radulescu, Darwin G. Caldwell, Jose Cappelletto, Juan C. Grieco, Gerardo Fernandez-Lopez, Claudio Semini

Traditional motion planning approaches for multilegged locomotion divide the problem into several stages, such as contact search and trajectory generation. However, reasoning about contacts and motions simultaneously is crucial for the generation of complex whole-body behaviors. Currently, coupling these problems has required either the assumption of a fixed gait sequence and flat terrain condition, or nonconvex optimization with intractable computation time. In this letter, we propose a mixed-integer convex formulation to simultaneously plan contact locations, gait transitions, and motion in a computationally efficient fashion. In contrast to previous works, our approach is not limited to flat terrain nor to a prespecified gait sequence. Instead, we incorporate the friction cone stability margin, approximate the robot's torque limits, and plan the gait using mixed-integer convex constraints. We experimentally validated our approach on the HyQ robot by traversing different challenging terrains, where nonconvexity and flat terrain assumptions might lead to suboptimal or unstable plans. Our method increases the motion robustness while keeping a low computation time.

Citation: Aceituno-Cabezas, Bernardo, Carlos Mastalli, Hongkai Dai, Michele Focchi, Andreea Radulescu, Darwin G. Caldwell, José Cappelletto, Juan C. Grieco, Gerardo Fernández-López, and Claudio Semini. "Simultaneous contact, gait, and motion planning for robust multilegged locomotion via mixed-integer convex optimization." IEEE Robotics and Automation Letters 3, no. 3 (2017): 2531-2538.

TRI Authors: Kuan-Hui Lee, Yusuke Kanzawa, Matthew Derry

All Authors: Kuan-Hui Lee, Yusuke Kanzawa, Matthew Derry, and Michael R. James

This paper proposes the Permutation Matrix Track Association (PMTA) algorithm to support track-to-track, multi-sensor data fusion for multiple targets in an autonomous driving system. In this system, measurement data from different sensor modalities (LIDAR, radar, and vision) is processed by object trackers operating on each sensor modality independently to create the tracks of the objects. The proposed approach fuses the object track lists from each tracker, first by associating the tracks within each track list, followed by a state estimation (filtering) step. The eventual output is the unified tracks of the objects provided for further autonomous driving processing, such as path and motion planning. The PMTA algorithm considers both spatial and temporal information to associate object tracks from different sensor modalities. Experimental results show that the proposed approach improves not only the performance of the multiple-target track-to-track fusion, but also stability and robustness in the resulting speed control and decision making in the autonomous driving system.

Citation: Lee, Kuan-Hui, Yusuke Kanzawa, Matthew Derry, and Michael R. James. "Multi-target track-to-track fusion based on permutation matrix track association." In 2018 IEEE Intelligent Vehicles Symposium (IV), pp. 465-470. IEEE, 2018.
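To make the notion of associating tracks through a permutation matrix concrete, here is a minimal sketch. It is not the PMTA algorithm itself: it assumes two trackers report the same number of objects, represents each track as a short list of (x, y) positions sampled at common time steps, and searches all permutations by brute force for the one with the lowest summed position distance. The names `track_distance`, `associate_tracks`, and `permutation_matrix`, and the sample data, are hypothetical.

```python
from itertools import permutations
from math import hypot


def track_distance(track_a, track_b):
    """Sum of point-to-point distances over time-aligned (x, y) samples."""
    return sum(hypot(xa - xb, ya - yb)
               for (xa, ya), (xb, yb) in zip(track_a, track_b))


def associate_tracks(tracks_a, tracks_b):
    """Return the permutation p minimizing total distance, where
    tracks_a[i] is matched with tracks_b[p[i]]. Brute force, so only
    suitable for a handful of tracks."""
    n = len(tracks_a)
    if n != len(tracks_b):
        raise ValueError("this sketch assumes equally sized track lists")
    return min(
        permutations(range(n)),
        key=lambda p: sum(track_distance(tracks_a[i], tracks_b[p[i]])
                          for i in range(n)),
    )


def permutation_matrix(perm):
    """Matrix P with P[i][j] = 1 exactly when perm[i] == j."""
    n = len(perm)
    return [[1 if perm[i] == j else 0 for j in range(n)] for i in range(n)]


# Hypothetical tracks from two sensors: same objects, different order, small noise.
lidar_tracks = [
    [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],
    [(0.0, 5.0), (1.0, 5.0), (2.0, 5.0)],
    [(10.0, 0.0), (10.0, 1.0), (10.0, 2.0)],
]
radar_tracks = [
    [(10.1, 0.0), (10.1, 1.1), (9.9, 2.0)],
    [(0.1, 0.0), (1.1, 0.1), (2.0, 0.1)],
    [(0.0, 5.2), (1.0, 4.9), (2.1, 5.0)],
]

best = associate_tracks(lidar_tracks, radar_tracks)
print(best)                      # (1, 2, 0)
print(permutation_matrix(best))  # [[0, 1, 0], [0, 0, 1], [1, 0, 0]]
```

Comparing whole position sequences rather than single points is one simple way to use both spatial and temporal information; a practical system would also need to handle unequal track counts and missed detections.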

TRI Author: Wadim Kehl

All Authors: Miroslava Slavcheva, Wadim Kehl, Nassir Navab, Slobodan Ilic

We tackle the task of dense 3D reconstruction from RGB-D data. Contrary to the majority of existing methods, we focus not only on trajectory estimation accuracy, but also on reconstruction precision. The key technique is SDF-2-SDF registration, which is a correspondence-free, symmetric, dense energy minimization method, performed via the direct voxel-wise difference between a pair of signed distance fields. It has a wider convergence basin than traditional point cloud registration and cloud-to-volume alignment techniques. Furthermore, its formulation allows for straightforward incorporation of photometric and additional geometric constraints. We employ SDF-2-SDF registration in two applications. First, we perform small-to-medium scale object reconstruction entirely on the CPU. To this end, the camera is tracked frame-to-frame in real time. Then, the initial pose estimates are refined globally in a lightweight optimization framework, which does not involve a pose graph. We combine these procedures into our second, fully real-time application for larger-scale object reconstruction and SLAM. It is implemented as a hybrid system, whereby tracking is done on the GPU, while refinement runs concurrently over batches on the CPU. To bound memory and runtime footprints, registration is done over a fixed number of limited-extent volumes, anchored at geometry-rich locations. Extensive qualitative and quantitative evaluation of both trajectory accuracy and model fidelity on several public RGB-D datasets, acquired with various quality sensors, demonstrates higher precision than related techniques.

Citation: Slavcheva, Miroslava, Wadim Kehl, Nassir Navab, and Slobodan Ilic. "Sdf-2-sdf registration for real-time 3d reconstruction from rgb-d data." International Journal of Computer Vision 126, no. 6 (2018): 615-636.
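The voxel-wise energy described in the abstract above can be illustrated with a toy 2D example. This is a simplified sketch, not the paper's implementation: it assumes an analytic signed distance field of a circle instead of fused depth data, restricts the pose to a translation along x, omits truncation and per-voxel weights, and replaces the gradient-based solver with a search over a few candidate shifts. All names and values are hypothetical.

```python
from math import hypot

RADIUS = 1.0  # radius of the toy object (assumed)


def sdf_circle(x, y, cx, cy):
    """Signed distance to a circle of radius RADIUS centred at (cx, cy)."""
    return hypot(x - cx, y - cy) - RADIUS


def sdf2sdf_energy(shift_x, object_offset_x, grid):
    """0.5 * sum over voxels of (phi_ref - phi_cur)^2.

    phi_ref is the field of the object at the origin; phi_cur is the field
    of the same object observed at object_offset_x, sampled at voxel
    positions moved by the candidate shift.
    """
    energy = 0.0
    for x, y in grid:
        phi_ref = sdf_circle(x, y, 0.0, 0.0)
        phi_cur = sdf_circle(x + shift_x, y, object_offset_x, 0.0)
        energy += 0.5 * (phi_ref - phi_cur) ** 2
    return energy


# 17 x 17 voxel centres covering [-2, 2] x [-2, 2]
grid = [(-2.0 + 0.25 * i, -2.0 + 0.25 * j)
        for i in range(17) for j in range(17)]

candidates = [k / 10 for k in range(6)]  # 0.0, 0.1, ..., 0.5
best_shift = min(candidates, key=lambda s: sdf2sdf_energy(s, 0.3, grid))
print(best_shift)
```

Because every voxel contributes to the energy, no point correspondences are needed: the candidate that aligns the two fields is simply the one with the smallest summed squared difference.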

TRI Author: Ryan M. Eustice

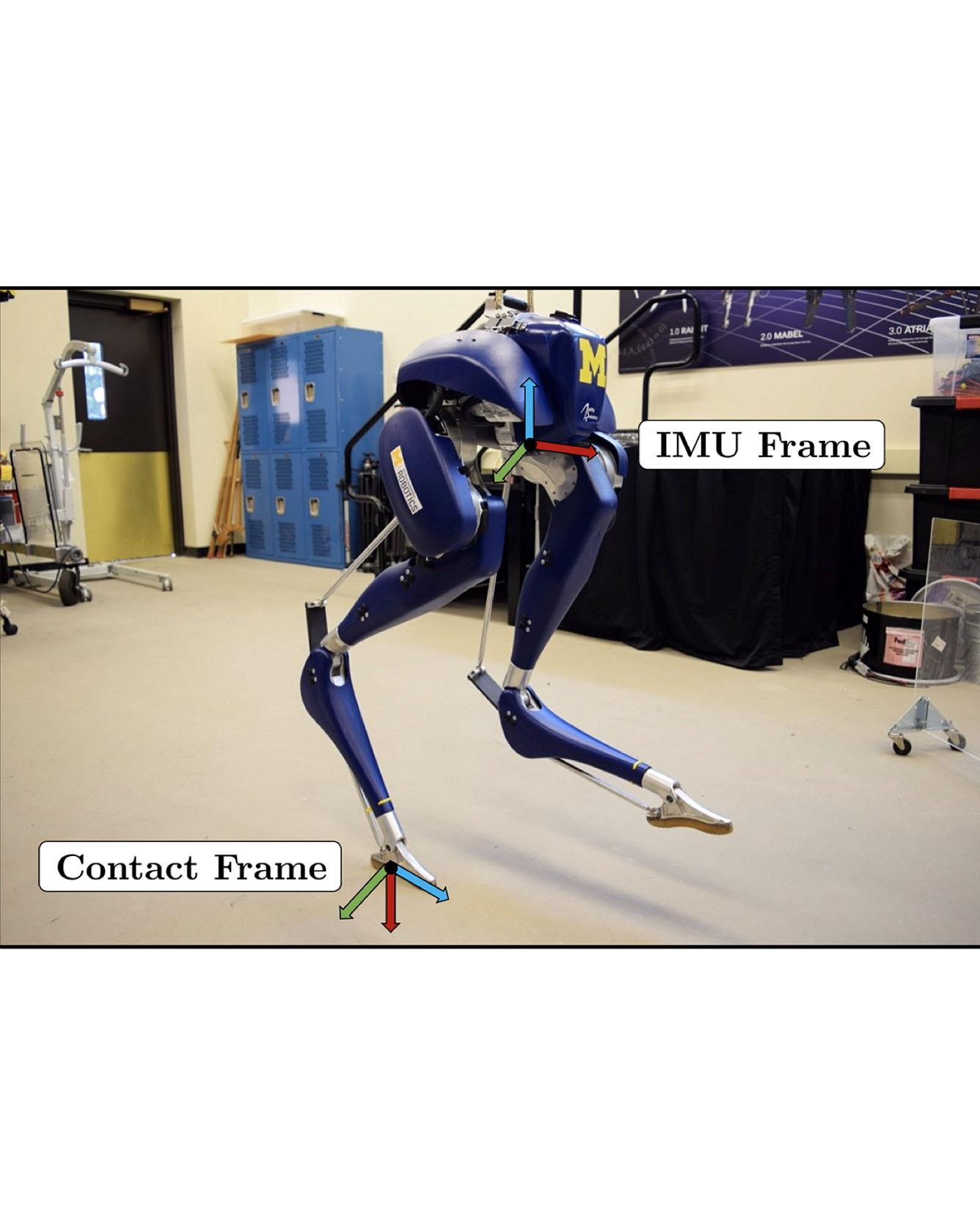

All Authors: Ross Hartley, Maani Ghaffari Jadidi, Ryan M. Eustice, Jessy W. Grizzle

This paper derives a contact-aided inertial navigation observer for a 3D bipedal robot using the theory of invariant observer design. Aided inertial navigation is fundamentally a nonlinear observer design problem; thus, current solutions are based on approximations of the system dynamics, such as an Extended Kalman Filter (EKF), which uses a system's Jacobian linearization along the current best estimate of its trajectory. On the basis of the theory of invariant observer design by Barrau and Bonnabel, and in particular, the Invariant EKF (InEKF), we show that the error dynamics of the point contact-inertial system follows a log-linear autonomous differential equation; hence, the observable state variables can be rendered convergent with a domain of attraction that is independent of the system's trajectory. Due to the log-linear form of the error dynamics, it is not necessary to perform a nonlinear observability analysis to show that when using an Inertial Measurement Unit (IMU) and contact sensors, the absolute position of the robot and a rotation about the gravity vector (yaw) are unobservable. We further augment the state of the developed InEKF with IMU biases, as the online estimation of these parameters has a crucial impact on system performance. We compare the convergence of the proposed system with that of the commonly used quaternion-based EKF observer using a Monte-Carlo simulation. In addition, our experimental evaluation using a Cassie-series bipedal robot shows that the contact-aided InEKF provides better performance in comparison with the quaternion-based EKF as a result of exploiting symmetries present in the system dynamics.

Citation: Hartley, Ross, Maani Ghaffari, Ryan M. Eustice, and Jessy W. Grizzle. "Contact-aided invariant extended Kalman filtering for robot state estimation." The International Journal of Robotics Research 39, no. 4 (2020): 402-430.

TRI Authors: Jeff Walls, Ryan Eustice

All Authors: Hartley, R., Mangelson, J., Gan, L., Ghaffari Jadidi, M., Walls, J., Eustice, R., Grizzle, J.

State-of-the-art robotic perception systems have achieved sufficiently good performance using Inertial Measurement Units (IMUs), cameras, and nonlinear optimization techniques, that they are now being deployed as technologies. However, many of these methods rely significantly on vision and often fail when visual tracking is lost due to lighting or scarcity of features. This paper presents a state-estimation technique for legged robots that takes into account the robot's kinematic model as well as its contact with the environment. We introduce forward kinematic factors and preintegrated contact factors into a factor graph framework that can be incrementally solved in real-time. The forward kinematic factor relates the robot's base pose to a contact frame through noisy encoder measurements. The preintegrated contact factor provides odometry measurements of this contact frame while accounting for possible foot slippage. Together, the two developed factors constrain the graph optimization problem allowing the robot's trajectory to be estimated. The paper evaluates the method using simulated and real sensory IMU and kinematic data from experiments with a Cassie-series robot designed by Agility Robotics. These preliminary experiments show that using the proposed method in addition to IMU decreases drift and improves localization accuracy, suggesting that its use can enable successful recovery from a loss of visual tracking.

Citation: Hartley, Ross, Josh Mangelson, Lu Gan, Maani Ghaffari Jadidi, Jeffrey M. Walls, Ryan M. Eustice, and Jessy W. Grizzle. "Legged robot state-estimation through combined forward kinematic and preintegrated contact factors." In 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 1-8. IEEE, 2018.

TRI Author: Simon Stent

All Authors: Pablo F. Alcantarilla, Simon Stent, German Ros, Roberto Arroyo, Riccardo Gherardi

We propose a system for performing structural change detection in street-view videos captured by a vehicle-mounted monocular camera over time. Our approach is motivated by the need for more frequent and efficient updates in the large-scale maps used in autonomous vehicle navigation. Our method chains a multi-sensor fusion SLAM and fast dense 3D reconstruction pipeline, which provide coarsely registered image pairs to a deep Deconvolutional Network (DN) for pixel-wise change detection. We investigate two DN architectures for change detection: the first is based on the idea of stacking contraction and expansion blocks, while the second is based on the idea of Fully Convolutional Networks. To train and evaluate our networks we introduce a new urban change detection dataset which is an order of magnitude larger than existing datasets and contains challenging changes due to seasonal and lighting variations. Our method outperforms existing literature on this dataset, which we make available to the community, and on an existing panoramic change detection dataset, demonstrating its wide applicability.

Citation: Alcantarilla, Pablo F., Simon Stent, German Ros, Roberto Arroyo, and Riccardo Gherardi. "Street-view change detection with deconvolutional networks." Autonomous Robots 42, no. 7 (2018): 1301-1322.