TRI Authors: V. Guizilini, R. Ambrus, S. Pillai, A. Raventos, A. Gaidon

All Authors: V. Guizilini, R. Ambrus, S. Pillai, A. Raventos, A. Gaidon

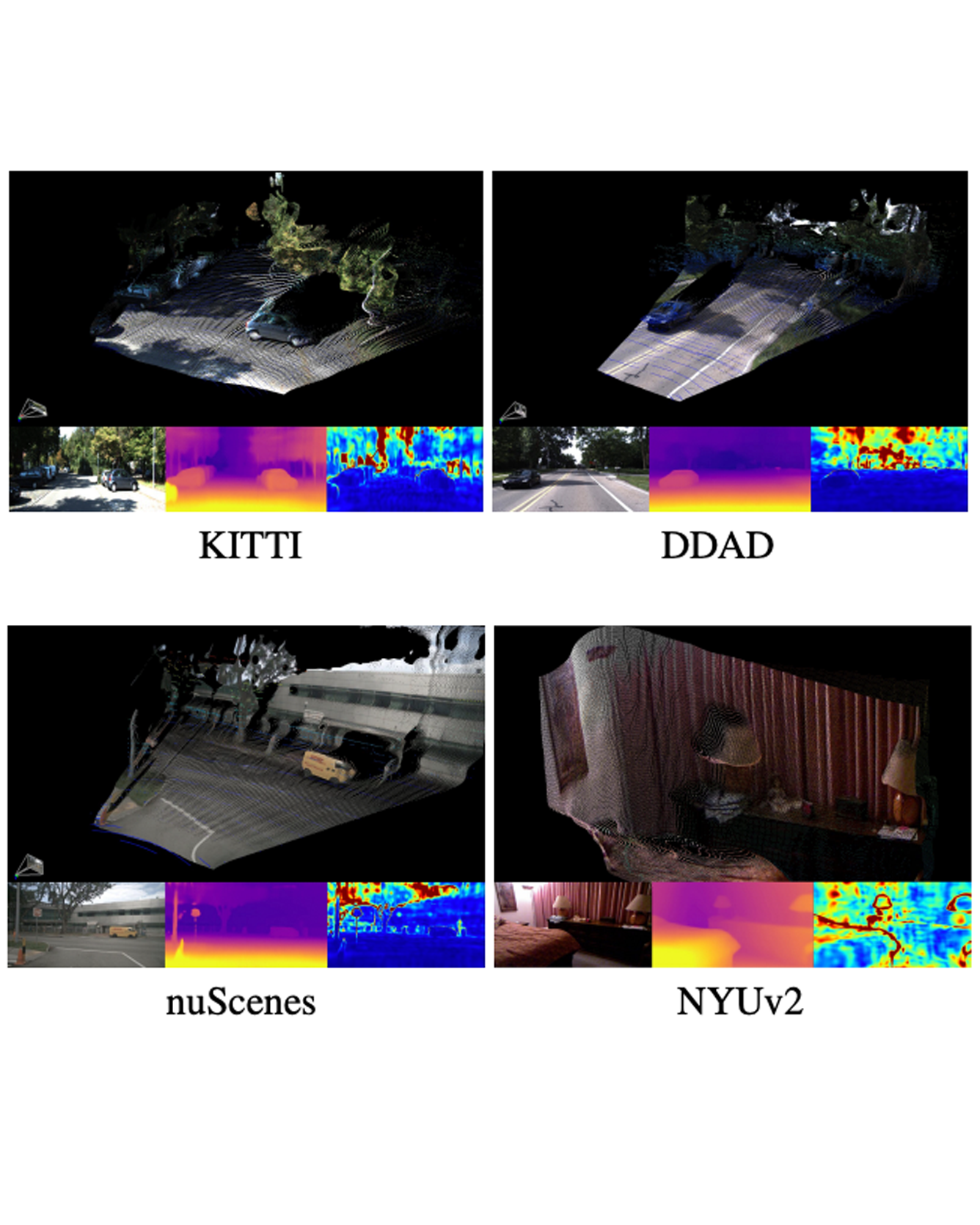

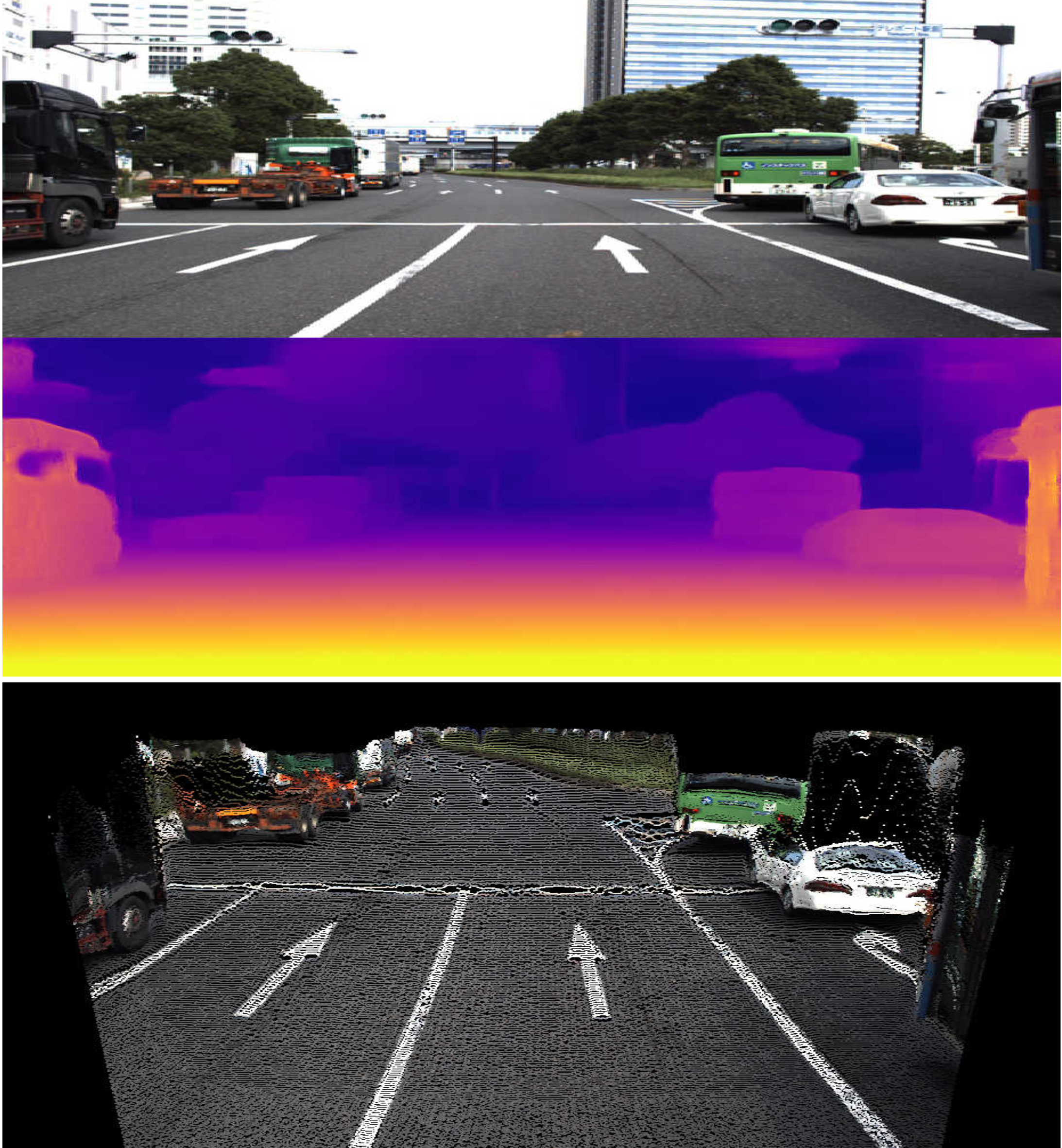

Although cameras are ubiquitous, robotic platforms typically rely on active sensors like LiDAR for direct 3D perception. In this work, we propose a novel self-supervised monocular depth estimation method combining geometry with a new deep network, PackNet, learned only from unlabeled monocular videos. Our architecture leverages novel symmetrical packing and unpacking blocks to jointly learn to compress and decompress detail-preserving representations using 3D convolutions. Although self-supervised, our method outperforms other self, semi, and fully supervised methods on the KITTI benchmark. The 3D inductive bias in PackNet enables it to scale with input resolution and number of parameters without overfitting, generalizing better on out-of-domain data such as the NuScenes dataset. Furthermore, it does not require large-scale supervised pretraining on ImageNet and can run in real-time. Finally, we release DDAD (Dense Depth for Automated Driving), a new urban driving dataset with more challenging and accurate depth evaluation, thanks to longer-range and denser ground-truth depth generated from high-density LiDARs mounted on a fleet of self-driving cars operating world-wide. Read More

Citation: Guizilini, Vitor, Rares Ambrus, Sudeep Pillai, and Adrien Gaidon. "Packnet-sfm: 3d packing for self-supervised monocular depth estimation." CVPR, 2020,