Featured Publications


TRI Author: Adrien Gaidon

All Authors: Felipe Codevilla, Eder Santana, Antonio M. López, Adrien Gaidon

Driving requires reacting to a wide variety of complex environmental conditions and agent behaviors. Explicitly modeling each possible scenario is unrealistic. In contrast, imitation learning can, in theory, leverage data from large fleets of human-driven cars. Behavior cloning in particular has been successfully used to learn simple visuomotor policies end-to-end, but scaling to the full spectrum of driving behaviors remains an unsolved problem. In this paper, we propose a new benchmark to experimentally investigate the scalability and limitations of behavior cloning. We show that behavior cloning leads to state-of-the-art results, executing complex lateral and longitudinal maneuvers, even in unseen environments, without being explicitly programmed to do so. However, we confirm some limitations of the behavior cloning approach: some well-known limitations (e.g., dataset bias and overfitting), new generalization issues (e.g., dynamic objects and the lack of causal modeling), and training instabilities, all requiring further research before behavior cloning can graduate to real-world driving. The code, dataset, benchmark, and agent studied in this paper can be found at github.com/felipecode/coiltraine/blob/master/docs/exploring_limitations.md
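At its core, behavior cloning is supervised regression from observations to the actions a human demonstrator took. The sketch below illustrates only that idea: it fits a linear steering policy by least squares to a handful of hypothetical demonstrations (lateral offset from the lane center mapped to a steering command). It is not the paper's agent, which is a deep end-to-end network; the data, units, and the function name `fit_linear_policy` are assumptions for illustration.

```python
def fit_linear_policy(observations, actions):
    """Behavior cloning as supervised regression: least-squares fit of
    action = w * observation + b to demonstrated (observation, action) pairs."""
    n = len(observations)
    mean_x = sum(observations) / n
    mean_y = sum(actions) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(observations, actions))
    var = sum((x - mean_x) ** 2 for x in observations)
    w = cov / var
    b = mean_y - w * mean_x

    def policy(observation):
        # The cloned policy maps an observation directly to an action.
        return w * observation + b

    return policy


# Hypothetical demonstrations: lateral offset from lane center (m) -> steering command.
demo_obs = [-1.0, -0.5, 0.0, 0.5, 1.0]
demo_act = [0.8, 0.4, 0.0, -0.4, -0.8]

policy = fit_linear_policy(demo_obs, demo_act)
print(round(policy(0.25), 3))  # -0.2
```

Because the policy only ever sees states that appear in the demonstrations, anything outside that distribution (for example, a large offset the expert never produced) is extrapolated blindly, which is one intuition behind the dataset-bias and generalization issues listed above.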

Citation: Codevilla, Felipe, Eder Santana, Antonio M. López, and Adrien Gaidon. "Exploring the limitations of behavior cloning for autonomous driving." In Proceedings of the IEEE International Conference on Computer Vision, pp. 9329-9338. 2019.

TRI Author: Wadim Kehl

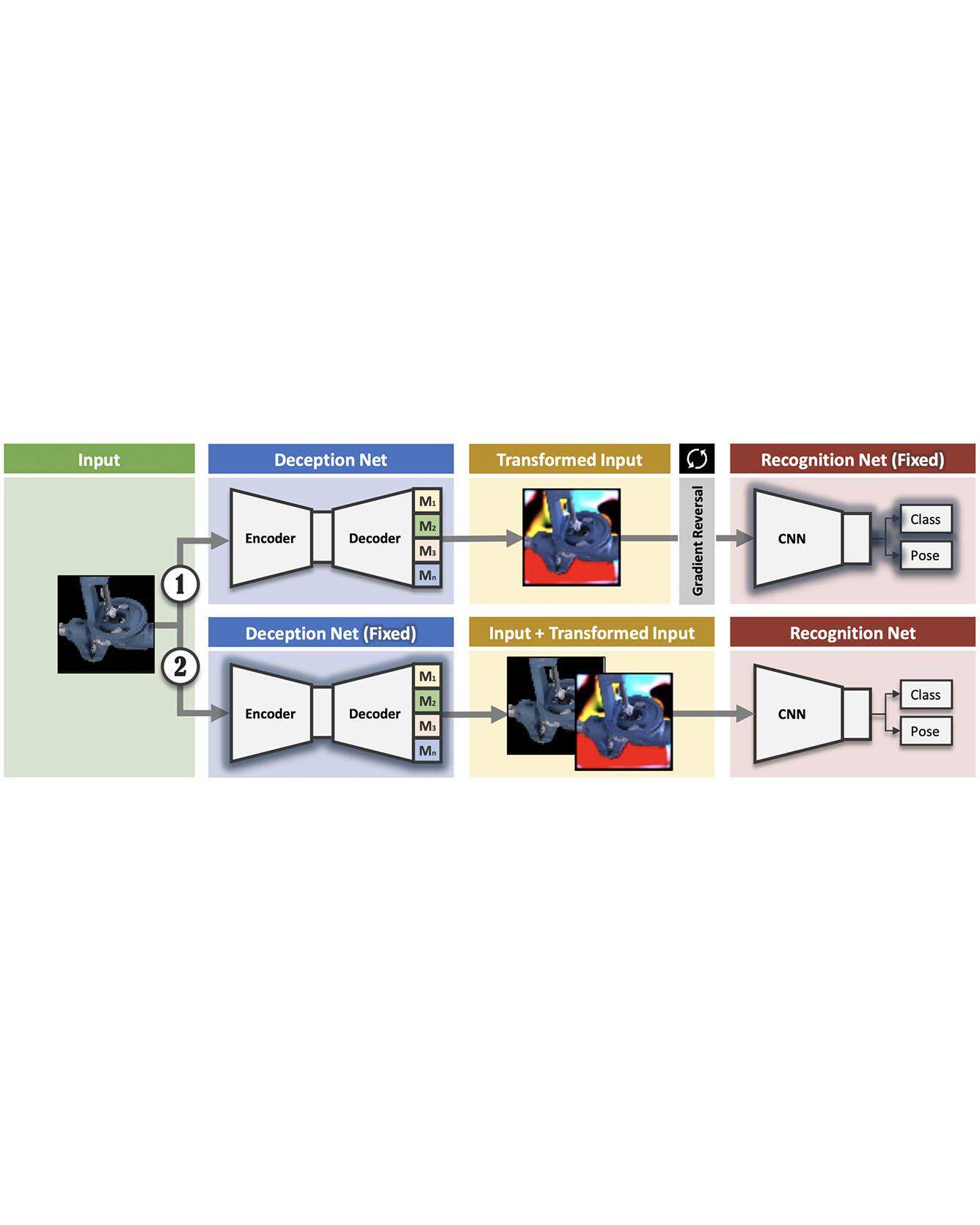

All Authors: Sergey Zakharov, Wadim Kehl, Slobodan Ilic

We present a novel approach to tackle domain adaptation between synthetic and real data. Instead of employing "blind" domain randomization, i.e., augmenting synthetic renderings with random backgrounds or changing illumination and colorization, we leverage the task network as its own adversarial guide toward useful augmentations that maximize the uncertainty of the output. To this end, we design a min-max optimization scheme where a given task competes against a special deception network to minimize the task error subject to the specific constraints enforced by the deceiver. The deception network samples from a family of differentiable pixel-level perturbations and exploits the task architecture to find the most destructive augmentations. Unlike GAN-based approaches that require unlabeled data from the target domain, our method achieves robust mappings that scale well to multiple target distributions from source data alone. We apply our framework to the tasks of digit recognition on enhanced MNIST variants, classification and object pose estimation on the Cropped LineMOD dataset, as well as semantic segmentation on the Cityscapes dataset, and compare it to a number of domain adaptation approaches, thereby demonstrating similar results with superior generalization capabilities.
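The min-max scheme can be illustrated on a toy problem. In the minimal sketch below, the "task" is a 1-D logistic classifier and the "deceiver" is a single differentiable brightness shift `delta`, bounded to `[-EPS, EPS]`, that takes gradient-ascent steps on the task loss while the task takes gradient-descent steps on the perturbed inputs. Everything here (the scalar perturbation, the data, `EPS`, learning rate, step count) is a hypothetical simplification; the actual DeceptionNet deceiver is a network sampling from a family of pixel-level perturbations.

```python
import math

EPS = 0.5  # hypothetical bound on the deceiver's brightness shift


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def loss_and_grads(w, c, delta, xs, ys):
    """Mean logistic loss of the task on perturbed inputs x + delta, plus gradients."""
    n = len(xs)
    loss = gw = gc = gd = 0.0
    for x, y in zip(xs, ys):
        z = w * (x + delta) + c
        loss += max(z, 0.0) - y * z + math.log1p(math.exp(-abs(z)))
        err = sigmoid(z) - y      # d(loss)/dz for the logistic loss
        gw += err * (x + delta)
        gc += err
        gd += err * w             # chain rule through the perturbed input
    return loss / n, gw / n, gc / n, gd / n


def train(xs, ys, steps=2000, lr=0.5):
    w = c = delta = 0.0
    for _ in range(steps):
        # Deceiver: gradient *ascent* on the task loss, projected onto [-EPS, EPS].
        _, _, _, gd = loss_and_grads(w, c, delta, xs, ys)
        delta = max(-EPS, min(EPS, delta + lr * gd))
        # Task: gradient descent on the loss of the deceived inputs.
        _, gw, gc, _ = loss_and_grads(w, c, delta, xs, ys)
        w -= lr * gw
        c -= lr * gc
    return w, c, delta


xs = [-3.0, -2.0, -1.5, 1.0, 2.0, 3.5]
ys = [0, 0, 0, 1, 1, 1]
w, c, delta = train(xs, ys)
clean_acc = sum((w * x + c > 0) == bool(y) for x, y in zip(xs, ys)) / len(xs)
print(f"w={w:.2f} c={c:.2f} delta={delta:+.2f} clean accuracy={clean_acc:.2f}")
```

The projection onto `[-EPS, EPS]` plays the role of the deceiver's constraints: without it, the adversary could simply destroy the input instead of producing a hard but still meaningful augmentation.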

Citation: Zakharov, Sergey, Wadim Kehl, and Slobodan Ilic. "DeceptionNet: Network-Driven Domain Randomization." In Proceedings of the IEEE International Conference on Computer Vision, pp. 532-541. 2019.


TRI Author: Adrien Gaidon

All Authors: César Roberto de Souza, Adrien Gaidon, Yohann Cabon, Naila Murray, Antonio Manuel López

Deep video action recognition models have been highly successful in recent years but require large quantities of manually annotated data, which are expensive and laborious to obtain. In this work, we investigate the generation of synthetic training data for video action recognition, as synthetic data have been successfully used to supervise models for a variety of other computer vision tasks. We propose an interpretable parametric generative model of human action videos that relies on procedural generation, physics models and other components of modern game engines. With this model we generate a diverse, realistic, and physically plausible dataset of human action videos, called PHAV for "Procedural Human Action Videos". PHAV contains a total of 39,982 videos, with more than 1000 examples for each of 35 action categories. Our video generation approach is not limited to existing motion capture sequences: 14 of these 35 categories are procedurally defined synthetic actions. In addition, each video is represented with 6 different data modalities, including RGB, optical flow and pixel-level semantic labels. These modalities are generated almost simultaneously using the Multiple Render Targets feature of modern GPUs. In order to leverage PHAV, we introduce a deep multi-task (i.e., one that considers action classes from multiple datasets) representation learning architecture that is able to simultaneously learn from synthetic and real video datasets, even when their action categories differ. Our experiments on the UCF-101 and HMDB-51 benchmarks suggest that combining our large set of synthetic videos with small real-world datasets can boost recognition performance. Our approach also significantly outperforms video representations produced by fine-tuning state-of-the-art unsupervised generative models of videos.

Citation: de Souza, César Roberto, Adrien Gaidon, Yohann Cabon, Naila Murray, and Antonio Manuel López. "Generating Human Action Videos by Coupling 3D Game Engines and Probabilistic Graphical Models." International Journal of Computer Vision (2019): 1-32.

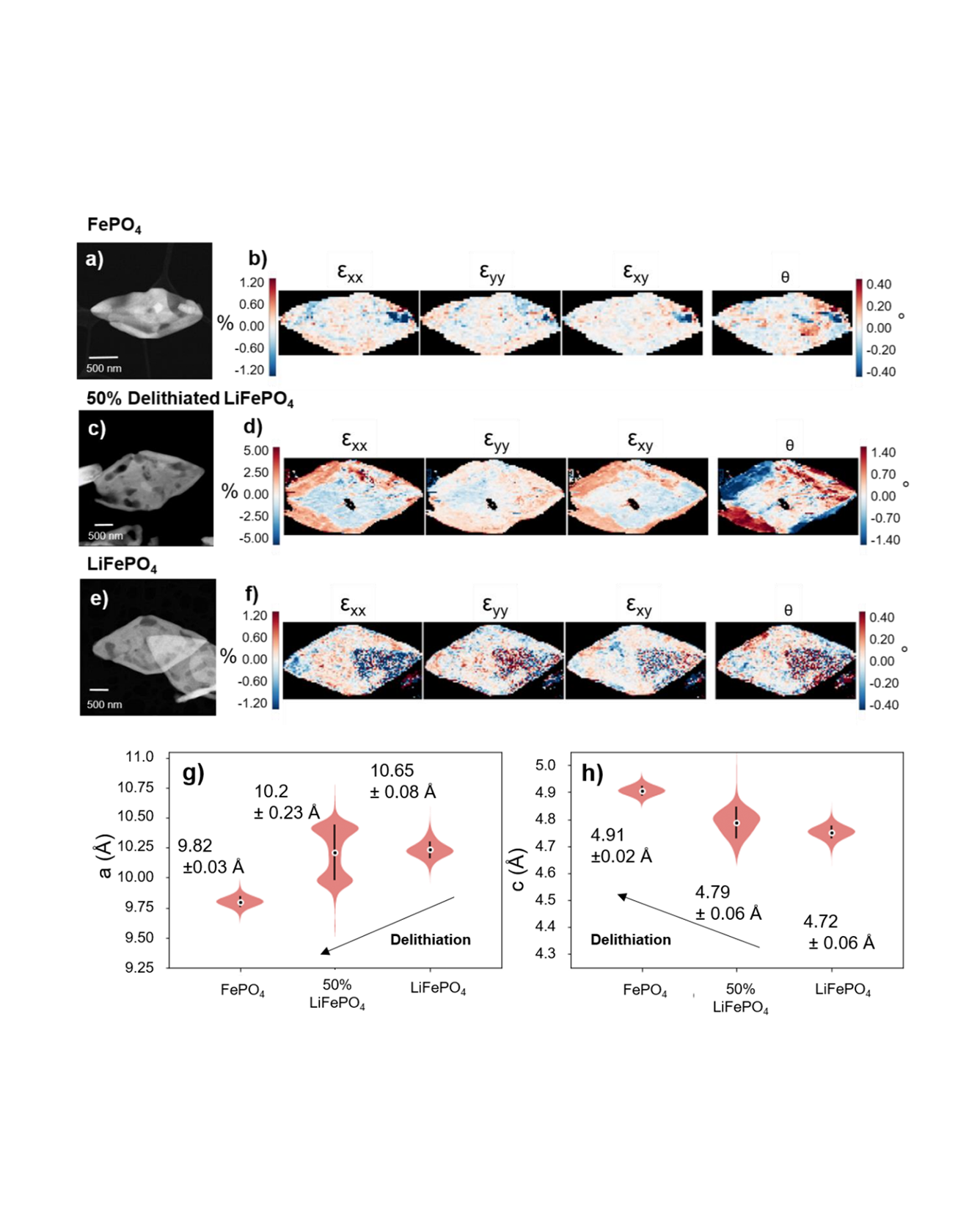

Phase separation is an important factor for many Li-ion battery materials as it significantly impacts the capacity and cycle life of a battery. Forming Li-rich and Li-poor domains, phase separation induces changes to the composition and microstructure of these materials [1].

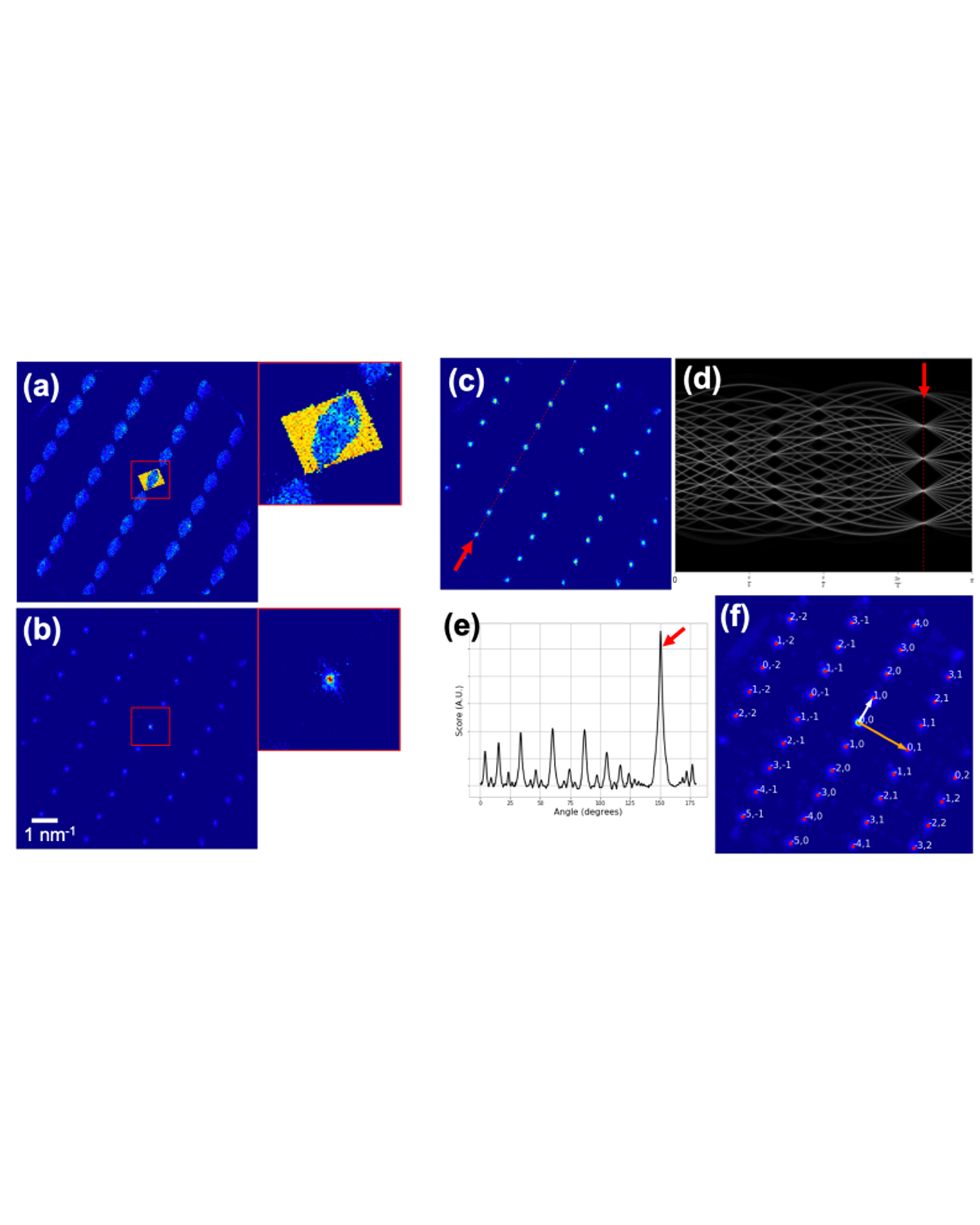

py4DSTEM is a free, open-source, Python-based data analysis package for the emerging field of four-dimensional scanning transmission electron microscopy (4D-STEM). In 4D-STEM, the electron beam is rastered across a sample and a diffraction pattern is collected at each scan position, yielding highly information-rich datasets which can be used to virtually recreate images that map sample orientation, local lattice parameters, strain, and short/medium range ordering, or to reconstruct the electrostatic potential itself [1]. These datasets can easily reach tens or even hundreds of GB in size, and almost always require significant computational processing to extract useful results. The aim of py4DSTEM is to make 4D-STEM data analysis easy and accessible for everyone [2].
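To make "virtually recreate images" concrete: a 4D-STEM dataset can be indexed as `data[rx][ry][qx][qy]` (scan position, then detector pixel), and a virtual image is formed by integrating each diffraction pattern over a chosen detector region. The sketch below uses plain Python and a tiny synthetic dataset; it does not use or reproduce py4DSTEM's API, and the function name `virtual_image`, the annular-mask choice, and the data are assumptions for illustration.

```python
import math


def virtual_image(data, center, r_inner, r_outer):
    """Integrate each diffraction pattern over an annular virtual detector.

    data[rx][ry][qx][qy] is the intensity at scan position (rx, ry) and
    detector pixel (qx, qy). r_inner = 0 gives a bright-field disk.
    """
    cx, cy = center
    image = []
    for row in data:
        image_row = []
        for pattern in row:
            total = 0.0
            for qx, line in enumerate(pattern):
                for qy, intensity in enumerate(line):
                    if r_inner <= math.hypot(qx - cx, qy - cy) <= r_outer:
                        total += intensity
            image_row.append(total)
        image.append(image_row)
    return image


def synthetic_pattern(bright, dark):
    # Hypothetical 5x5 pattern: the central pixel carries the unscattered beam,
    # the corners carry intensity scattered to high angles.
    p = [[0.0] * 5 for _ in range(5)]
    p[2][2] = bright
    for qx, qy in [(0, 0), (0, 4), (4, 0), (4, 4)]:
        p[qx][qy] = dark
    return p


# A 2x2 scan of synthetic patterns.
data = [[synthetic_pattern(10.0, 0.0), synthetic_pattern(4.0, 1.5)],
        [synthetic_pattern(7.0, 0.75), synthetic_pattern(10.0, 0.0)]]
bf = virtual_image(data, center=(2, 2), r_inner=0.0, r_outer=1.0)   # bright field
adf = virtual_image(data, center=(2, 2), r_inner=2.5, r_outer=3.0)  # annular dark field
print(bf)   # [[10.0, 4.0], [7.0, 10.0]]
print(adf)  # [[0.0, 6.0], [3.0, 0.0]]
```

Every virtual image needs a full pass over all four dimensions, which is why datasets of tens to hundreds of GB call for the significant computational processing mentioned above.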

TRI Authors: Nikos Arechiga, Soonho Kong

All Authors: Sicun Gao, James Kapinski, Jyotirmoy Deshmukh, Nima Roohi, Armando Solar-Lezama, Nikos Arechiga, and Soonho Kong

We formulate numerically robust inductive proof rules for unbounded stability and safety properties of continuous dynamical systems. These induction rules robustify standard notions of Lyapunov functions and barrier certificates so that they can tolerate small numerical errors. In this way, numerically driven decision procedures can establish a sound and relatively complete proof system for unbounded properties of very general nonlinear systems. We demonstrate the effectiveness of the proposed rules for rigorously verifying unbounded properties of various nonlinear systems, including a challenging powertrain control model.

Citation: Gao, Sicun, James Kapinski, Jyotirmoy Deshmukh, Nima Roohi, Armando Solar-Lezama, Nikos Arechiga, and Soonho Kong. "Numerically-Robust Inductive Proof Rules for Continuous Dynamical Systems." In International Conference on Computer Aided Verification, pp. 137-154. Springer, Cham, 2019.

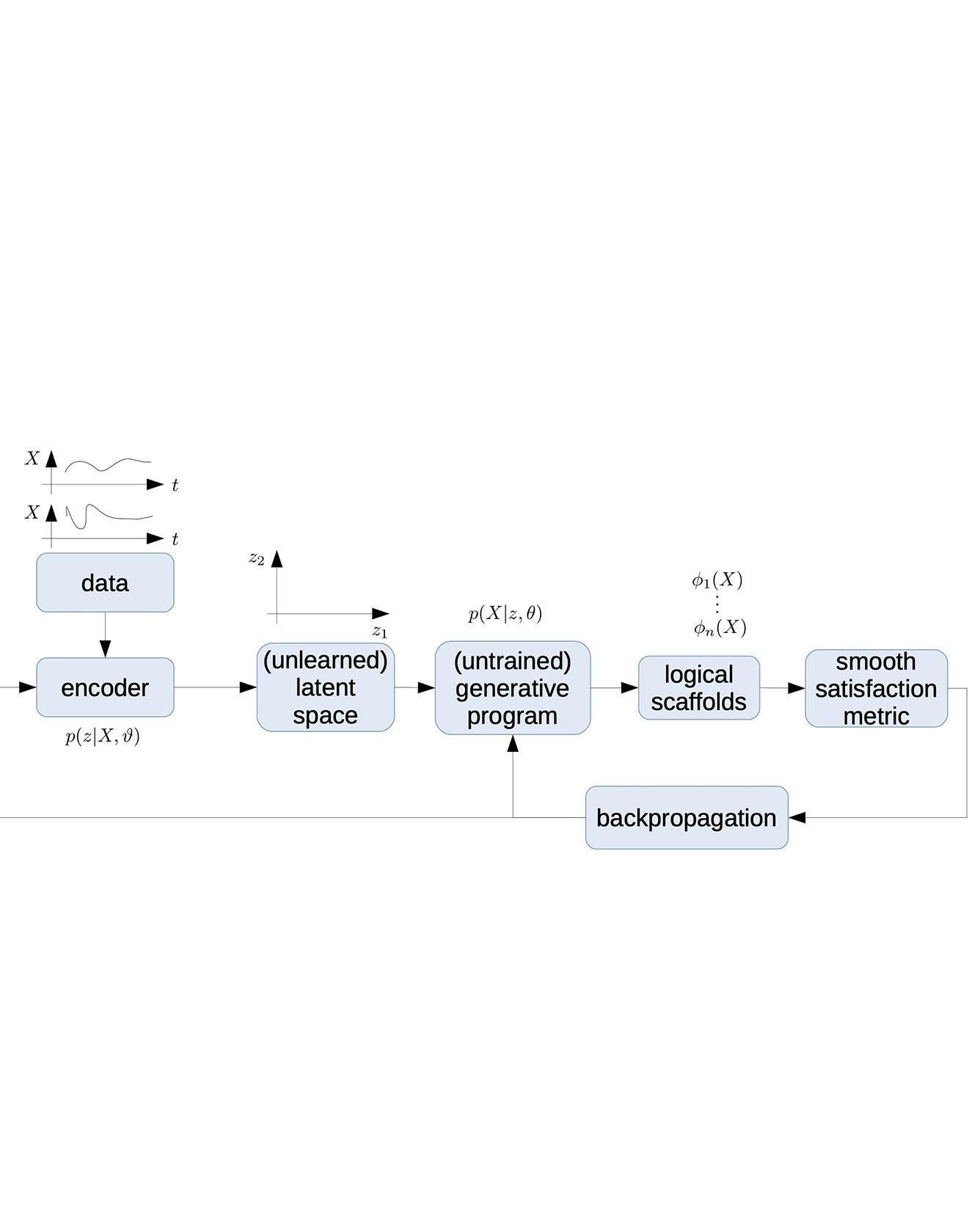

TRI Authors: Nikos Arechiga, Jonathan DeCastro, Soonho Kong

All Authors: Nikos Arechiga, Jonathan DeCastro, Soonho Kong, Karen Leung

We describe the concept of logical scaffolds, which can be used to improve the quality of software that relies on AI components. We explain how some of the existing ideas on runtime monitors for perception systems can be seen as a specific instance of logical scaffolds. Furthermore, we describe how logical scaffolds may be useful for improving AI programs beyond perception systems, to include general prediction systems and agent behavior models.

Citation: Arechiga, Nikos, Jonathan DeCastro, Soonho Kong, and Karen Leung. "Better AI through Logical Scaffolding." In FoMLaS 2019 Workshop at CAV 2019, (2019).
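As a rough illustration of a runtime monitor for a perception system, the sketch below wraps a (hypothetical) distance-to-lead-vehicle estimate and checks a simple logical property on every pair of consecutive frames: the estimate may not change faster than a physically plausible relative speed. The property, the `Detection` type, the `scaffold_monitor` name, and the 60 m/s bound are all assumptions chosen for illustration, not the scaffolds defined in the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    t: float          # timestamp (s)
    distance: float   # estimated distance to the lead vehicle (m)


def scaffold_monitor(detections: List[Detection], max_rel_speed: float = 60.0) -> List[float]:
    """Return timestamps where the perception output violates the property
    |d(t2) - d(t1)| <= max_rel_speed * (t2 - t1) for consecutive frames."""
    violations = []
    for prev, cur in zip(detections, detections[1:]):
        dt = cur.t - prev.t
        if abs(cur.distance - prev.distance) > max_rel_speed * dt:
            violations.append(cur.t)
    return violations


# A single glitched estimate at t = 0.2 s breaks the property twice:
# once when the distance jumps down and once when it jumps back.
frames = [Detection(0.0, 30.0), Detection(0.1, 29.5),
          Detection(0.2, 12.0), Detection(0.3, 28.6)]
print(scaffold_monitor(frames))  # [0.2, 0.3]
```

The monitor does not need to know how the perception network works; it only checks the output against a logical property, which is what lets the same pattern extend to prediction systems and behavior models.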

TRI Author: Soonho Kong

All Authors: Calvin Huang, Soonho Kong, Sicun Gao, and Damien Zufferey

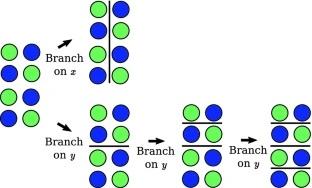

Interval Constraint Propagation (ICP) is a powerful method for solving general nonlinear constraints over real numbers. ICP uses interval arithmetic to prune the space of potential solutions and, when constraint propagation fails, divides the space into smaller regions and continues recursively. ICP was originally designed to compute a paving, i.e., a set of boxes covering all solutions of a problem. Even when the whole domain must be covered, the choice of branching method matters a great deal. However, recent applications of ICP in decision procedures over the reals need only a single solution. Consequently, the order in which variables are chosen for branching becomes even more important.

In this work, we compare three different branching heuristics for ICP. The first and most commonly used method splits the problem along the dimension with the widest interval, i.e., the largest difference between upper and lower bound. The other two methods try to combine analytical and numerical properties of real functions with search-based methods. The second method, called smearing, uses gradient information of the constraints to choose variables that have the highest local impact on pruning. The third method, lookahead branching, designs a measure function to compare the effect of all variables on pruning operations in the next several steps.

We evaluate the performance of our methods on over 11,000 benchmarks from various sources. While the different branching methods exhibit significant differences on larger instances, none is consistently better. This shows the need for further research on branching heuristics when ICP is used to find a single solution rather than all solutions.
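The first two heuristics can be sketched in a few lines. Below, a box is a list of `(lo, hi)` intervals, and `gradients[j][i]` is an interval enclosure of the partial derivative of constraint `f_j` with respect to `x_i` over the box, computed by hand for the example rather than by an interval-arithmetic library. The smear score used, `max_j |df_j/dx_i| * width(x_i)`, is the classic smear function; the helper names and the example constraint are assumptions, and lookahead branching is not shown.

```python
def widest_variable(box):
    """Standard heuristic: branch on the variable with the widest interval."""
    return max(range(len(box)), key=lambda i: box[i][1] - box[i][0])


def smear_variable(box, gradients):
    """Smearing: branch on the variable maximizing max_j |df_j/dx_i| * width(x_i),
    where gradients[j][i] = (lo, hi) encloses df_j/dx_i over the box."""
    def smear(i):
        width = box[i][1] - box[i][0]
        return max(max(abs(g[i][0]), abs(g[i][1])) * width for g in gradients)
    return max(range(len(box)), key=smear)


def bisect(box, i):
    """Split the box in half along variable i."""
    lo, hi = box[i]
    mid = (lo + hi) / 2
    left, right = list(box), list(box)
    left[i], right[i] = (lo, mid), (mid, hi)
    return left, right


# Example constraint f(x, y) = x**2 + 100*y - 1 = 0 over x in [-2, 2], y in [0, 1].
box = [(-2.0, 2.0), (0.0, 1.0)]
# df/dx = 2x encloses to [-4, 4]; df/dy = 100 everywhere.
gradients = [[(-4.0, 4.0), (100.0, 100.0)]]

print(widest_variable(box))                        # 0: x is the widest interval
print(smear_variable(box, gradients))              # 1: y has far more impact on f
print(bisect(box, smear_variable(box, gradients)))
```

The example shows why the heuristics can disagree: x has the wider interval, but f is a hundred times more sensitive to y, so splitting y is likely to let propagation prune more.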

Citation: Huang, Calvin, Soonho Kong, Sicun Gao, and Damien Zufferey. "Evaluating Branching Heuristics in Interval Constraint Propagation for Satisfiability." In International Workshop on Numerical Software Verification, pp. 85-100. Springer, Cham, 2019.

TRI Authors: Jonathan DeCastro, Soonho Kong

All Authors: Daniel Jackson, Jonathan DeCastro, Soonho Kong, Dimitrios Koutentakis, Angela Leong Feng Ping, Armando Solar-Lezama, Mike Wang, Xin Zhang

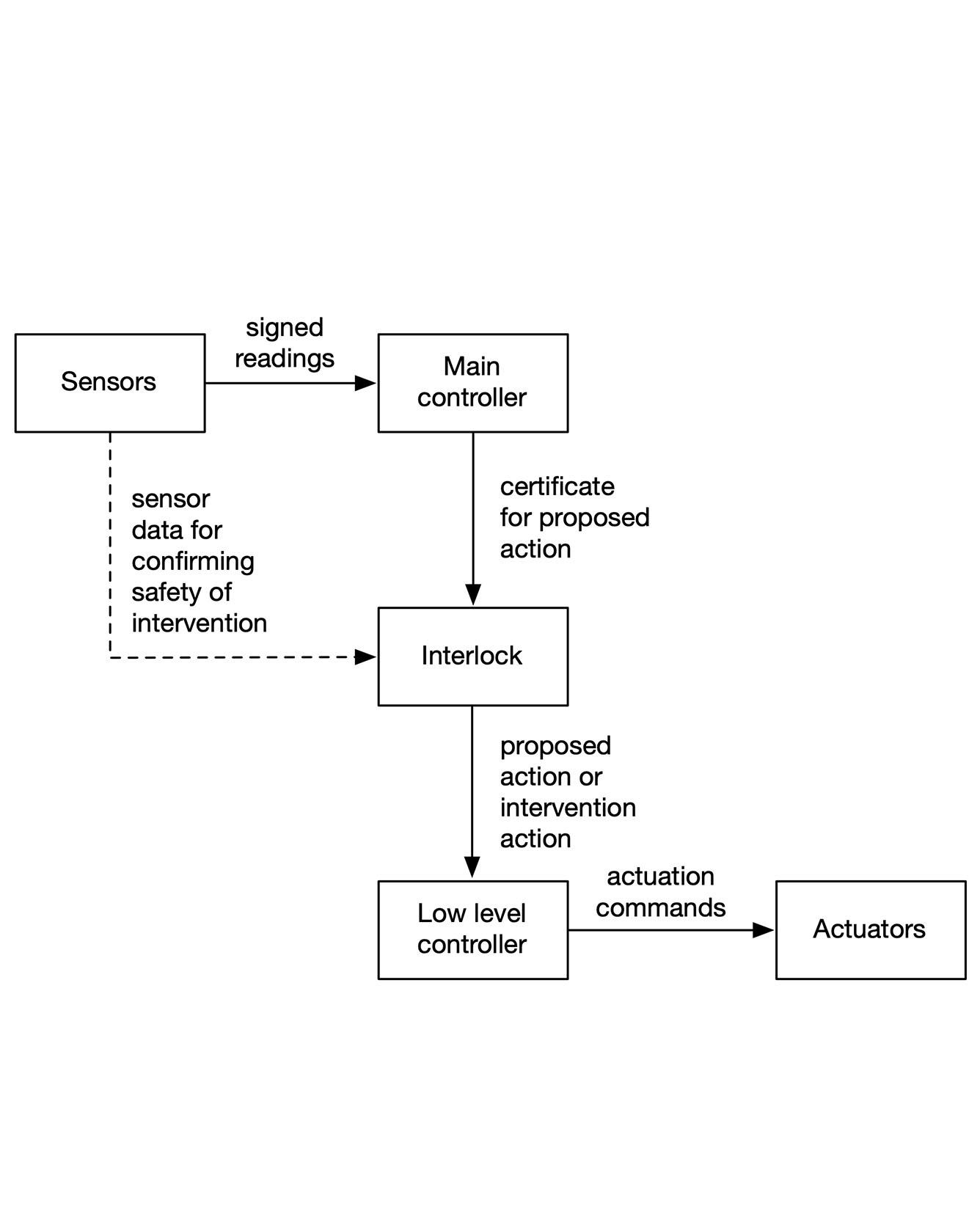

Certified control is a new architectural pattern for achieving high assurance of safety in autonomous cars. As with a traditional safety controller or interlock, a separate component oversees safety and intervenes to prevent safety violations. This component (along with sensors and actuators) comprises a trusted base that can ensure safety even if the main controller fails. But in certified control, the interlock does not use the sensors directly to determine when to intervene. Instead, the main controller is given the responsibility of presenting the interlock with a certificate that provides evidence that the proposed next action is safe. The interlock checks this certificate, and intervenes only if the check fails. Because generating such a certificate is usually much harder than checking one, the interlock can be smaller and simpler than the main controller, and thus assuring its correctness is more feasible.
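The division of labor can be sketched as follows. In this hypothetical example, the main controller proposes a speed together with a certificate: a claimed obstacle-free distance and the sensor returns it cites as evidence. The interlock only runs cheap checks (enough evidence, evidence consistent with the claim, stopping distance within the claim) and substitutes a fallback action if any check fails. The certificate contents, the braking model v²/(2a), and the constants are assumptions for illustration; the sketch also does not model how the interlock would trust that the cited returns are genuine and complete.

```python
from dataclasses import dataclass
from typing import List

MAX_DECEL = 6.0   # hypothetical guaranteed braking deceleration (m/s^2)
MIN_RETURNS = 3   # hypothetical minimum amount of supporting evidence


@dataclass
class Certificate:
    free_distance: float       # claimed obstacle-free distance ahead (m)
    lane_returns: List[float]  # distances (m) of sensor returns cited as evidence


@dataclass
class Proposal:
    speed: float               # speed (m/s) the main controller wants to hold
    certificate: Certificate


def check_certificate(p: Proposal) -> bool:
    """Interlock check: much cheaper than producing the plan and certificate."""
    cert = p.certificate
    if len(cert.lane_returns) < MIN_RETURNS:
        return False
    # The cited evidence must be consistent with the claim.
    if any(d < cert.free_distance for d in cert.lane_returns):
        return False
    # Stopping distance v^2 / (2a) must fit inside the certified free distance.
    return p.speed ** 2 / (2 * MAX_DECEL) <= cert.free_distance


def interlock(p: Proposal, fallback_speed: float = 0.0) -> float:
    """Pass the proposed action through, or intervene if the check fails."""
    return p.speed if check_certificate(p) else fallback_speed


ok = Proposal(20.0, Certificate(40.0, [41.0, 42.5, 45.0]))
too_fast = Proposal(25.0, Certificate(40.0, [41.0, 42.5, 45.0]))
inconsistent = Proposal(10.0, Certificate(40.0, [15.0, 42.0, 44.0]))
print(interlock(ok), interlock(too_fast), interlock(inconsistent))  # 20.0 0.0 0.0
```

All the hard work (perception, planning, choosing what evidence to cite) stays in the main controller; the interlock is a few comparisons, which is the property that makes its correctness easier to assure.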

Citation: Jackson, Daniel, Jonathan DeCastro, Soonho Kong, Dimitrios Koutentakis, Angela Leong Feng Ping, Armando Solar-Lezama, Mike Wang, and Xin Zhang. "Certified Control for Self-Driving Cars." In DARS 2019 Workshop at CAV 2019.

TRI Authors: Wadim Kehl, Adrien Gaidon

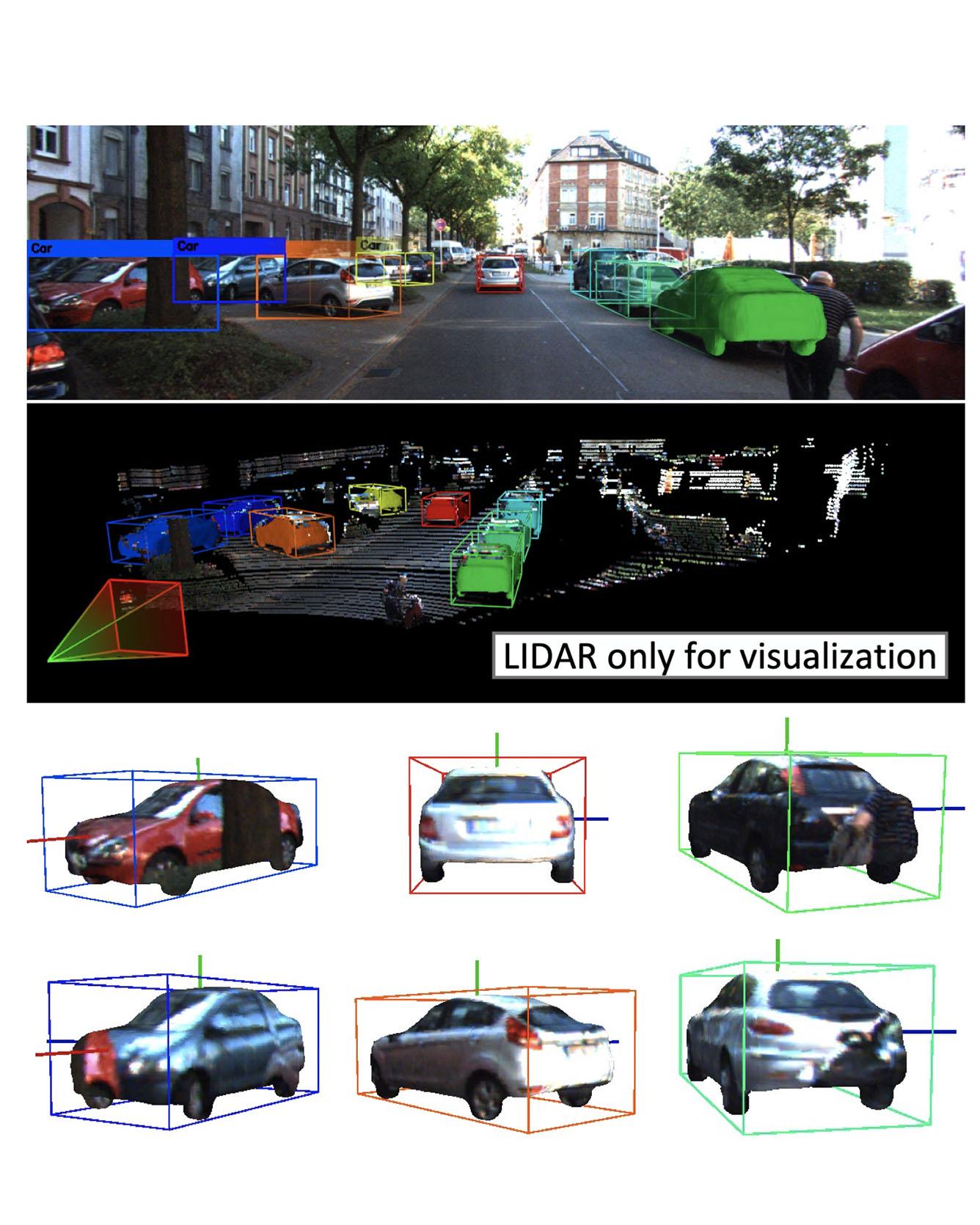

All Authors: Fabian Manhardt, Wadim Kehl, Adrien Gaidon

We present a deep learning method for end-to-end monocular 3D object detection and metric shape retrieval. We propose a novel loss formulation by lifting 2D detection, orientation, and scale estimation into 3D space. Instead of optimizing these quantities separately, the 3D instantiation allows the metric misalignment of boxes to be measured properly. We experimentally show that our 10D lifting of sparse 2D Regions of Interest (RoIs) achieves strong results both for 6D pose and for recovery of the textured metric geometry of instances. This further enables 3D synthetic data augmentation via inpainting recovered meshes directly onto the 2D scenes. We evaluate on KITTI3D against other strong monocular methods and demonstrate that our approach doubles the AP on the 3D pose metrics on the official test set, defining the new state of the art.
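The lifting idea can be sketched with a pinhole camera: back-project the predicted 2D box center with a predicted metric depth, build the 8 corners of a 3D box from predicted dimensions and orientation, and measure misalignment as the distance between corresponding corners, so that errors in depth, rotation, and size are all expressed in the same metric units. This is a simplified sketch in the spirit of the abstract, not the paper's implementation: it uses a yaw angle instead of a full 3D rotation, and the intrinsics, box values, and function names are hypothetical.

```python
import math


def backproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at metric depth into camera coordinates."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def box_corners(center, dims, yaw):
    """8 corners of a 3D box of size (w, h, l), rotated by yaw about the camera y-axis."""
    x0, y0, z0 = center
    w, h, l = dims
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-w / 2, w / 2):
        for dy in (-h / 2, h / 2):
            for dz in (-l / 2, l / 2):
                corners.append((x0 + c * dx + s * dz, y0 + dy, z0 - s * dx + c * dz))
    return corners


def corner_loss(pred, gt):
    """Mean L1 distance between corresponding corners (metric misalignment)."""
    return sum(abs(p - g) for pc, gc in zip(pred, gt) for p, g in zip(pc, gc)) / len(pred)


# Hypothetical intrinsics and predictions for one RoI.
fx = fy = 700.0
cx, cy = 600.0, 180.0
pred_center = backproject(670.0, 180.0, 20.0, fx, fy, cx, cy)  # (2.0, 0.0, 20.0)
dims, yaw = (1.6, 1.5, 4.0), 0.0

pred = box_corners(pred_center, dims, yaw)
gt = box_corners((2.0, 0.0, 21.0), dims, yaw)  # ground truth is 1 m farther away
loss = corner_loss(pred, gt)
print(loss)  # 1.0
```

Here a depth error alone shows up as a 1 m corner misalignment; in a loss of this form, a wrong orientation or scale would be penalized in the same metric units, rather than each quantity getting its own separately weighted term.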

Citation: Manhardt, Fabian, Wadim Kehl, and Adrien Gaidon. "ROI-10D: Monocular Lifting of 2D Detection to 6D Pose and Metric Shape." In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2069-2078. 2019.