Featured Publications

All Publications

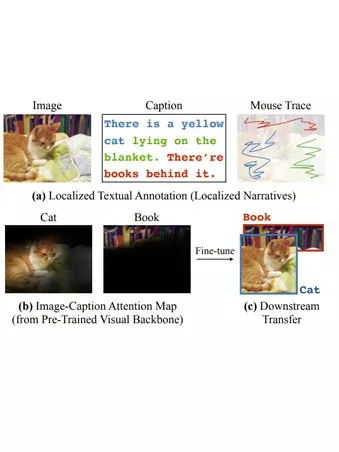

Computer vision tasks such as object detection and semantic/instance segmentation rely on the painstaking annotation of large training datasets. In this paper, we propose LocTex, which takes advantage of low-cost localized textual annotations (i.e., captions and synchronized mouseover gestures) to reduce the annotation effort. We introduce a contrastive pre-training framework between images and captions, and propose to supervise the cross-modal attention map with rendered mouse traces to provide coarse localization signals. Our learned visual features capture rich semantics (from free-form captions) and accurate localization (from mouse traces), which are very effective when transferred to various downstream vision tasks. Compared with ImageNet supervised pre-training, LocTex can reduce the size of the pre-training dataset by 10× or the target dataset by 2× while achieving comparable or even improved performance on COCO instance segmentation. When provided with the same amount of annotations, LocTex achieves around 4% higher accuracy than the previous state-of-the-art “vision+language” pre-training approach on the task of PASCAL VOC image classification.
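As a rough illustration of contrastive pre-training between images and captions, the sketch below computes a symmetric InfoNCE-style loss over a small batch of paired embeddings. The abstract does not state the exact loss, so the InfoNCE form, the `temperature` value, and all function names here are assumptions for illustration, and the attention-map supervision from mouse traces is not covered.

```python
import math


def _normalize(vec):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def _diagonal_cross_entropy(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        row_max = max(row)  # subtract the max for numerical stability
        log_sum_exp = row_max + math.log(sum(math.exp(z - row_max) for z in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_contrastive_loss(image_emb, caption_emb, temperature=0.1):
    """InfoNCE-style loss; image_emb[i] and caption_emb[i] form a positive pair.

    The temperature is an assumed hyperparameter, not a value from the paper.
    """
    images = [_normalize(v) for v in image_emb]
    captions = [_normalize(v) for v in caption_emb]
    # Cosine similarity of every image with every caption, scaled by temperature.
    logits = [
        [sum(a * b for a, b in zip(img, cap)) / temperature for cap in captions]
        for img in images
    ]
    logits_transposed = [list(col) for col in zip(*logits)]
    image_to_caption = _diagonal_cross_entropy(logits)
    caption_to_image = _diagonal_cross_entropy(logits_transposed)
    return 0.5 * (image_to_caption + caption_to_image)


# Toy embeddings: correctly paired captions should give a lower loss.
image_batch = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = symmetric_contrastive_loss(image_batch, [[1.0, 0.0], [0.0, 1.0]])
shuffled_loss = symmetric_contrastive_loss(image_batch, [[0.0, 1.0], [1.0, 0.0]])
print(aligned_loss < shuffled_loss)
```

Minimizing a loss of this kind pulls each image embedding toward its own caption and pushes it away from the other captions in the batch.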

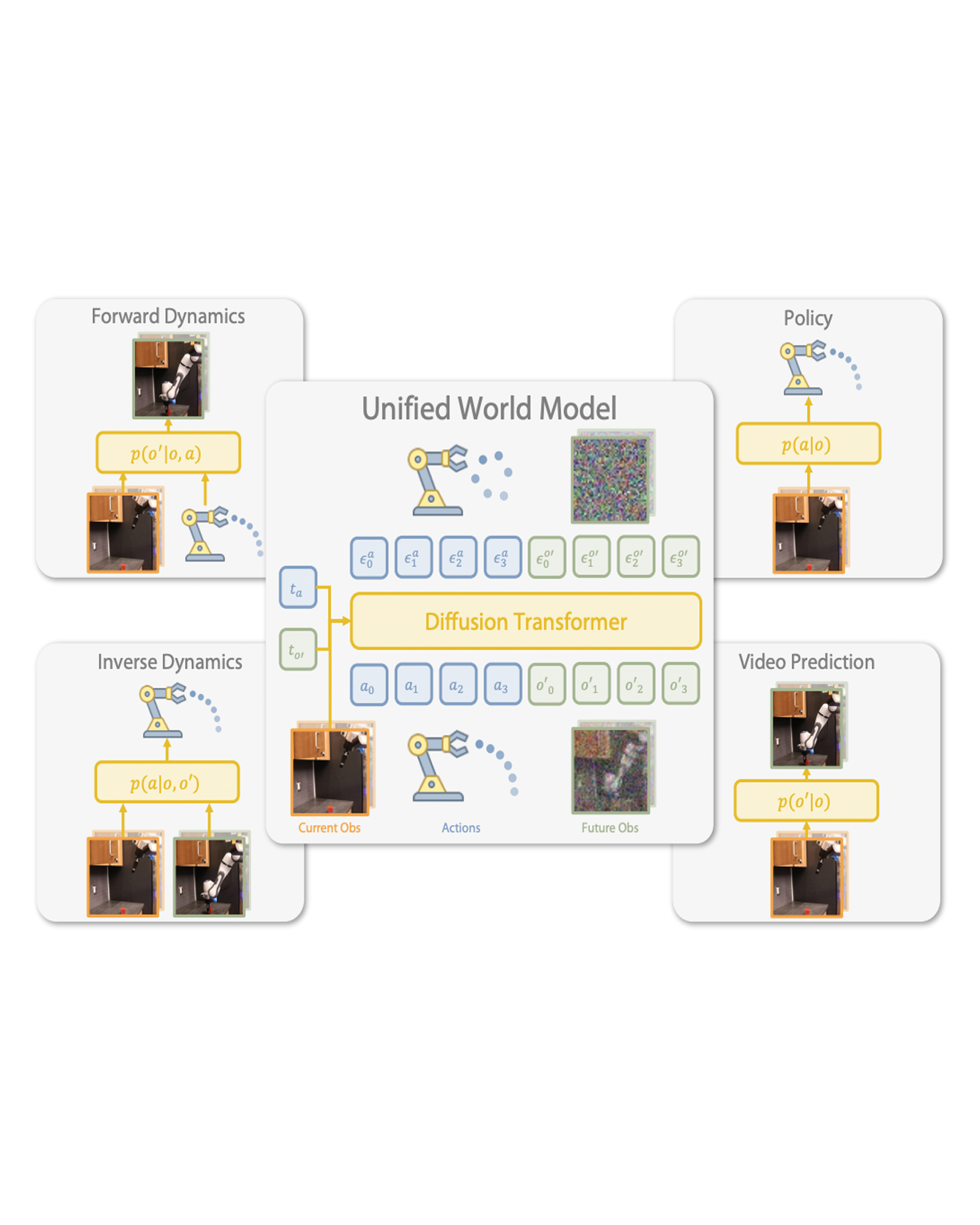

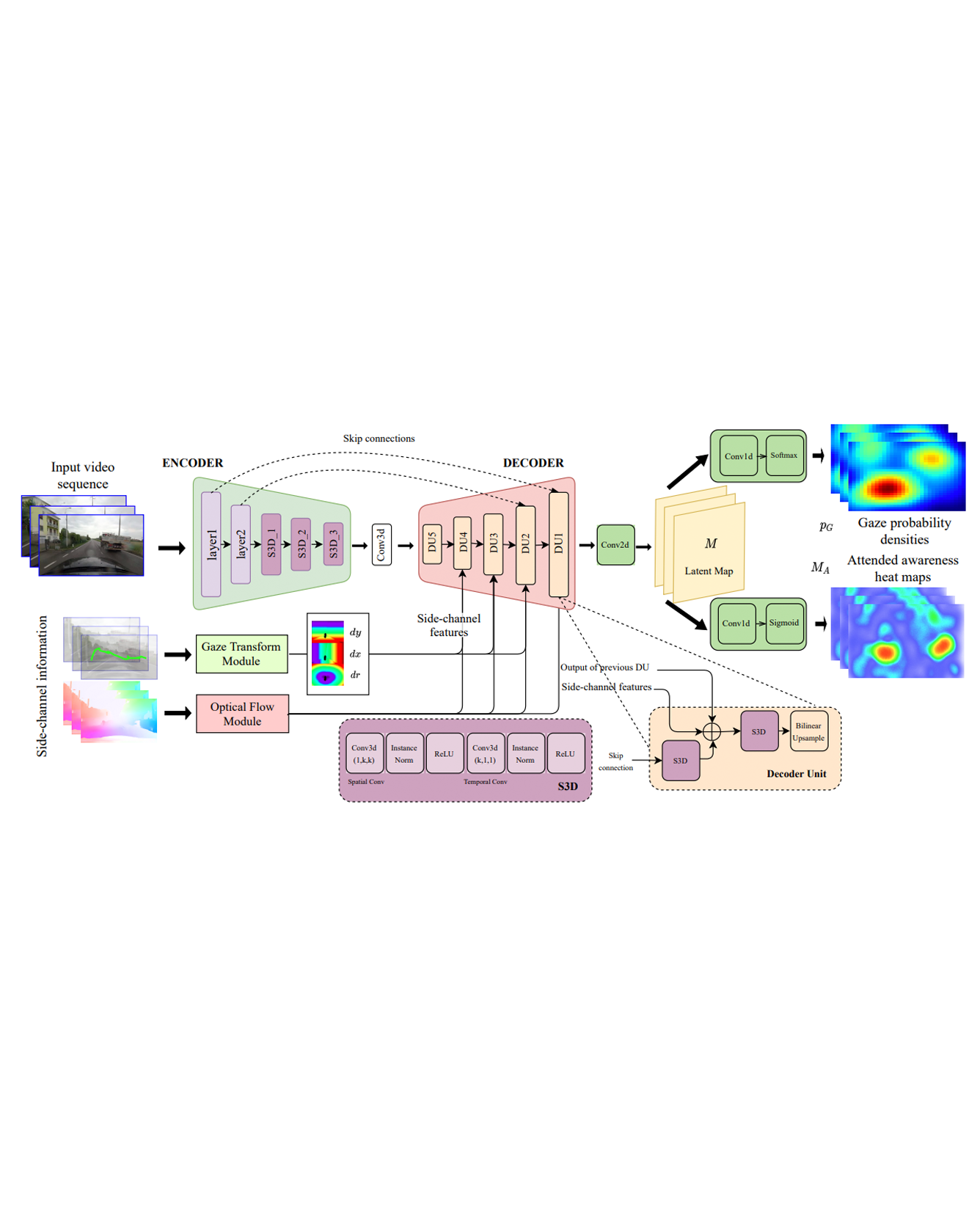

We propose a computational model to estimate a person’s attended awareness of their environment. We define “attended awareness” to be those parts of a potentially dynamic scene which a person has attended to in recent history and which they are still likely to be physically aware of. Our model takes as input scene information in the form of a video and noisy gaze estimates, and outputs visual saliency, a refined gaze estimate and an estimate of the person’s attended awareness. In order to test our model, we capture a new dataset with a high-precision gaze tracker including 24.5 hours of gaze sequences from 23 subjects attending to videos of driving scenes. The dataset also contains third-party annotations of the subjects’ attended awareness based on observations of their scan path. Our results show that our model is able to reasonably estimate attended awareness in a controlled setting, and in the future could potentially be extended to real egocentric driving data to help enable more effective ahead-of-time warnings in safety systems and thereby augment driver performance. We also demonstrate our model’s effectiveness on the tasks of saliency, gaze calibration and denoising, using both our dataset and an existing saliency dataset. We make our model and dataset available at https://github.com/ToyotaResearchInstitute/att-aware/.

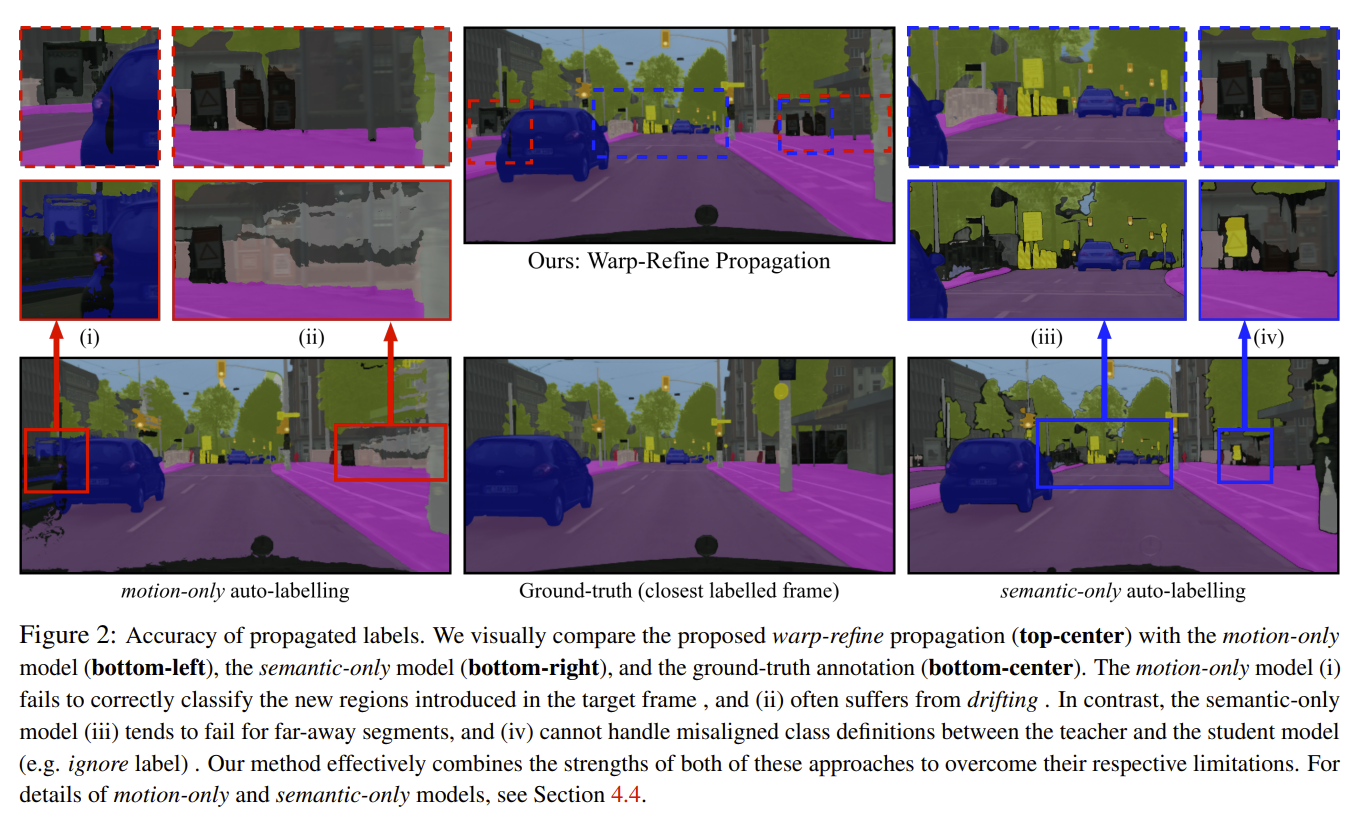

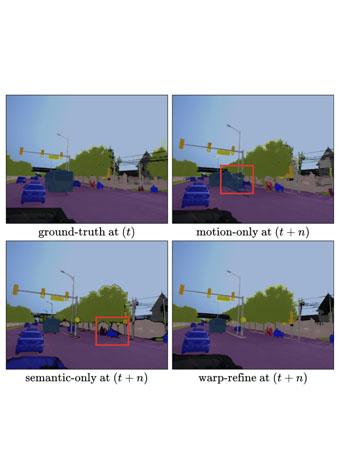

Deep learning models for semantic segmentation rely on expensive, large-scale, manually annotated datasets. Labelling is a tedious process that can take hours per image. Automatically annotating video sequences by propagating sparsely labeled frames through time is a more scalable alternative. In this work, we propose a novel label propagation method, termed Warp-Refine Propagation, that combines semantic cues with geometric cues to efficiently auto-label videos. Our method learns to refine geometrically-warped labels and infuse them with learned semantic priors in a semi-supervised setting by leveraging cycle consistency across time. We quantitatively show that our method improves label propagation by a noteworthy margin of 13.1 mIoU on the ApolloScape dataset. Furthermore, by training with the auto-labelled frames, we achieve competitive results on three semantic-segmentation benchmarks, improving the state of the art by large margins of 1.8 and 3.61 mIoU on NYU-V2 and KITTI, respectively, while matching the current best results on Cityscapes.

The ability to reliably estimate physiological signals from video is a powerful tool in low-cost, pre-clinical health monitoring. In this work we propose a new approach to remote photoplethysmography (rPPG) – the measurement of blood volume changes from observations of a person's face or skin. Similar to current state-of-the-art methods for rPPG, we apply neural networks to learn deep representations with invariance to nuisance image variation. In contrast to such methods, we employ a fully self-supervised training approach, which has no reliance on expensive ground truth physiological training data. Our proposed method uses contrastive learning with a weak prior over the frequency and temporal smoothness of the target signal of interest. We evaluate our approach on four rPPG datasets, showing that comparable or better results can be achieved compared to recent supervised deep learning methods but without using any annotation. In addition, we incorporate a learned saliency resampling module into both our unsupervised approach and supervised baseline. We show that by allowing the model to learn where to sample the input image, we can reduce the need for hand-engineered features while providing some interpretability into the model's behavior and possible failure modes. We release code for our complete training and evaluation pipeline to encourage reproducible progress in this exciting new direction. In addition, we used our proposed approach as the basis of our winning entry to the ICCV 2021 Vision 4 Vitals Workshop Challenge.

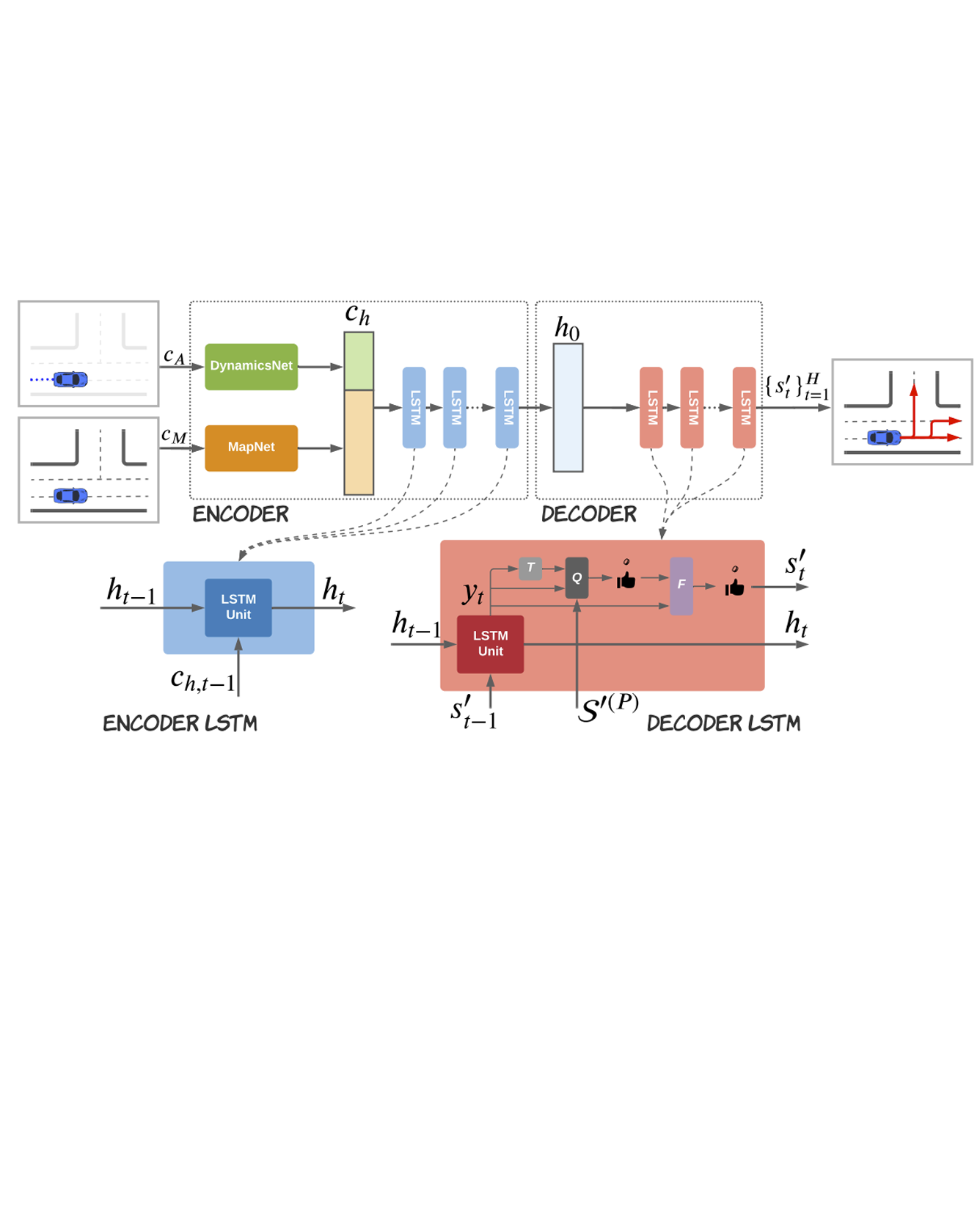

Modeling multi-modal high-level intent is important for ensuring diversity in trajectory prediction. Existing approaches explore the discrete nature of human intent before predicting continuous trajectories, to improve accuracy and support explainability. However, these approaches often assume the intent to remain fixed over the prediction horizon, which is problematic in practice, especially over longer horizons. To overcome this limitation, we introduce HYPER, a general and expressive hybrid prediction framework that models evolving human intent. By modeling traffic agents as a hybrid discrete-continuous system, our approach is capable of predicting discrete intent changes over time. We learn the probabilistic hybrid model via a maximum likelihood estimation problem and leverage neural proposal distributions to sample adaptively from the exponentially growing discrete space. The overall approach affords a better trade-off between accuracy and coverage. We train and validate our model on the Argoverse dataset, and demonstrate its effectiveness through comprehensive ablation studies and comparisons with state-of-the-art models.

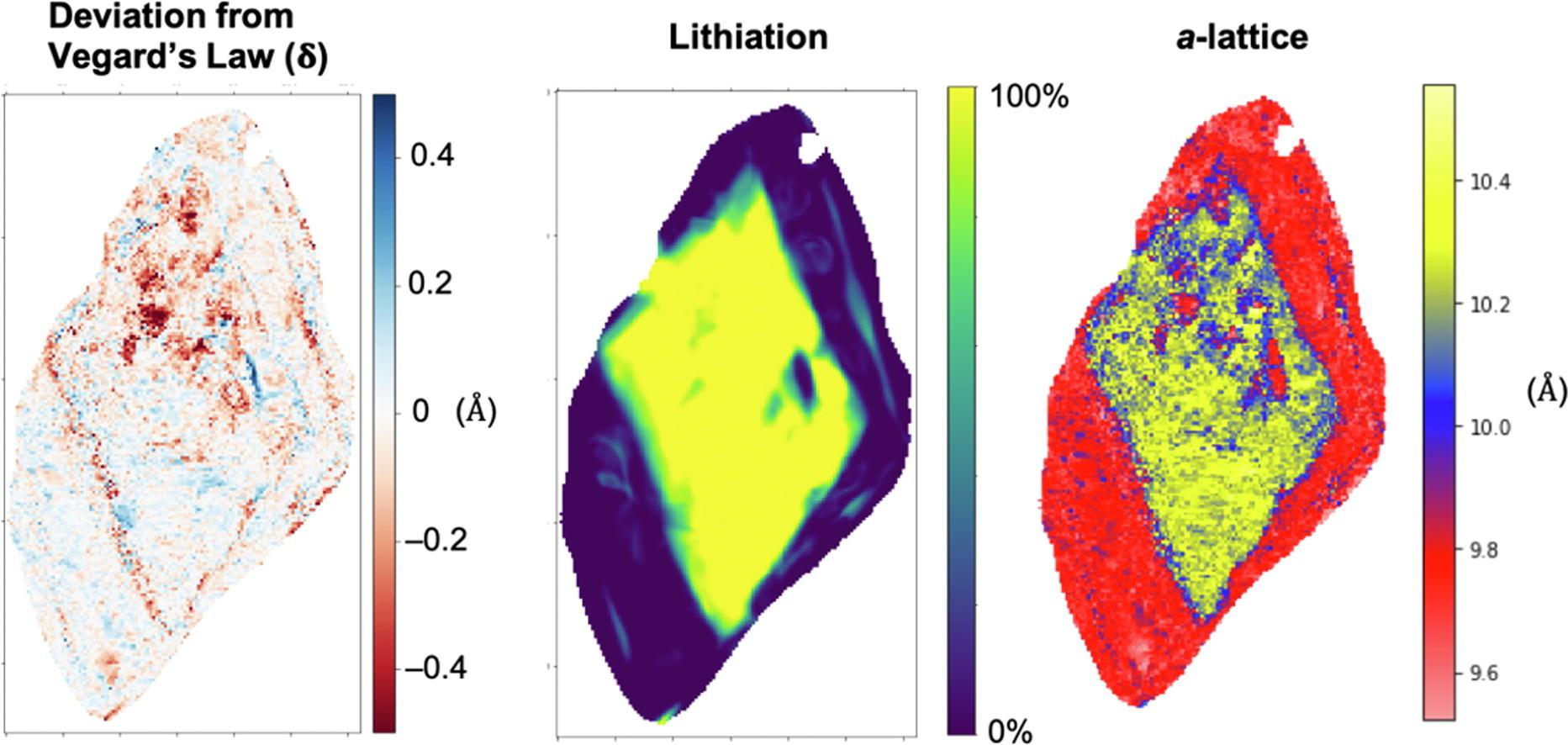

Lithium iron phosphate (LixFePO4), a cathode material used in rechargeable Li-ion batteries, phase separates upon de/lithiation under equilibrium. The interfacial structure and chemistry within these cathode materials affect Li-ion transport, and therefore battery performance. Correlative imaging of LixFePO4 was performed using four-dimensional scanning transmission electron microscopy (4D-STEM), scanning transmission X-ray microscopy (STXM), and X-ray ptychography in order to analyze the local structure and chemistry of the same particle set. Over 50,000 diffraction patterns from 10 particles provided measurements of both structure and chemistry at a nanoscale spatial resolution (16.6–49.5 nm) over wide (several micron) fields-of-view with statistical robustness. LixFePO4 particles at varying stages of delithiation were measured to examine the evolution of structure and chemistry as a function of delithiation. In lithiated and delithiated particles, local variations were observed in the degree of lithiation even while local lattice structures remained comparatively constant, and calculation of linear coefficients of chemical expansion suggests pinning of the lattice structures in these populations. Partially delithiated particles displayed broadly core–shell-like structures, but with highly variable behavior both locally and per individual particle, exhibiting distinctive intermediate regions at the interface between phases and pockets within the lithiated core that correspond to FePO4 in structure and chemistry. The results provide insight into the LixFePO4 system and into subtleties in the scope and applicability of Vegard’s law (linear lattice parameter-composition behavior) under local versus global measurements, and they demonstrate a powerful new combination of experimental and analytical modalities for bridging the crucial gap between local and statistical characterization.
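For reference, the linear lattice parameter-composition behavior that Vegard's law describes can be written as follows. This is the textbook form applied to this system as an illustration, not an equation taken from the paper: x is the Li fraction in LixFePO4, and a stands for any one lattice parameter, with the end-member values left symbolic because they are not given here.

```latex
a(x) \approx x\,a_{\mathrm{LiFePO_4}} + (1 - x)\,a_{\mathrm{FePO_4}}, \qquad 0 \le x \le 1
```

A lattice that stays comparatively constant while the local degree of lithiation varies, as described for the lithiated and delithiated particles, is a local departure from this linear relation.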

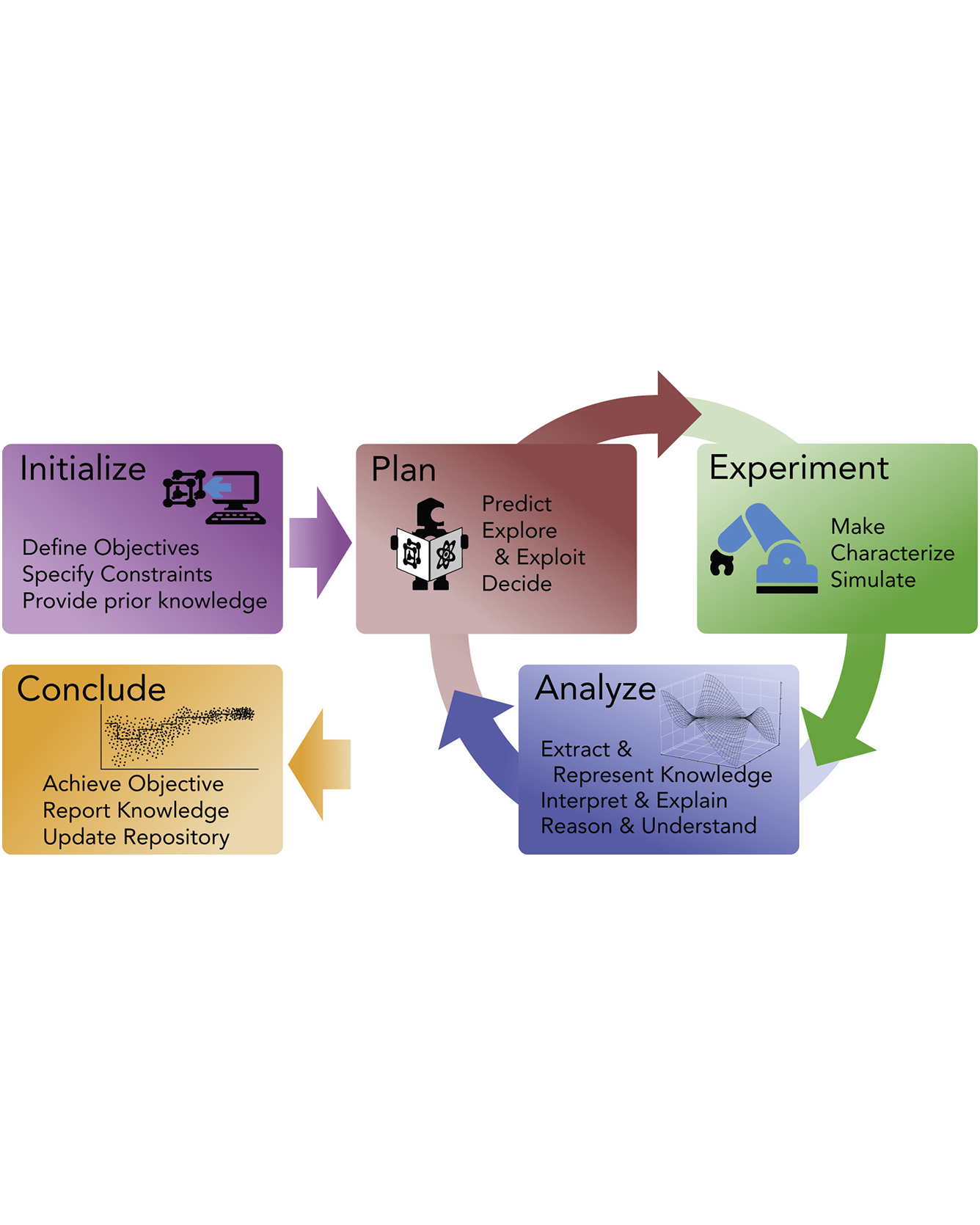

Solutions to many of the world's problems depend upon materials research and development. However, advanced materials can take decades to discover and decades more to fully deploy. Humans and robots have begun to partner to advance science and technology orders of magnitude faster than humans do today through the development and exploitation of closed-loop, autonomous experimentation systems. This review discusses the specific challenges and opportunities related to materials discovery and development that will emerge from this new paradigm. Our perspective incorporates input from stakeholders in academia, industry, government laboratories, and funding agencies. We outline the current status, barriers, and needed investments, culminating with a vision for the path forward. We intend the article to spark interest in this emerging research area and to motivate potential practitioners by illustrating early successes. We also aspire to encourage a creative reimagining of the next generation of materials science infrastructure. To this end, we frame future investments in materials science and technology, hardware and software infrastructure, artificial intelligence and autonomy methods, and critical workforce development for autonomous research.

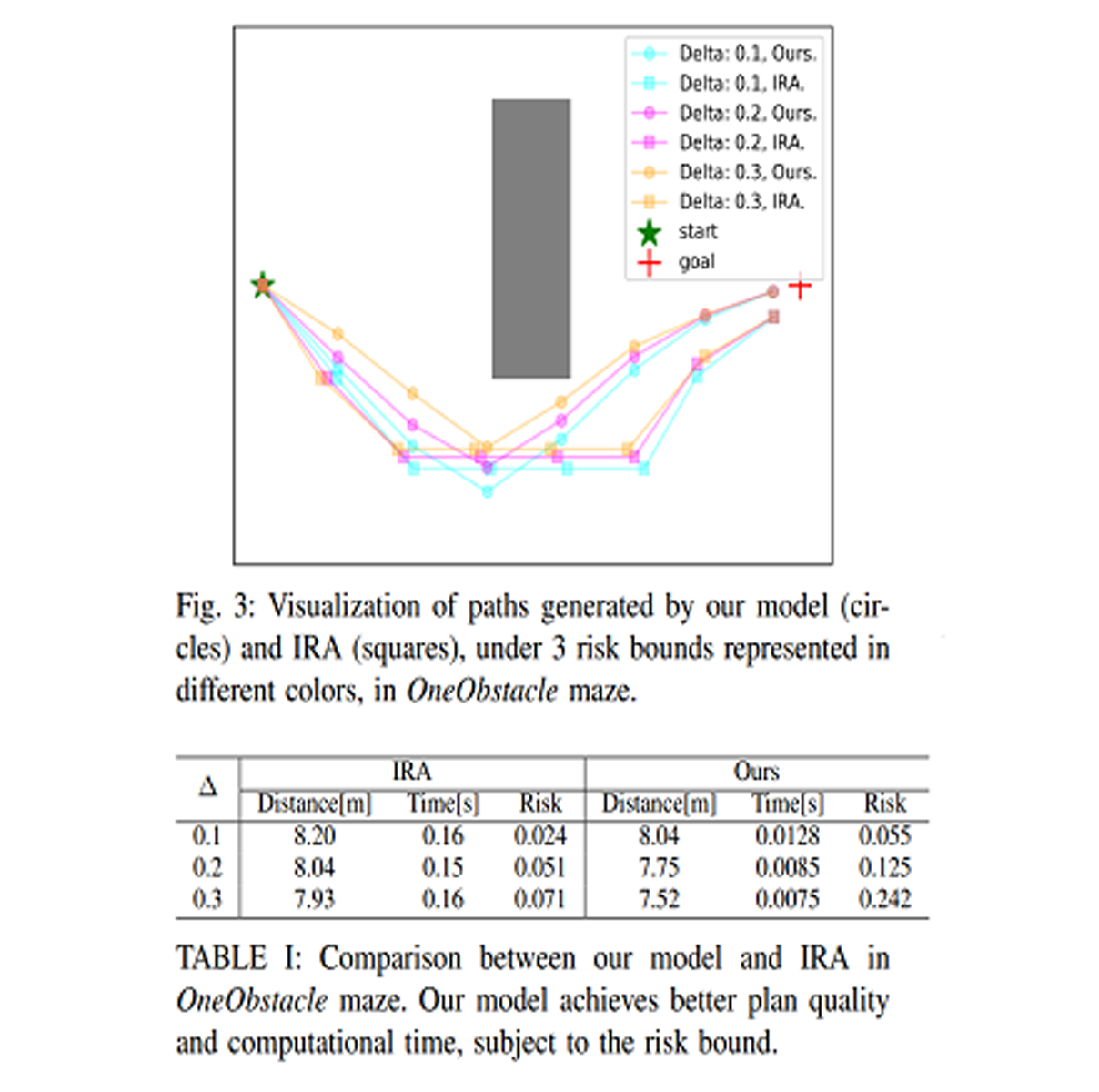

Risk-bounded motion planning is an important yet difficult problem for safety-critical tasks. While existing mathematical programming methods offer theoretical guarantees in the context of constrained Markov decision processes, they either lack scalability in solving larger problems or produce conservative plans. Recent advances in deep reinforcement learning improve scalability by learning policy networks as function approximators. In this paper, we propose an extension of the soft actor-critic model that estimates the execution risk of a plan through a risk critic and produces risk-bounded policies efficiently by adding an extra risk term to the loss function of the policy network. We define the execution risk in an accurate form, as opposed to approximating it through a summation of immediate risks at each time step, which leads to conservative plans. Our proposed model is conditioned on a continuous spectrum of risk bounds, allowing the user to adjust the risk-averse level of the agent on the fly. Through a set of experiments, we show the advantage of our model in terms of both computational time and plan quality, compared to a state-of-the-art mathematical programming baseline, and validate its performance in more complicated scenarios, including nonlinear dynamics and larger state spaces.
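To see why summing immediate risks is conservative, the sketch below compares a product-form execution risk with the summed approximation. It assumes each step has a conditional failure probability given that the plan has survived so far; this is one common way to define execution risk and may differ in detail from the definition used in the paper. The function names are hypothetical.

```python
def execution_risk(step_risks):
    """Probability that a plan fails at some step.

    step_risks[t] is assumed to be the probability of failing at step t,
    conditioned on having survived all earlier steps.
    """
    survival = 1.0
    for risk in step_risks:
        survival *= 1.0 - risk
    return 1.0 - survival


def summed_risk(step_risks):
    """Union-bound style approximation: add up the immediate risks, capped at 1."""
    return min(1.0, sum(step_risks))


# Five steps, each with an assumed 10% conditional failure probability.
risks = [0.1] * 5
exact = execution_risk(risks)
approx = summed_risk(risks)
print(exact, approx)
```

Because the summed value never falls below the product-form value, a planner that enforces a risk bound on the sum rejects some plans whose actual execution risk is within the bound.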

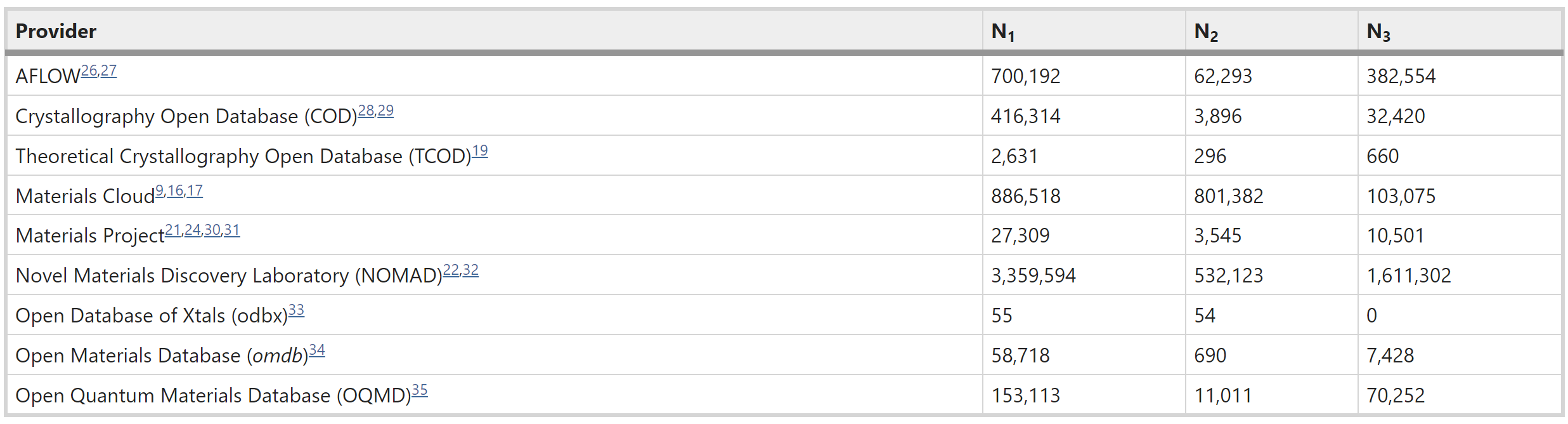

The Open Databases Integration for Materials Design (OPTIMADE) consortium has designed a universal application programming interface (API) to make materials databases accessible and interoperable. We outline the first stable release of the specification, v1.0, which is already supported by many leading databases and several software packages. We illustrate the advantages of the OPTIMADE API through worked examples on each of the public materials databases that support the full API specification.
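As a minimal sketch of what a query against an OPTIMADE v1 `structures` endpoint can look like, the code below only builds the request URL and makes no network call. The base URL `https://example.org/optimade` is a placeholder rather than a real provider, and the helper name `structures_query_url` is hypothetical; the `filter` and `page_limit` query parameters and the `elements HAS ALL` / `nelements` filter syntax follow the OPTIMADE specification.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def structures_query_url(base_url, elements, nelements=None, page_limit=10):
    """Build a URL for the /v1/structures endpoint of an OPTIMADE provider.

    base_url is whatever prefix the provider serves the API under
    (a placeholder is used in the example below).
    """
    quoted = ",".join(f'"{element}"' for element in elements)
    filter_expr = f"elements HAS ALL {quoted}"
    if nelements is not None:
        filter_expr += f" AND nelements={nelements}"
    query = urlencode({"filter": filter_expr, "page_limit": page_limit})
    return f"{base_url.rstrip('/')}/v1/structures?{query}"


# Ask for binary compounds that contain both Si and O, five entries per page.
url = structures_query_url(
    "https://example.org/optimade", ["Si", "O"], nelements=2, page_limit=5
)
parts = urlsplit(url)
params = parse_qs(parts.query)
print(url)
print(params["filter"][0])
```

Because the filter grammar is shared across providers, the same filter string should work unchanged against any database that implements the specification; only the base URL differs.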

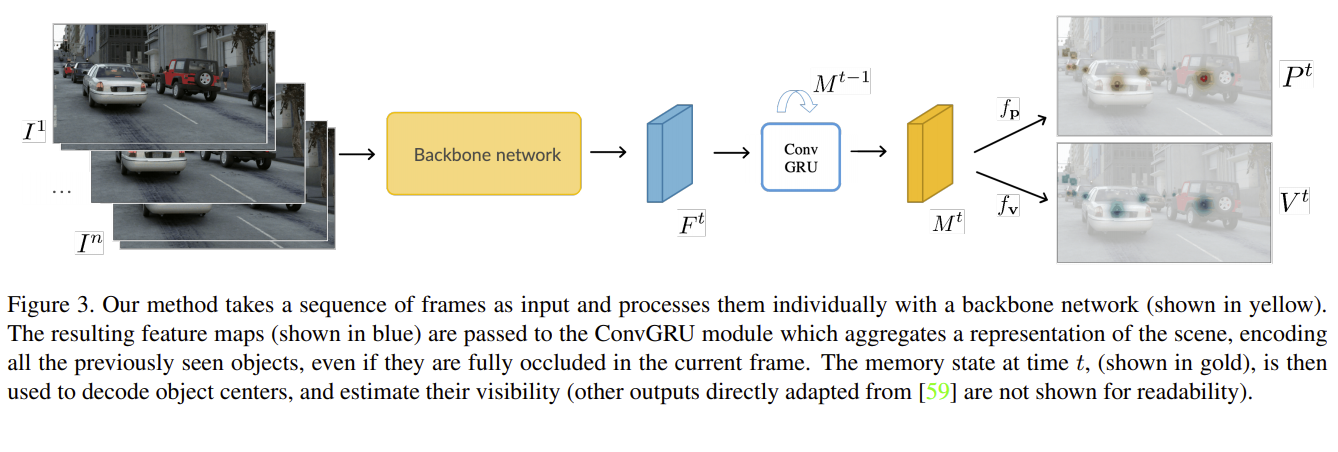

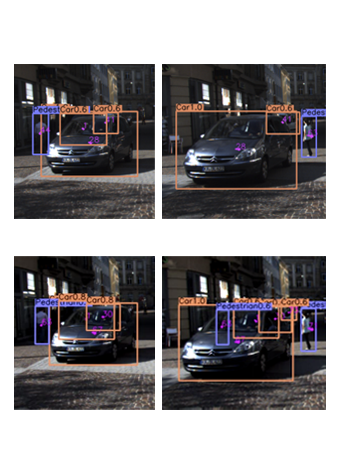

Tracking by detection, the dominant approach for online multi-object tracking, alternates between localization and re-identification steps. As a result, it strongly depends on the quality of instantaneous observations, often failing when objects are not fully visible. In contrast, tracking in humans is underpinned by the notion of object permanence: once an object is recognized, we are aware of its physical existence and can approximately localize it even under full occlusions. In this work, we introduce an end-to-end trainable approach for joint object detection and tracking that is capable of such reasoning. We build on top of the recent CenterTrack architecture, which takes pairs of frames as input, and extend it to videos of arbitrary length. To this end, we augment the model with a spatio-temporal, recurrent memory module, allowing it to reason about object locations and identities in the current frame using all the previous history. It is, however, not obvious how to train such an approach. We study this question on a new, large-scale, synthetic dataset for multi-object tracking, which provides ground truth annotations for invisible objects, and propose several approaches for supervising tracking behind occlusions. Our model, trained jointly on synthetic and real data, outperforms the state of the art on the KITTI and MOT17 datasets thanks to its robustness to occlusions.