Featured Publications

All Publications

TRI Authors: W. Kehl, A. Bhargava, A. Gaidon

All Authors: S. Zakharov, W. Kehl, A. Bhargava, A. Gaidon

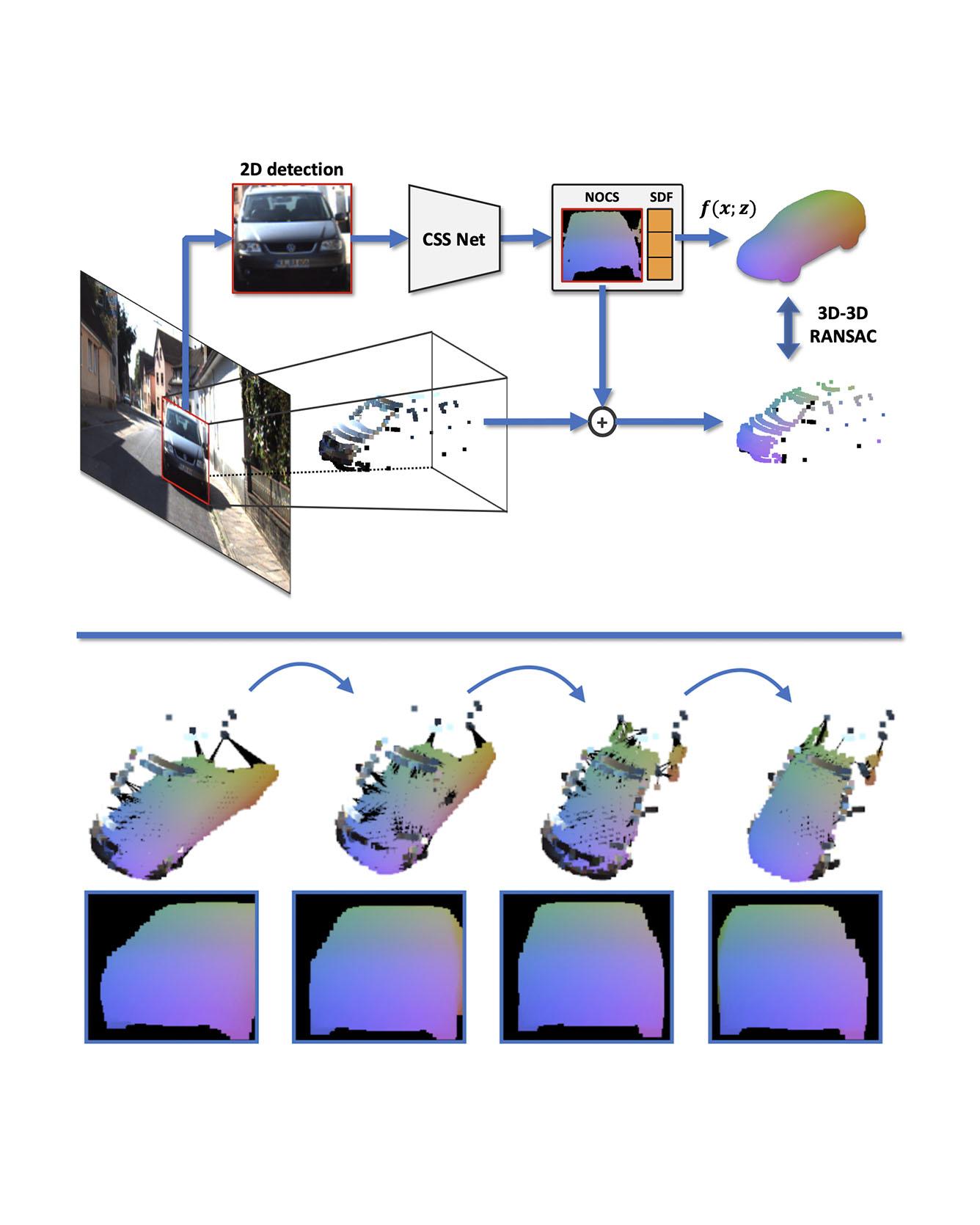

We present an automatic annotation pipeline to recover 9D cuboids and 3D shapes from pre-trained off-the-shelf 2D detectors and sparse LIDAR data. Our autolabeling method solves an ill-posed inverse problem by considering learned shape priors and optimizing geometric and physical parameters. To address this challenging problem, we apply a novel differentiable shape renderer to signed distance fields (SDF), leveraged together with normalized object coordinate spaces (NOCS). Initially trained on synthetic data to predict shape and coordinates, our method uses these predictions for projective and geometric alignment over real samples. Moreover, we also propose a curriculum learning strategy, iteratively retraining on samples of increasing difficulty in subsequent self-improving annotation rounds. Our experiments on the KITTI3D dataset show that we can recover a substantial amount of accurate cuboids, and that these autolabels can be used to train 3D vehicle detectors with state-of-the-art results.
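A "9D cuboid" is commonly read as 3 degrees of freedom each for position, size, and orientation. The snippet below is a minimal sketch assuming that reading, with orientation given as yaw/pitch/roll Euler angles composed as Rz·Ry·Rx; the parameter order and the helper names `rotation_matrix` and `cuboid_corners` are hypothetical and not taken from the paper. It simply turns such a parameter vector into the eight box corners that an autolabel would describe.

```python
import math
from itertools import product


def rotation_matrix(yaw, pitch, roll):
    """Return R = Rz(yaw) @ Ry(pitch) @ Rx(roll) as a 3x3 nested list (assumed convention)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def cuboid_corners(params):
    """Map a hypothetical 9D cuboid (cx, cy, cz, length, width, height, yaw, pitch, roll)
    to its 8 corners in world coordinates."""
    cx, cy, cz, length, width, height, yaw, pitch, roll = params
    R = rotation_matrix(yaw, pitch, roll)
    corners = []
    for dx, dy, dz in product((-0.5, 0.5), repeat=3):
        local = (dx * length, dy * width, dz * height)  # corner in the box frame
        world = [sum(R[i][j] * local[j] for j in range(3)) for i in range(3)]
        corners.append((cx + world[0], cy + world[1], cz + world[2]))
    return corners
```

For example, `cuboid_corners((1.0, 2.0, 0.0, 4.0, 2.0, 1.0, math.pi / 2, 0.0, 0.0))` describes a 4 m by 2 m box turned 90 degrees about the vertical axis, so its long side ends up along the y axis.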

Citation: Zakharov, Sergey, Wadim Kehl, Arjun Bhargava, and Adrien Gaidon. "Autolabeling 3D Objects with Differentiable Rendering of SDF Shape Priors." CVPR, 2020.

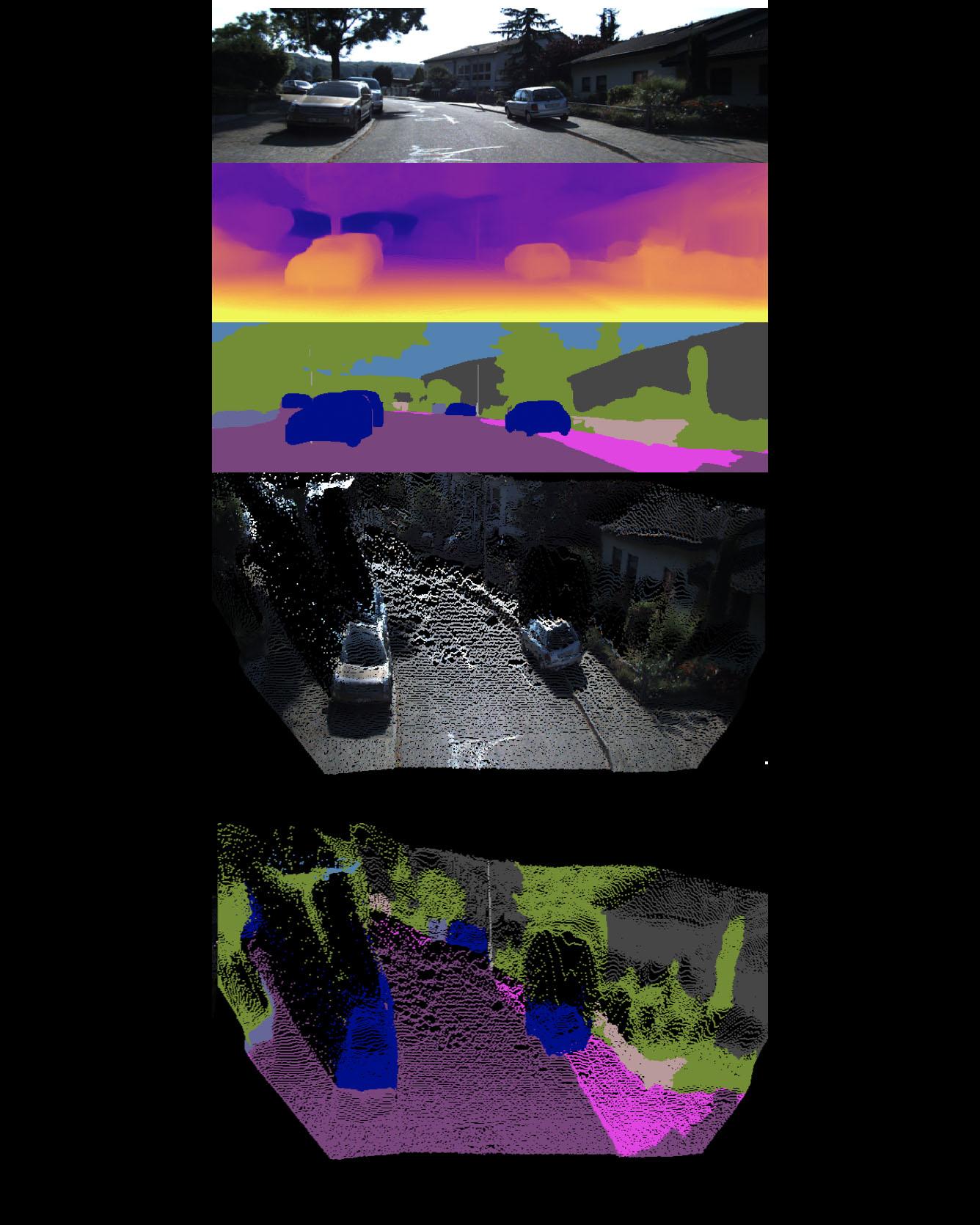

TRI Authors: V. Guizilini, R. Ambrus, S. Pillai, A. Raventos, A. Gaidon

All Authors: V. Guizilini, R. Ambrus, S. Pillai, A. Raventos, A. Gaidon

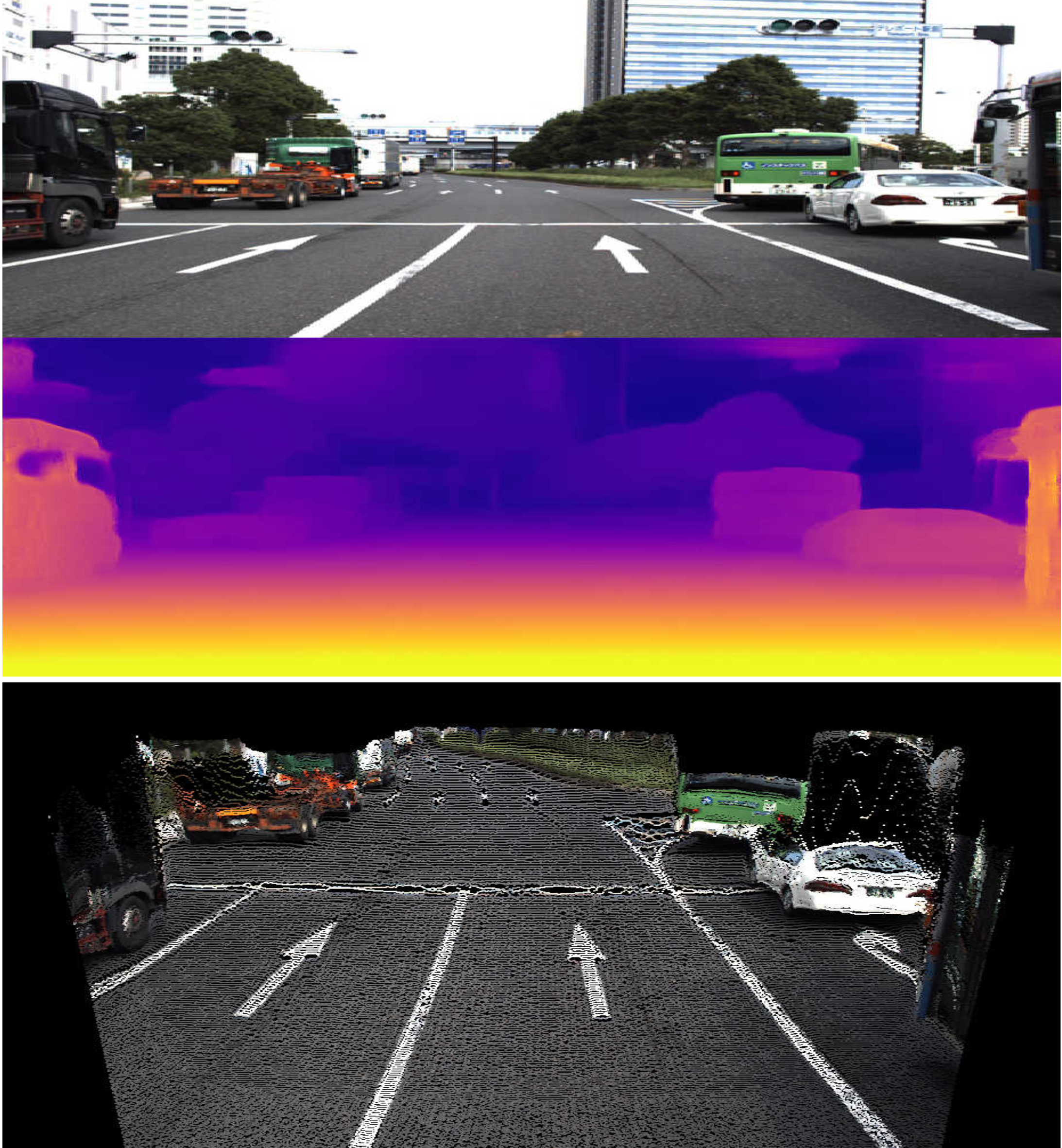

Although cameras are ubiquitous, robotic platforms typically rely on active sensors like LiDAR for direct 3D perception. In this work, we propose a novel self-supervised monocular depth estimation method combining geometry with a new deep network, PackNet, learned only from unlabeled monocular videos. Our architecture leverages novel symmetrical packing and unpacking blocks to jointly learn to compress and decompress detail-preserving representations using 3D convolutions. Although self-supervised, our method outperforms other self-, semi-, and fully supervised methods on the KITTI benchmark. The 3D inductive bias in PackNet enables it to scale with input resolution and number of parameters without overfitting, generalizing better on out-of-domain data such as the nuScenes dataset. Furthermore, it does not require large-scale supervised pretraining on ImageNet and can run in real time. Finally, we release DDAD (Dense Depth for Automated Driving), a new urban driving dataset with more challenging and accurate depth evaluation, thanks to longer-range and denser ground-truth depth generated from high-density LiDARs mounted on a fleet of self-driving cars operating worldwide.
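Packing spatial detail into the feature dimension without discarding it requires an invertible reshape. A standard choice, assumed here, is space-to-depth, which folds each r×r block of pixels into r² channels; depth-to-space undoes it exactly. The pure-Python sketch below shows only that reshape on a hypothetical `[channels][height][width]` nested-list layout. It leaves out the learned 3D convolutions that PackNet's blocks apply, so it is not the paper's implementation.

```python
def space_to_depth(x, r):
    """Fold each r x r spatial block into channels: [C][H][W] -> [C*r*r][H/r][W/r]."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    assert H % r == 0 and W % r == 0, "spatial dims must be divisible by r"
    out = []
    for c in range(C):
        for dy in range(r):
            for dx in range(r):
                # One output channel per (input channel, offset inside the block)
                out.append([[x[c][i * r + dy][j * r + dx] for j in range(W // r)]
                            for i in range(H // r)])
    return out


def depth_to_space(y, r):
    """Inverse of space_to_depth: [C*r*r][H][W] -> [C][H*r][W*r]."""
    Cr, H, W = len(y), len(y[0]), len(y[0][0])
    C = Cr // (r * r)
    x = [[[0] * (W * r) for _ in range(H * r)] for _ in range(C)]
    for k in range(Cr):
        c, rem = divmod(k, r * r)  # recover input channel and block offset
        dy, dx = divmod(rem, r)
        for i in range(H):
            for j in range(W):
                x[c][i * r + dy][j * r + dx] = y[k][i][j]
    return x
```

With a single-channel 4×4 input and `r = 2`, `space_to_depth` returns four 2×2 channels, and `depth_to_space` restores the original values exactly. This lossless behaviour is what makes the reshape useful for preserving detail.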

Citation: Guizilini, Vitor, Rares Ambrus, Sudeep Pillai, Allan Raventos, and Adrien Gaidon. "PackNet-SfM: 3D Packing for Self-Supervised Monocular Depth Estimation." CVPR, 2020.

Hydrogen peroxide is a valuable chemical oxidant with a wide range of applications in a variety of industrial processes, especially water sanitization. Electrochemical synthesis of hydrogen peroxide (H2O2) through a two-electron oxygen reduction reaction (2e-ORR) or a two-electron water oxidation reaction (2e-WOR) has emerged as an appealing process for on-site production of this valuable oxidant. On-site produced H2O2 can be used for wastewater treatment in remote locations or in any application where H2O2 is needed as an oxidizing agent. This Review surveys theoretical efforts to understand the challenges in catalysis for the electrochemical synthesis of H2O2 and to provide design principles for more efficient catalyst materials.
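Both routes move two electrons per H2O2 molecule: O2 + 2H+ + 2e- → H2O2 for 2e-ORR (acidic form), and 2H2O → H2O2 + 2H+ + 2e- for 2e-WOR. Faraday's law therefore caps how much H2O2 a given charge can produce, at n = ηQ/(zF) with z = 2. The helper below is an illustrative sketch of that arithmetic. The function names and the faradaic-efficiency parameter `faradaic_efficiency` (η) are our own choices and do not come from the Review.

```python
FARADAY = 96485.33212        # C/mol, Faraday constant (exact in the 2019 SI)
H2O2_MOLAR_MASS = 34.0147    # g/mol


def h2o2_moles(current_a, seconds, faradaic_efficiency=1.0, electrons=2):
    """Moles of H2O2 from a constant current, via Faraday's law n = eta * Q / (z * F)."""
    charge = current_a * seconds  # total charge passed, in coulombs
    return faradaic_efficiency * charge / (electrons * FARADAY)


def h2o2_grams(current_a, seconds, faradaic_efficiency=1.0):
    """Mass of H2O2 (g) produced under the same assumptions."""
    return h2o2_moles(current_a, seconds, faradaic_efficiency) * H2O2_MOLAR_MASS
```

For instance, running 1 A for one hour at 100% faradaic efficiency gives about 0.0187 mol, or roughly 0.63 g of H2O2. Any selectivity loss to the four-electron pathway lowers η.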

TRI Authors: KH Lee, A. Gaidon

All Authors: B. Liu, E. Adeli, Z. Cao, KH Lee, A. Shenoi, A. Gaidon, JC Niebles

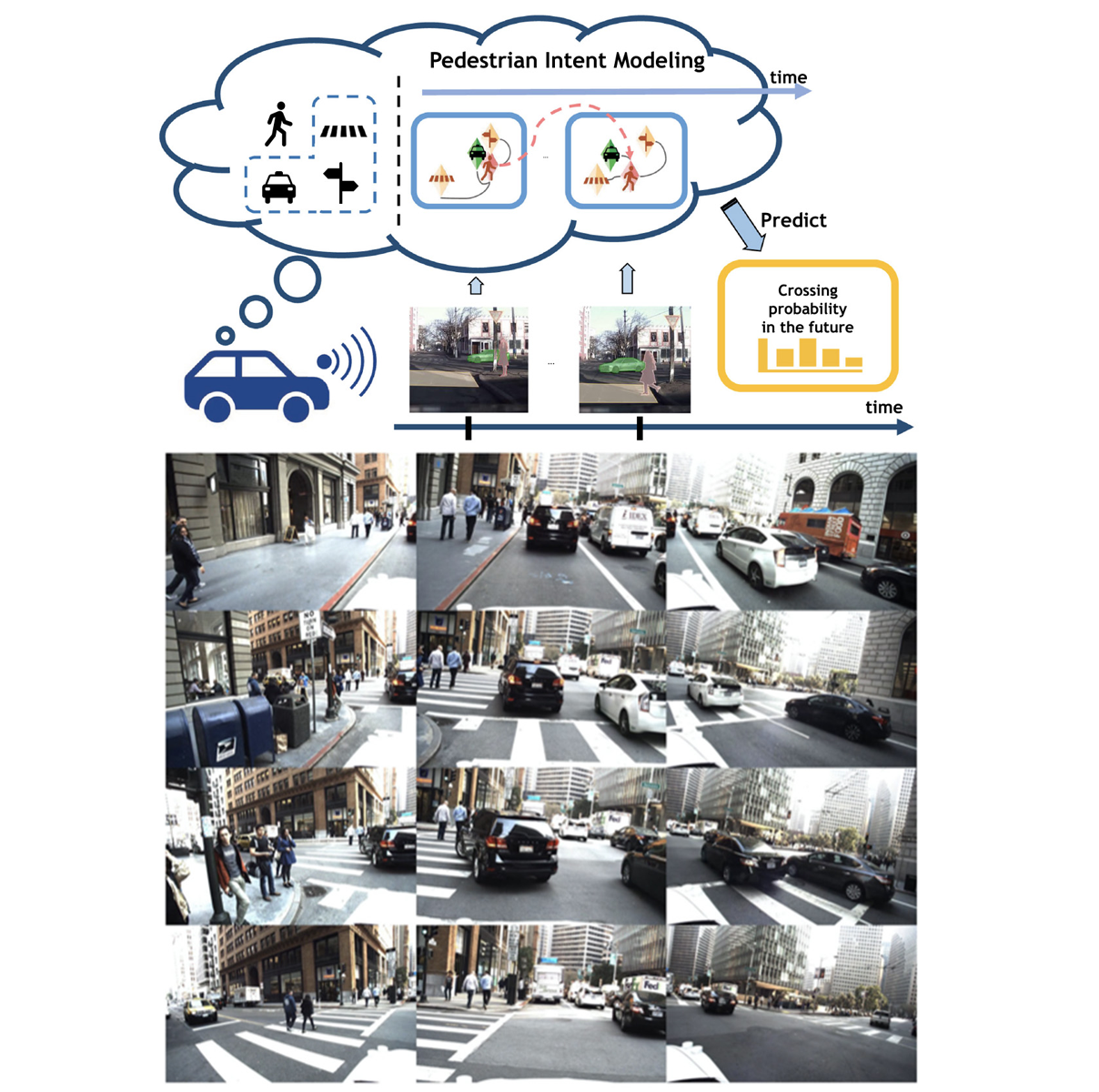

Reasoning over visual data is a desirable capability for robotics and vision-based applications. Such reasoning enables forecasting the next events or actions in videos. In recent years, various models based on convolution operations have been developed for prediction or forecasting, but they lack the ability to reason over spatiotemporal data and infer the relationships between different objects in the scene. In this letter, we present a framework based on graph convolution to uncover the spatiotemporal relationships in the scene for reasoning about pedestrian intent. A scene graph is built on top of segmented object instances within and across video frames. Pedestrian intent, defined as the future action of crossing or not crossing the street, is a crucial piece of information for autonomous vehicles to navigate safely and more smoothly. We approach the problem of intent prediction from two different perspectives and anticipate the intention-to-cross within both pedestrian-centric and location-centric scenarios. In addition, we introduce a new dataset designed specifically for autonomous-driving scenarios in areas with dense pedestrian populations: the Stanford-TRI Intent Prediction (STIP) dataset. Our experiments on STIP and another benchmark dataset show that our graph modeling framework is able to predict the pedestrians' intention-to-cross with an accuracy of 79.10% on STIP and 79.28% on the Joint Attention for Autonomous Driving (JAAD) dataset, up to one second before the actual crossing happens. These results outperform the baselines and previous work.
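Graph convolution passes information between connected nodes of the scene graph, for example between a pedestrian and nearby objects. The sketch below shows one generic layer built on the widely used symmetric-normalized rule H' = ReLU(D^-1/2 (A + I) D^-1/2 H W). That rule is our assumption, and the paper's exact layer, node features, and edge construction may differ. `gcn_layer` is a hypothetical name, and the matrices are plain nested lists.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_layer(adj, features, weights):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 X W).

    adj:      n x n symmetric adjacency matrix of the scene graph
    features: n x f node features (e.g. per-instance embeddings)
    weights:  f x g learned projection
    """
    n = len(adj)
    # Add self-loops so every node keeps part of its own features
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization keeps feature scales comparable across node degrees
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(norm, features), weights)
    return [[max(0.0, v) for v in row] for row in out]
```

Stacking such layers over edges that link instances within a frame and across frames lets each node's representation accumulate spatiotemporal context. A classifier on the pedestrian node could then output crossing or not crossing.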

Citation: Liu, Bingbin, Ehsan Adeli, Zhangjie Cao, Kuan-Hui Lee, Abhijeet Shenoi, Adrien Gaidon, and Juan Carlos Niebles. "Spatiotemporal Relationship Reasoning for Pedestrian Intent Prediction." IEEE Robotics and Automation Letters 5, no. 2 (2020): 3485-3492.

TRI Authors: Jiexiong Tang (intern), Hanme Kim, Vitor Guizilini, Sudeep Pillai, Rares Ambrus

All Authors: Jiexiong Tang, Hanme Kim, Vitor Guizilini, Sudeep Pillai, Rares Ambrus

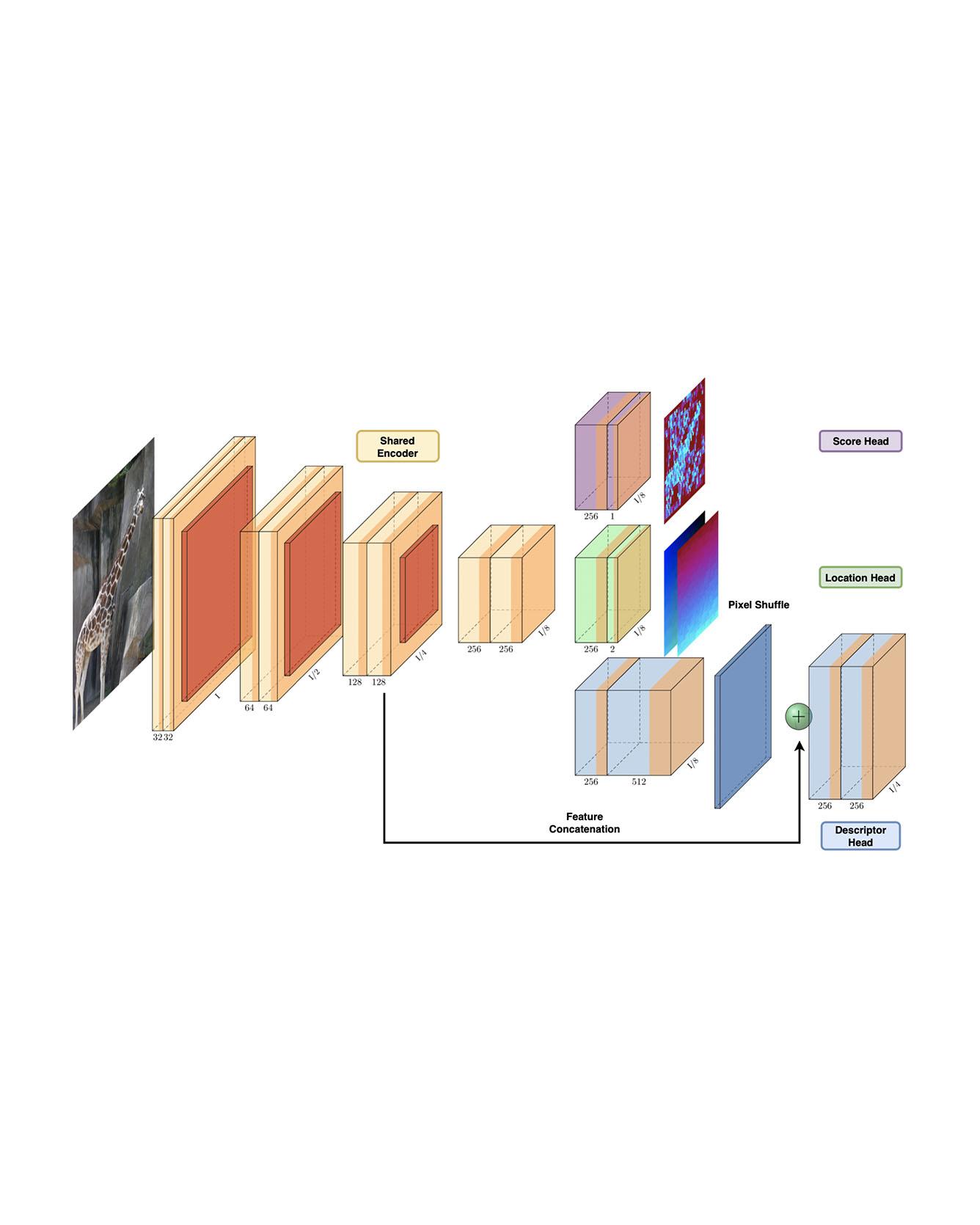

Identifying salient points in images is a crucial component of visual odometry, Structure-from-Motion, and SLAM algorithms. Recently, several learned keypoint methods have demonstrated compelling performance on challenging benchmarks. However, generating consistent and accurate training data for interest-point detection in natural images remains challenging, especially for human annotators. We introduce IO-Net (i.e., InlierOutlierNet), a novel proxy task for the self-supervision of keypoint detection, description, and matching. By making the sampling of inlier-outlier sets from point-pair correspondences fully differentiable within the keypoint learning framework, we show that we are able to simultaneously self-supervise keypoint description and improve keypoint matching. Second, we introduce KeyPointNet, a keypoint-network architecture that is especially amenable to robust keypoint detection and description. We design the network to allow local keypoint aggregation to avoid artifacts due to the spatial discretizations commonly used for this task, and we improve fine-grained keypoint descriptor performance by taking advantage of efficient sub-pixel convolutions to upsample the descriptor feature maps to a higher operating resolution. Through extensive experiments and ablative analysis, we show that the proposed self-supervised keypoint learning method greatly improves the quality of feature matching and homography estimation on challenging benchmarks over the state of the art.
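In a common self-supervised setup, assumed here, a training pair is made by warping an image with a known homography. A putative match then counts as an inlier when the source keypoint, warped by that homography, lands close to its matched target keypoint. The sketch below shows only this hard-threshold labeling. The paper instead makes inlier-outlier sampling differentiable, so this is an illustration of what "inlier" means rather than IO-Net itself. `max_error_px` and the helper names are hypothetical.

```python
def apply_homography(H, x, y):
    """Map pixel (x, y) through a 3x3 homography H given as nested lists."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w  # back from homogeneous coordinates


def label_matches(H, matches, max_error_px=3.0):
    """Label each ((xs, ys), (xt, yt)) match as inlier (True) or outlier (False)."""
    labels = []
    for (xs, ys), (xt, yt) in matches:
        px, py = apply_homography(H, xs, ys)
        error = ((px - xt) ** 2 + (py - yt) ** 2) ** 0.5  # reprojection error in pixels
        labels.append(error <= max_error_px)
    return labels
```

With a pure translation `H = [[1, 0, 10], [0, 1, 5], [0, 0, 1]]`, the match `((0, 0), (10, 5))` is labeled an inlier and `((2, 2), (30, 30))` an outlier.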

Citation: Tang, Jiexiong, Hanme Kim, Vitor Guizilini, Sudeep Pillai, and Rares Ambrus. "Neural Outlier Rejection for Self-Supervised Keypoint Learning." ICLR, 2020.

TRI Authors: Vitor Guizilini, Jie Li, Rares Ambrus, Adrien Gaidon

All Authors: Vitor Guizilini, Rui Hou, Jie Li, Rares Ambrus, Adrien Gaidon

Self-supervised learning is showing great promise for monocular depth estimation, using geometry as the only source of supervision. Depth networks are indeed capable of learning representations that relate visual appearance to 3D properties by implicitly leveraging category-level patterns. In this work we investigate how to leverage this semantic structure more directly to guide geometric representation learning, while remaining in the self-supervised regime. Instead of using semantic labels and proxy losses in a multi-task approach, we propose a new architecture leveraging fixed pretrained semantic segmentation networks to guide self-supervised representation learning via pixel-adaptive convolutions. Furthermore, we propose a two-stage training process to overcome a common semantic bias on dynamic objects via resampling. Our method improves upon the state of the art for self-supervised monocular depth prediction over all pixels, fine-grained details, and per semantic category.
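A pixel-adaptive convolution scales a spatially shared filter W by a kernel K(f_i, f_j) computed from guidance features, which in this work come from a semantic segmentation network. The single-channel sketch below assumes the Gaussian adapting kernel K = exp(-||f_i - f_j||² / 2) from the original pixel-adaptive convolution formulation, zero padding, and the cross-correlation convention used by most deep-learning libraries. The paper's exact configuration may differ, and `pixel_adaptive_conv` is a hypothetical name.

```python
import math


def pixel_adaptive_conv(image, guide, weights):
    """Single-channel PAC: out[i] = sum_j K(f_i, f_j) * W[offset] * v_j, zero padding.

    image:   H x W input values v
    guide:   H x W lists of guidance features f (e.g. semantic embeddings)
    weights: k x k spatially shared filter W (k odd)
    """
    h, w, k = len(image), len(image[0]), len(weights)
    half = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(-half, half + 1):
                for dx in range(-half, half + 1):
                    yy, xx = y + dy, x + dx
                    if not (0 <= yy < h and 0 <= xx < w):
                        continue  # zero padding outside the image
                    dist2 = sum((a - b) ** 2 for a, b in zip(guide[y][x], guide[yy][xx]))
                    kernel = math.exp(-0.5 * dist2)  # Gaussian adapting kernel
                    acc += kernel * weights[dy + half][dx + half] * image[yy][xx]
            out[y][x] = acc
    return out
```

With identical guidance features everywhere, the kernel equals 1 and the layer reduces to an ordinary convolution. When neighbouring pixels have very different semantic features, their contribution vanishes, so the filter does not blur across object boundaries.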

Citation: Guizilini, Vitor, Rui Hou, Jie Li, Rares Ambrus, and Adrien Gaidon. "Semantically-Guided Representation Learning for Self-Supervised Monocular Depth." ICLR, 2020.

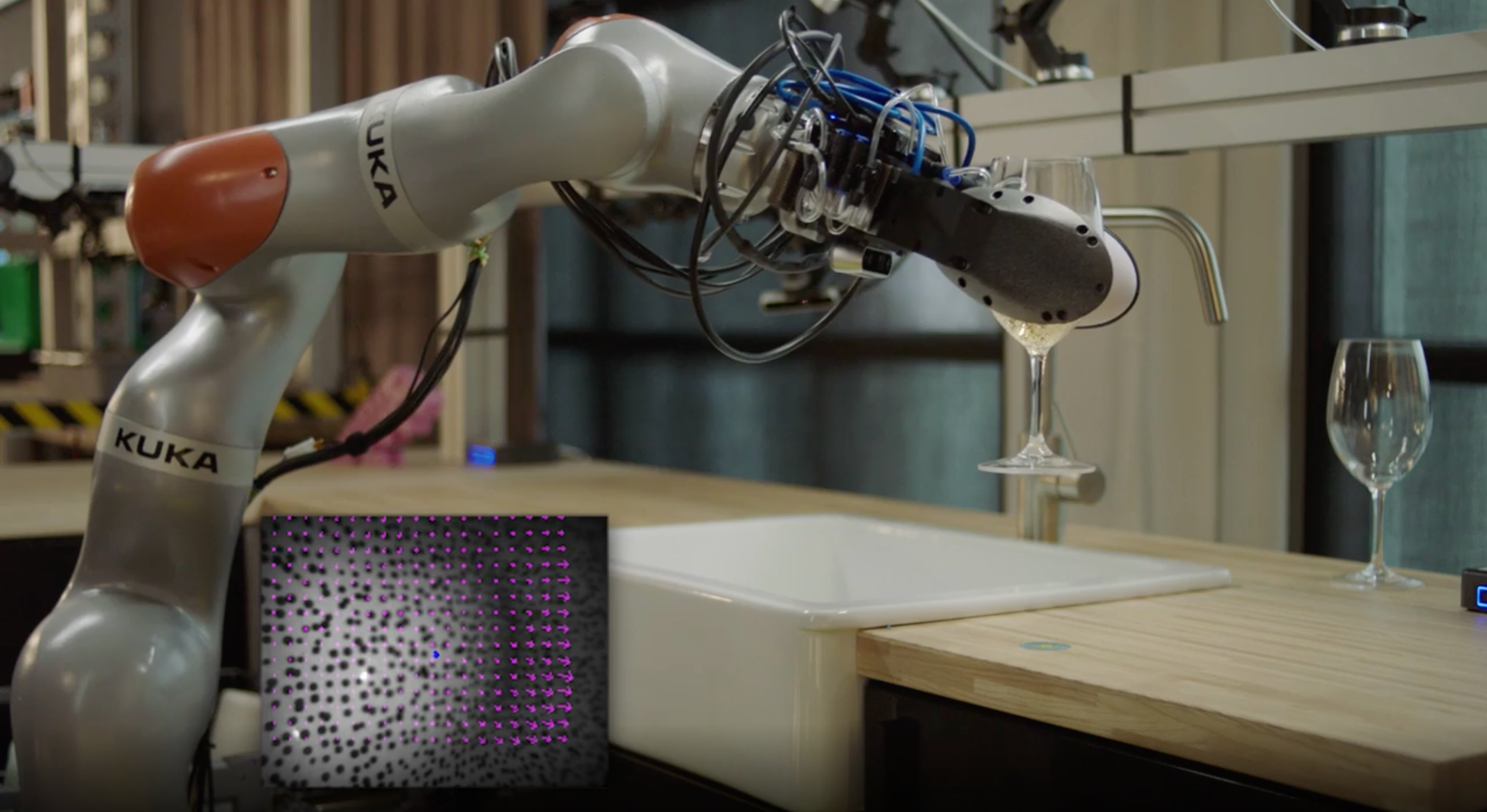

TRI Authors: Naveen Kuppuswamy*, Alex Alspach, Avinash Uttamchandani, Sam Creasey, Takuya Ikeda, Russ Tedrake

All Authors: Naveen Kuppuswamy*, Alex Alspach, Avinash Uttamchandani, Sam Creasey, Takuya Ikeda, Russ Tedrake

Citation: Kuppuswamy, Naveen, Alex Alspach, Avinash Uttamchandani, Sam Creasey, Takuya Ikeda, and Russ Tedrake. "Soft-Bubble Grippers for Robust and Perceptive Manipulation." IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020.

TRI Authors: Naveen Kuppuswamy, Alejandro Castro, Calder Phillips-Grafflin, Alex Alspach, Russ Tedrake

All Authors: Naveen Kuppuswamy, Alejandro Castro, Calder Phillips-Grafflin, Alex Alspach, Russ Tedrake

Modeling deformable contact is a well-known problem in soft robotics and is particularly challenging for compliant interfaces that permit large deformations. We present a model for the behavior of a highly deformable dense geometry sensor in its interaction with objects; the forward model predicts the elastic deformation of a mesh given the pose and geometry of a contacting rigid object. We use this model to develop a fast approximation to solve the inverse problem: estimating the contact patch when the sensor is deformed by arbitrary objects. This inverse model can be easily identified through experiments and is formulated as a sparse Quadratic Program (QP) that can be solved efficiently online. The proposed model serves as the first stage of a pose estimation pipeline for robot manipulation. We demonstrate the proposed inverse model through real-time estimation of contact patches on a contact-rich manipulation problem in which oversized fingers screw a nut onto a bolt, and as part of a complete pipeline for pose-estimation and tracking based on the Iterative Closest Point (ICP) algorithm. Our results demonstrate a path towards realizing soft robots with highly compliant surfaces that perform complex real-world manipulation tasks.
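The pose-tracking stage builds on the Iterative Closest Point algorithm. As a generic illustration only, the sketch below uses 2D points, a point-to-point error, and brute-force nearest neighbours. None of these choices come from the paper, whose pipeline works with the sensor's estimated contact patch. Each ICP iteration pairs every source point with its closest target point, then solves for the best rigid transform in closed form: the rotation angle is atan2 of the summed cross products over the summed dot products of the centered pairs. The function names are hypothetical.

```python
import math


def best_rigid_transform_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    scx = sum(x for x, _ in src) / n
    scy = sum(y for _, y in src) / n
    dcx = sum(x for x, _ in dst) / n
    dcy = sum(y for _, y in dst) / n
    dot = cross = 0.0
    for (x1, y1), (x2, y2) in zip(src, dst):
        ax, ay = x1 - scx, y1 - scy  # centered source point
        bx, by = x2 - dcx, y2 - dcy  # centered target point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # angle maximizing sum of b . (R a)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid
    tx = dcx - (c * scx - s * scy)
    ty = dcy - (s * scx + c * scy)
    return theta, tx, ty


def icp_2d(src, dst, iterations=20):
    """Point-to-point ICP: alternate closest-point matching and rigid re-fitting."""
    current = list(src)
    for _ in range(iterations):
        # Match each current point to its nearest target point (brute force)
        matched = [min(dst, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        theta, tx, ty = best_rigid_transform_2d(current, matched)
        c, s = math.cos(theta), math.sin(theta)
        current = [(c * x - s * y + tx, s * x + c * y + ty) for x, y in current]
    return current
```

ICP only converges to the right pose when the initial guess is close enough for the first nearest-neighbour matches to be mostly correct. This is one reason a good contact-patch estimate matters as the first stage of such a pipeline.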

Citation: Kuppuswamy, Naveen, Alejandro Castro, Calder Phillips-Grafflin, Alex Alspach, and Russ Tedrake. "Fast model-based contact patch and pose estimation for highly deformable dense-geometry tactile sensors." IEEE Robotics and Automation Letters (2019).

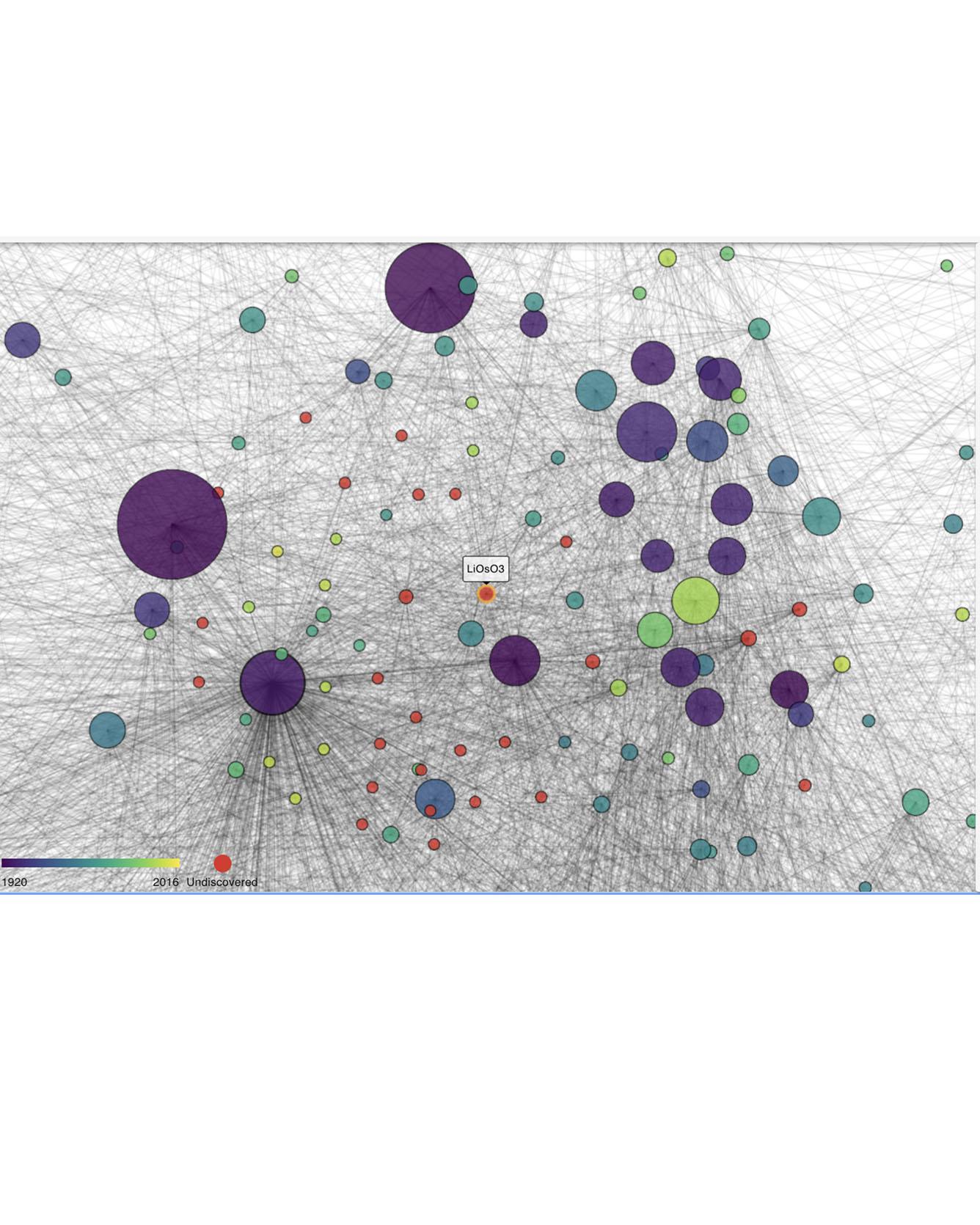

TRI Authors: Muratahan Aykol, Joseph Montoya, Jens Hummelshøj

All Authors: Roni Choudhury, Muratahan Aykol, Samuel Gratzl, Joseph Montoya, Jens Hummelshøj

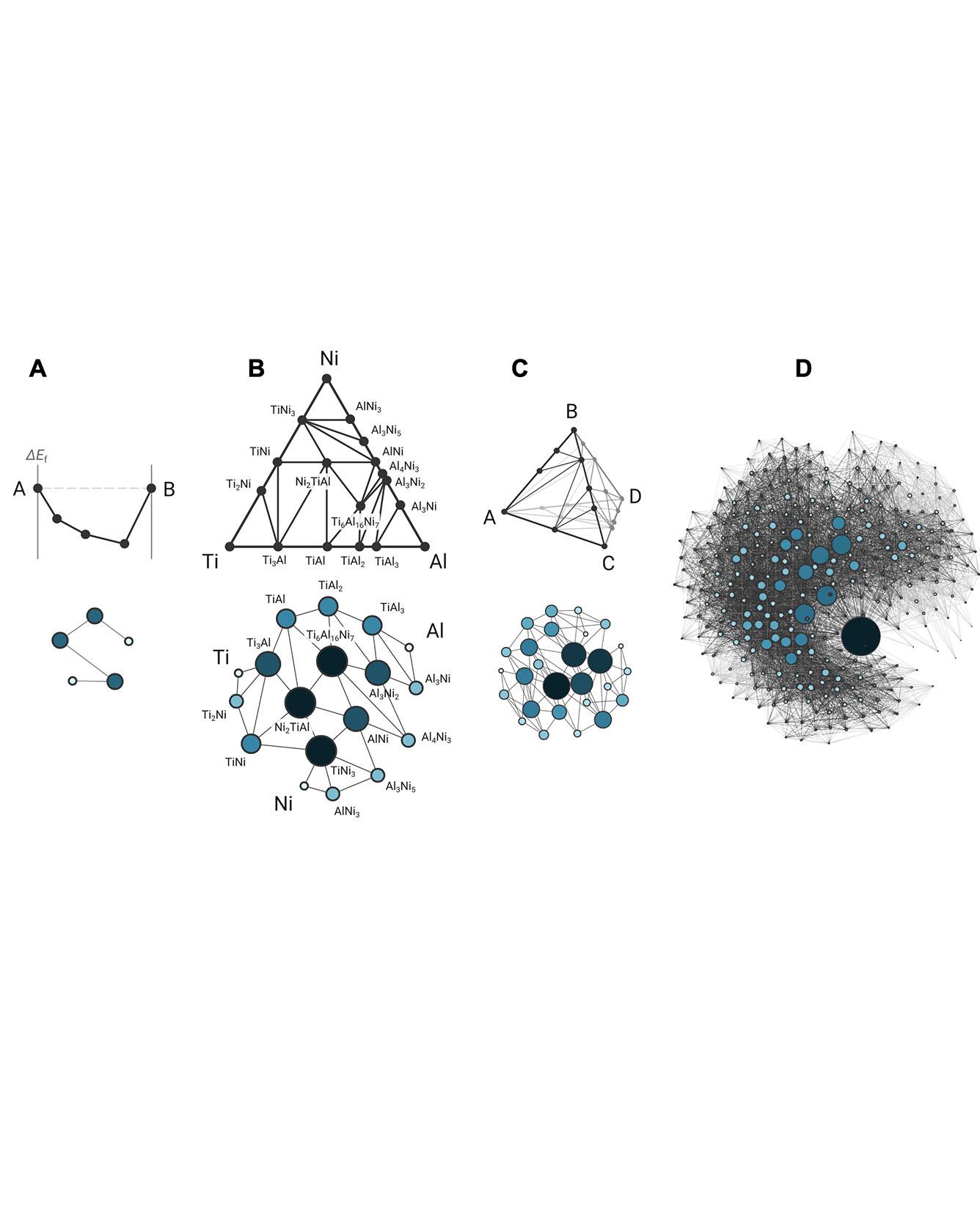

Materials science research deals primarily with understanding the relationship between the structure and properties of materials. With recent advances in computational power and automation of simulation techniques, material structure and property databases have emerged (Curtarolo et al., 2012; Jain et al., 2013; Kirklin et al., 2015), allowing a more data-driven approach to materials research. Recent studies have demonstrated that representing these databases as material networks can enable extraction of new materials knowledge (Hegde, Aykol, Kirklin, & Wolverton, 2018; Isayev et al., 2015) or help tackle challenges like predictive synthesis (Aykol, Hegde, et al., 2019) that require relational information between materials. Materials databases have become very popular because they enable their users to do rapid prototyping by searching nearly globally for figures of merit for their target application. However, both scientists and engineers have little in the way of visualization of aggregates from these databases, that is, intuitive layouts that help them understand which materials are related and how. The need for such a tool is particularly acute in materials science because properties like phase stability and crystal structure similarity are themselves functions of a material dataset, rather than of individual materials.

Citation: Choudhury, Roni, Muratahan Aykol, Samuel Gratzl, Joseph Montoya, and Jens Hummelshøj. "MaterialNet: A web-based graph explorer for materials science data." Journal of Open Source Software 5, no. 47 (2020): 2105.

TRI Authors: Muratahan Aykol*

All Authors: Vinay Hegde, Muratahan Aykol*, Scott Kirklin, Chris Wolverton*

One of the holy grails of materials science, unlocking structure-property relationships, has largely been pursued via bottom-up investigations of how the arrangement of atoms and interatomic bonding in a material determine its macroscopic behavior. Here, we consider a complementary approach, a top-down study of the organizational structure of networks of materials, based on the interaction between materials themselves. We unravel the complete “phase stability network of all inorganic materials” as a densely connected complex network of 21,000 thermodynamically stable compounds (nodes) interlinked by 41 million tie lines (edges) defining their two-phase equilibria, as computed by high-throughput density functional theory. Analyzing the topology of this network of materials has the potential to uncover previously unidentified characteristics inaccessible from traditional atoms-to-materials paradigms. Using the connectivity of nodes in the phase stability network, we derive a rational, data-driven metric for material reactivity, the “nobility index,” and quantitatively identify the noblest materials in nature.
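A little arithmetic shows what "densely connected" means here. In an undirected network the mean degree is 2E/N, so 41 million tie lines among about 21,000 compounds give each compound a tie line to roughly 3,900 others on average. That also means about 19% of all possible compound pairs are linked. The sketch below computes these quantities and per-node degree from an edge list. Degree is the kind of node connectivity the nobility index is derived from, but the paper's actual normalization is not reproduced here. The helper names and the toy compounds "A" to "D" are hypothetical.

```python
from collections import Counter


def degrees(edges):
    """Count tie lines per compound in an undirected edge list of (a, b) pairs."""
    deg = Counter()
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return deg


def mean_degree(num_nodes, num_edges):
    """Average number of tie lines per compound: each edge touches two nodes."""
    return 2 * num_edges / num_nodes


def edge_density(num_nodes, num_edges):
    """Fraction of all possible compound pairs that are joined by a tie line."""
    return num_edges / (num_nodes * (num_nodes - 1) / 2)
```

With the rounded figures from the abstract, `mean_degree(21_000, 41_000_000)` is about 3905 and `edge_density(21_000, 41_000_000)` about 0.186.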

Citation: Hegde, Vinay I., Muratahan Aykol, Scott Kirklin, and Chris Wolverton. "The phase stability network of all inorganic materials." Science Advances 6, no. 9 (2020): eaay5606.