Featured Publications

All Publications

TRI Authors: Alex Alspach, Kunimatsu Hashimoto, Naveen Kuppuswamy and Russ Tedrake

All Authors: A. Alspach, K. Hashimoto, N. Kuppuswamy and R. Tedrake

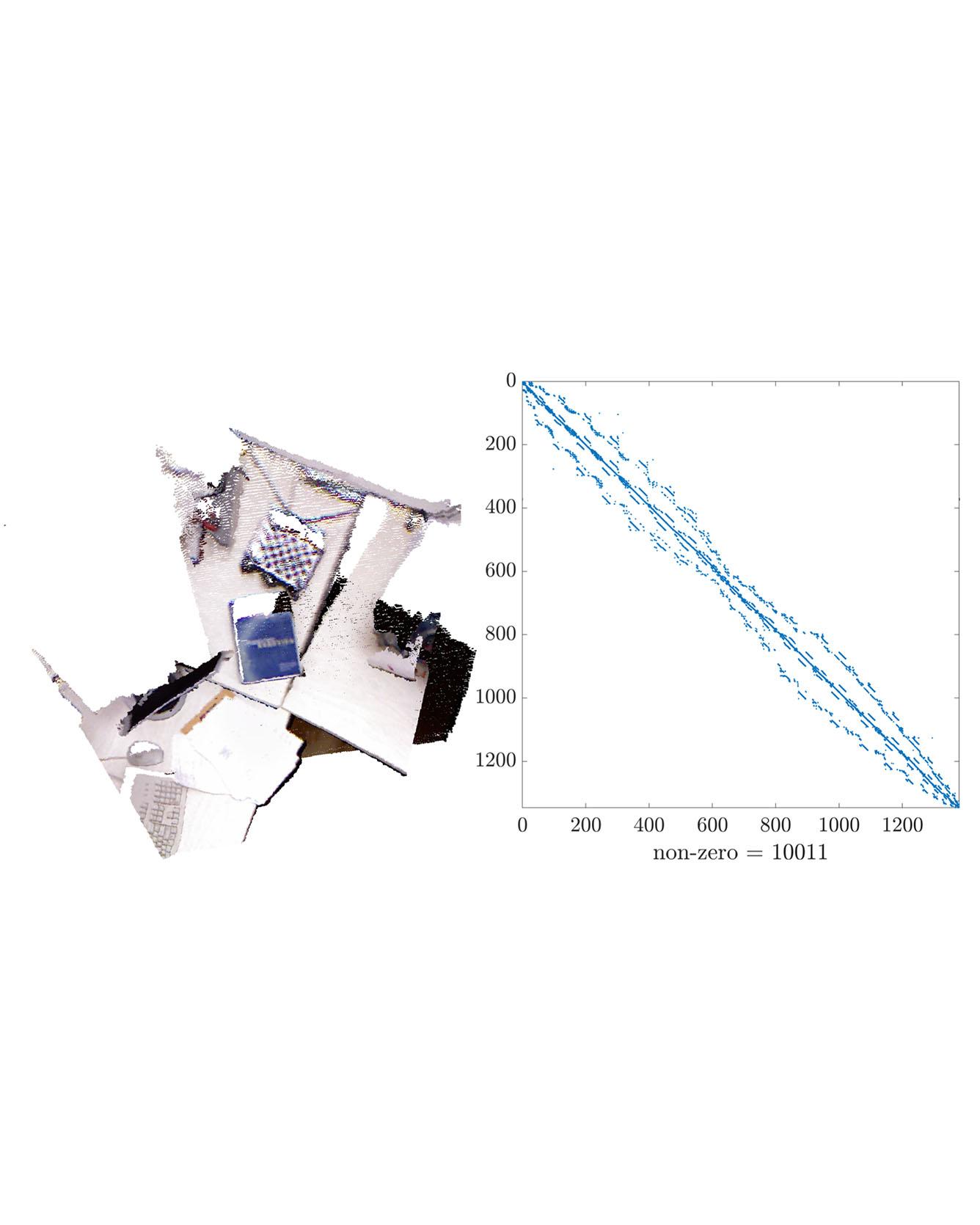

Incorporating effective tactile sensing and mechanical compliance is key towards enabling robust and safe operation of robots in unknown, uncertain and cluttered environments. Towards realizing this goal, we present a lightweight, easy-to-build, highly compliant dense geometry sensor and end effector that comprises an inflated latex membrane with a depth sensor behind it. We present the motivations and the hardware design for this Soft-bubble and demonstrate its capabilities through example tasks including tactile-object classification, pose estimation and tracking, and nonprehensile object manipulation. We also present initial experiments to show the importance of high-resolution geometry sensing for tactile tasks and discuss applications in robust manipulation.

Citation: Alspach, Alex, Kunimatsu Hashimoto, Naveen Kuppuswamy, and Russ Tedrake. "Soft-bubble: A highly compliant dense geometry tactile sensor for robot manipulation." In 2019 2nd IEEE International Conference on Soft Robotics (RoboSoft), pp. 597-604. IEEE, 2019.

TRI Authors: Astrid Jackson, Brandon D. Northcutt

All Authors: Astrid Jackson, Brandon D. Northcutt, Gita Sukthankar

One of the advantages of teaching robots by demonstration is that it can be more intuitive for users to demonstrate rather than describe the desired robot behavior. However, when the human demonstrates the task through an interface, the training data may inadvertently acquire artifacts unique to the interface, not the desired execution of the task. Being able to use one's own body usually leads to more natural demonstrations, but those examples can be more difficult to translate to robot control policies. This paper quantifies the benefits of using a virtual reality system that allows human demonstrators to use their own body to perform complex manipulation tasks. We show that our system generates superior demonstrations for a deep neural network without introducing a correspondence problem. The effectiveness of this approach is validated by comparing the learned policy to that of a policy learned from data collected via a conventional gaming system, where the user views the environment on a monitor screen, using a Sony PlayStation 3 (PS3) DualShock 3 wireless controller as input.

Citation: Jackson, Astrid, Brandon D. Northcutt, and Gita Sukthankar. "The benefits of immersive demonstrations for teaching robots." In 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), pp. 326-334. IEEE, 2019.

TRI Author: Jens Hummelshøj

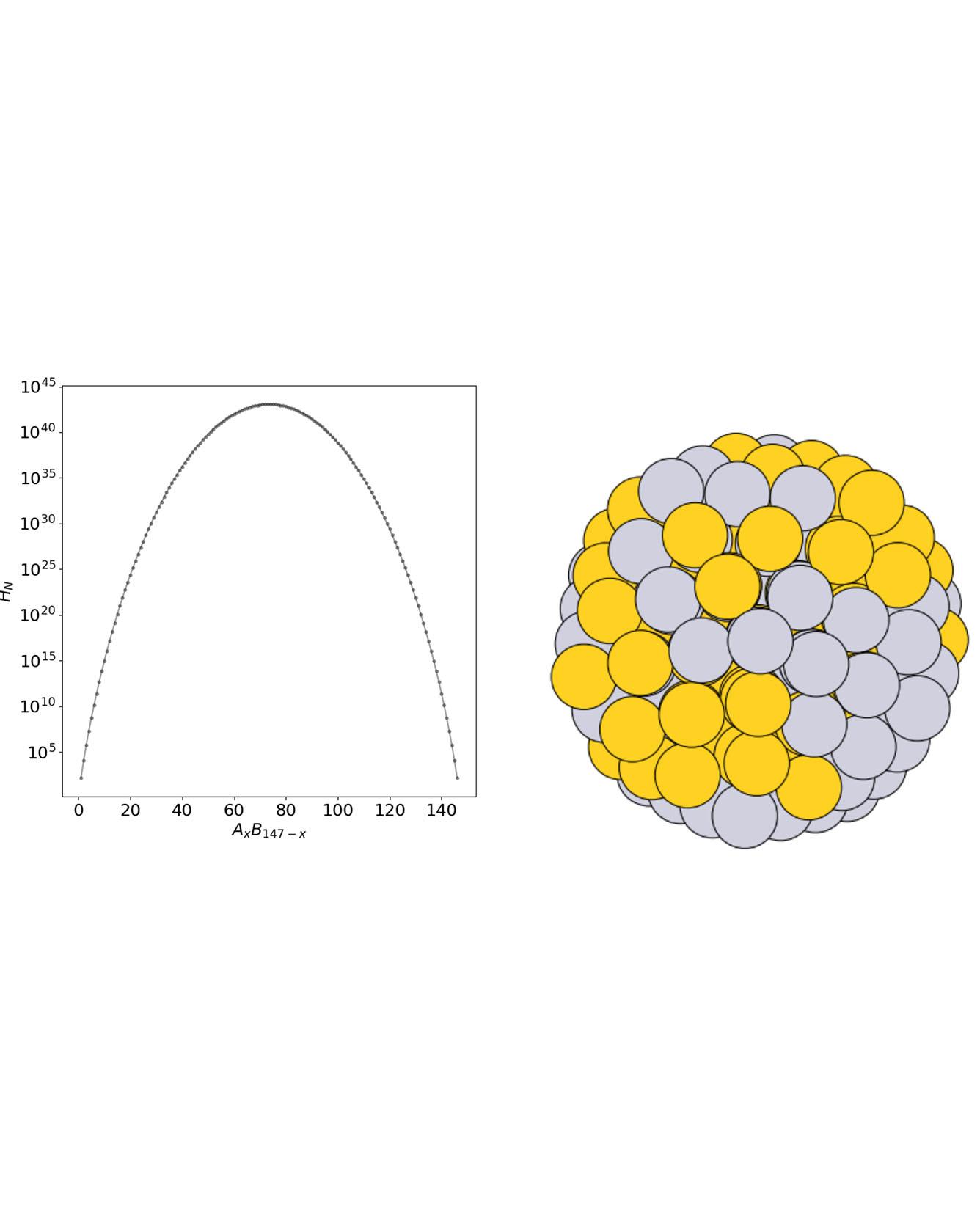

All Authors: Paul C Jennings, Steen Lysgaard, Jens Strabo Hummelshøj, Tejs Vegge, Thomas Bligaard

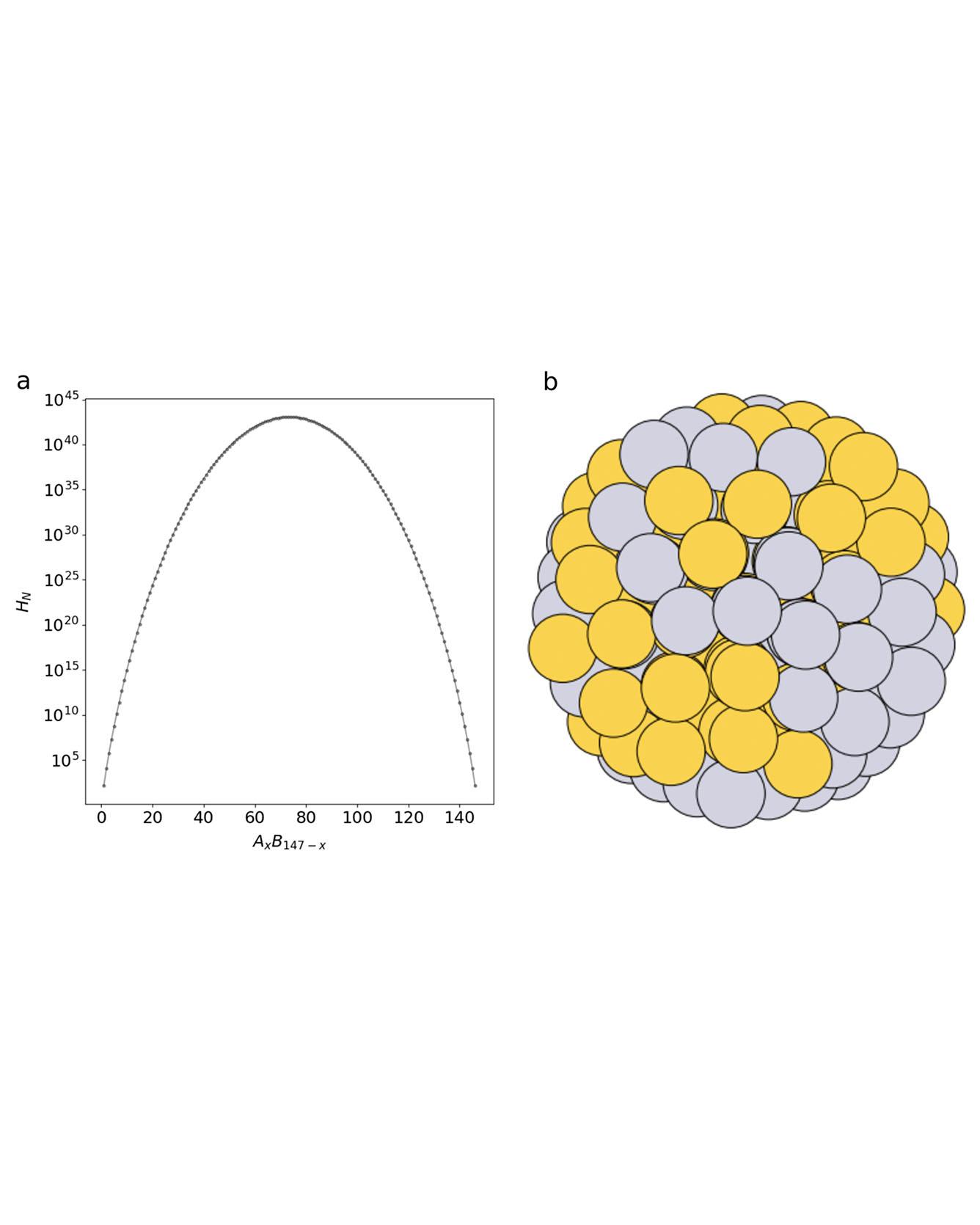

Materials discovery is increasingly being impelled by machine learning methods that rely on pre-existing datasets. Where datasets are lacking, unbiased data generation can be achieved with genetic algorithms. Here a machine learning model is trained on-the-fly as a computationally inexpensive energy predictor before analyzing how to augment convergence in genetic algorithm-based approaches by using the model as a surrogate. This leads to a machine learning accelerated genetic algorithm combining robust qualities of the genetic algorithm with rapid machine learning. The approach is used to search for stable, compositionally variant, geometrically similar nanoparticle alloys to illustrate its capability for accelerated materials discovery, e.g., nanoalloy catalysts. The machine learning accelerated approach, in this case, yields a 50-fold reduction in the number of required energy calculations compared to a traditional “brute force” genetic algorithm. This makes searching through the space of all homotops and compositions of a binary alloy particle in a given structure feasible, using density functional theory calculations.

Citation: Jennings, Paul C., Steen Lysgaard, Jens Strabo Hummelshøj, Tejs Vegge, and Thomas Bligaard. "Genetic algorithms for computational materials discovery accelerated by machine learning." npj Computational Materials 5, no. 1 (2019): 1-6.
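
The surrogate idea described in this abstract can be illustrated with a toy sketch. It is not the authors' implementation: the energy function, the nearest-neighbour surrogate, the crossover and mutation operators, and every parameter value below are assumptions chosen only to keep the example small and runnable. The point it shows is that many offspring are ranked by a cheap predictor, and only the few most promising receive the "expensive" evaluation.

```python
import random


def expensive_energy(x):
    """Toy stand-in (assumption) for a costly energy calculation; lower is better.

    Each unlike pair of neighbouring sites lowers the energy by 1.
    """
    return -float(sum(a != b for a, b in zip(x, x[1:])))


def surrogate_predict(x, data, k=3):
    """Cheap predictor (assumption): mean energy of the k evaluated
    candidates closest to x in Hamming distance."""
    nearest = sorted(
        data.items(),
        key=lambda item: sum(a != b for a, b in zip(x, item[0])),
    )[:k]
    return sum(energy for _, energy in nearest) / len(nearest)


def offspring(parent_a, parent_b, rng, rate=0.05):
    """One-point crossover followed by independent bit-flip mutation."""
    cut = rng.randrange(1, len(parent_a))
    child = parent_a[:cut] + parent_b[cut:]
    return tuple(bit ^ 1 if rng.random() < rate else bit for bit in child)


def surrogate_ga(n_sites=20, pop_size=10, generations=15,
                 n_candidates=50, n_evaluate=3, seed=0):
    """Return (best candidate, its energy, number of expensive evaluations)."""
    rng = random.Random(seed)
    data = {}  # candidate -> energy from the expensive function
    while len(data) < pop_size:
        x = tuple(rng.randint(0, 1) for _ in range(n_sites))
        data[x] = expensive_energy(x)

    for _ in range(generations):
        # Parents are the best candidates evaluated so far.
        population = sorted(data, key=data.get)[:pop_size]
        candidates = {
            offspring(*rng.sample(population, 2), rng)
            for _ in range(n_candidates)
        }
        unseen = sorted(c for c in candidates if c not in data)
        # Rank unseen offspring with the surrogate; evaluate only the top few.
        ranked = sorted(unseen, key=lambda c: surrogate_predict(c, data))
        for c in ranked[:n_evaluate]:
            data[c] = expensive_energy(c)

    best = min(data, key=data.get)
    return best, data[best], len(data)


if __name__ == "__main__":
    best, energy, calls = surrogate_ga()
    print(f"best energy {energy} after {calls} expensive evaluations")
```

In this sketch the expensive function is called at most `pop_size + generations * n_evaluate` times, while `generations * n_candidates` offspring are screened. The savings on a real problem depend on how well the surrogate ranks candidates, which this toy does not attempt to measure.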

TRI Author: Ryan M. Eustice

All Authors: Maani Ghaffari Jadidi, William Clark, Anthony Bloch, Ryan M. Eustice, and Jessy W. Grizzle

This paper reports on a novel formulation and evaluation of visual odometry from RGB-D images. Assuming a static scene, the developed theoretical framework generalizes the widely used direct energy formulation (photometric error minimization) technique for obtaining a rigid body transformation that aligns two overlapping RGB-D images to a continuous formulation. The continuity is achieved through functional treatment of the problem and representing the process models over RGB-D images in a reproducing kernel Hilbert space; consequently, the registration is not limited to the specific image resolution and the framework is fully analytical with a closed-form derivation of the gradient. We solve the problem by maximizing the inner product between two functions defined over RGB-D images, while the continuous action of the rigid body motion Lie group is captured through the integration of the flow in the corresponding Lie algebra. Energy-based approaches have been extremely successful and the developed framework in this paper shares many of their desired properties such as the parallel structure on both CPUs and GPUs, sparsity, semi-dense tracking, avoiding explicit data association which is computationally expensive, and possible extensions to the simultaneous localization and mapping frameworks. The evaluations on experimental data and comparison with the equivalent energy-based formulation of the problem confirm the effectiveness of the proposed technique, especially when the lack of structure and texture in the environment is evident.

Citation: Ghaffari, Maani, William Clark, Anthony Bloch, Ryan M. Eustice, and Jessy W. Grizzle. "Continuous direct sparse visual odometry from RGB-D images." In RSS 2019. arXiv preprint arXiv:1904.02266 (2019).

TRI Authors: Muratahan Aykol, Patrick K. Herring

All Authors: Kristen A. Severson, Peter M. Attia, Norman Jin, Nicholas Perkins, Benben Jiang, Zi Yang, Michael H. Chen, Muratahan Aykol, Patrick K. Herring, Dimitrios Fraggedakis, Martin Z. Bazant, Stephen J. Harris, William C. Chueh & Richard D. Braatz

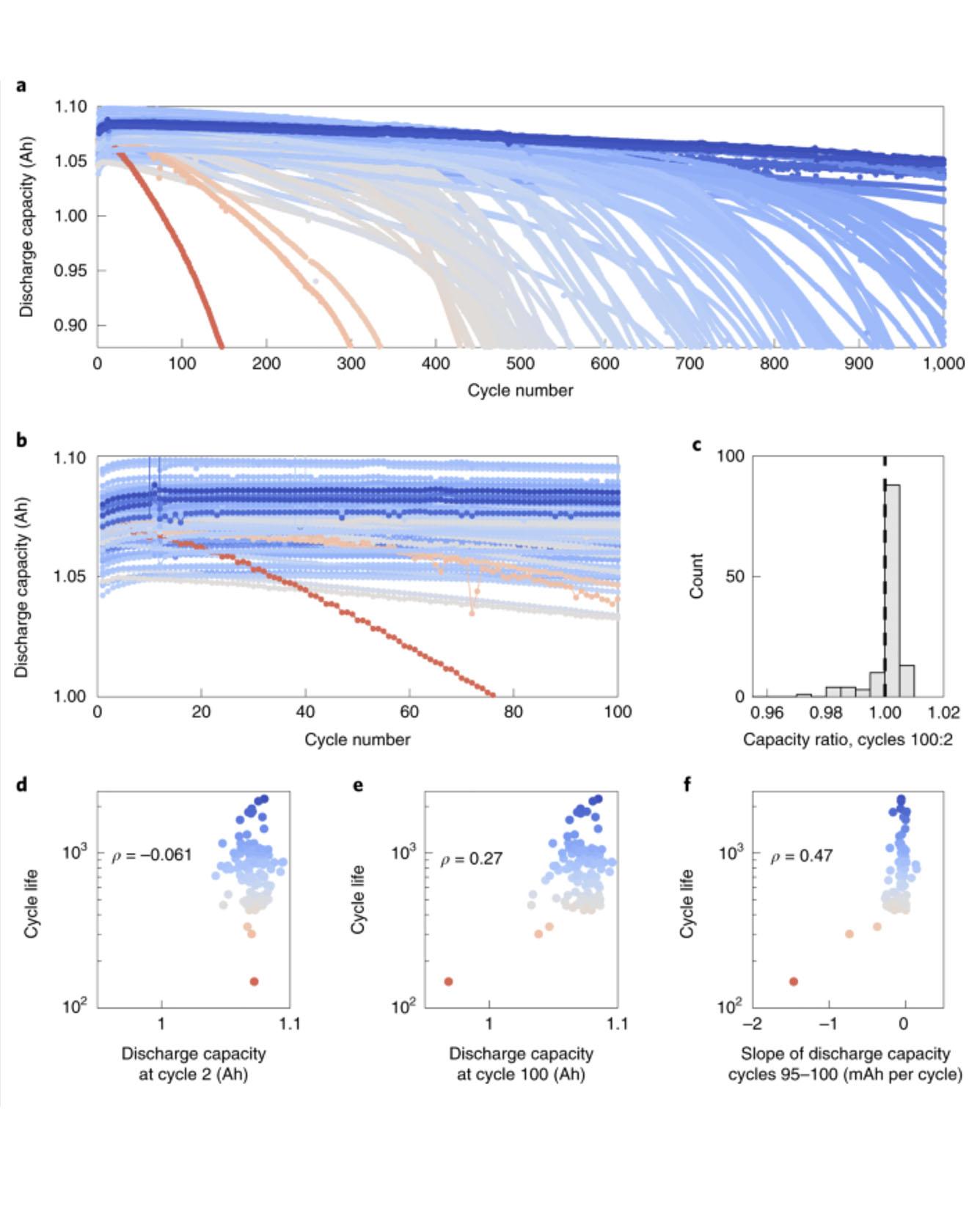

Accurately predicting the lifetime of complex, nonlinear systems such as lithium-ion batteries is critical for accelerating technology development. However, diverse aging mechanisms, significant device variability and dynamic operating conditions have remained major challenges. We generate a comprehensive dataset consisting of 124 commercial lithium iron phosphate/graphite cells cycled under fast-charging conditions, with widely varying cycle lives ranging from 150 to 2,300 cycles. Using discharge voltage curves from early cycles yet to exhibit capacity degradation, we apply machine-learning tools to both predict and classify cells by cycle life. Our best models achieve 9.1% test error for quantitatively predicting cycle life using the first 100 cycles (exhibiting a median increase of 0.2% from initial capacity) and 4.9% test error using the first 5 cycles for classifying cycle life into two groups. This work highlights the promise of combining deliberate data generation with data-driven modelling to predict the behaviour of complex dynamical systems.

Citation: Severson, Kristen A., Peter M. Attia, Norman Jin, Nicholas Perkins, Benben Jiang, Zi Yang, Michael H. Chen et al. "Data-driven prediction of battery cycle life before capacity degradation." Nature Energy 4, no. 5 (2019): 383-391.

TRI Author: Kuan-Hui Lee

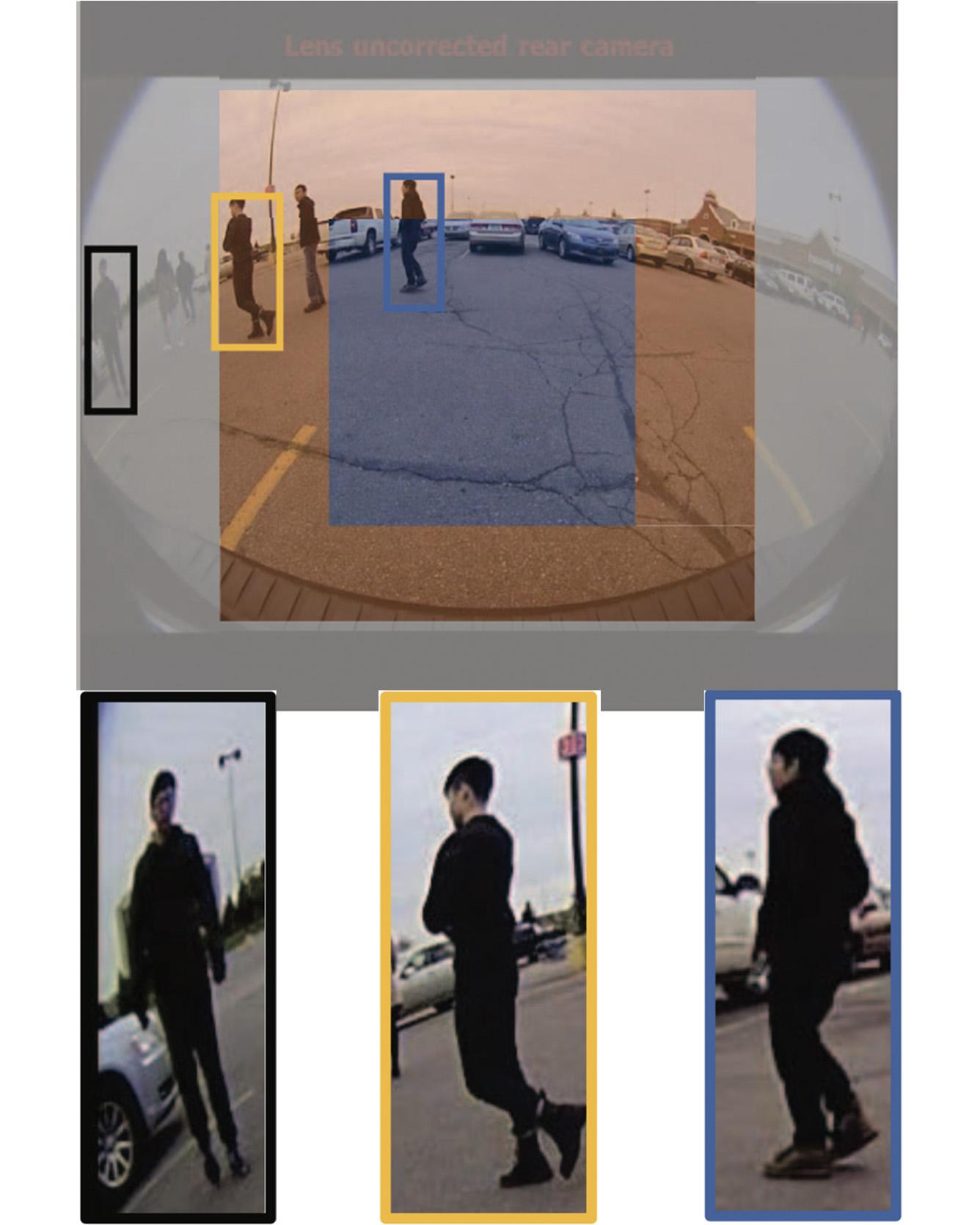

All Authors: Zheng Tang, Yen-Shuo Lin, Kuan-Hui Lee, Jenq-Neng Hwang, Jen-Hui Chuang

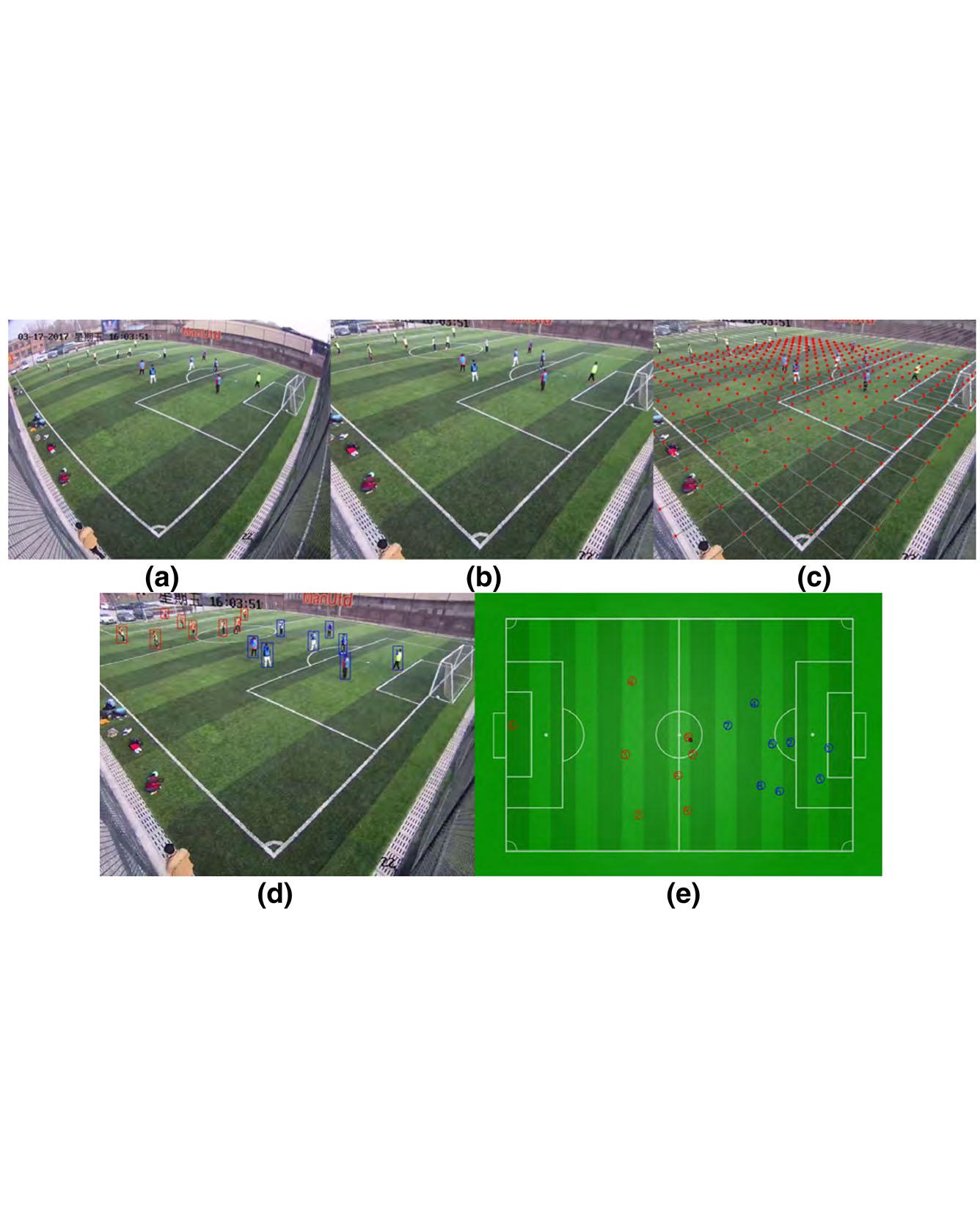

Camera calibration and radial distortion correction are crucial prerequisites for many applications in image big data and computer vision. Many existing works rely on the Manhattan world assumption to estimate the camera parameters automatically; however, they may perform poorly when the scene lacks man-made structure. As walking humans are common objects in video surveillance, they have also been used for camera self-calibration, but the main challenges include the noise reduction for the estimation of vanishing points, the relaxation of assumptions on unknown camera parameters, and the radial distortion correction. In this paper, we present a novel framework for camera self-calibration and automatic radial distortion correction. Our approach starts with reliable human body segmentation that is facilitated by robust object tracking. Mean shift clustering and Laplace linear regression are, respectively, introduced in the estimation of the vertical vanishing point and the horizon line. The estimation of distribution algorithm, an evolutionary optimization scheme, is then utilized to optimize the camera parameters and the distortion coefficients, in which all the unknowns in camera projection can be fine-tuned simultaneously. Experiments on three public benchmarks and our own captured dataset demonstrate the robustness of the proposed method. The superiority of this algorithm is also verified by the capability of reliably converting 2D object tracking into 3D space.

Citation: Tang, Zheng, Yen-Shuo Lin, Kuan-Hui Lee, Jenq-Neng Hwang, and Jen-Hui Chuang. "ESTHER: Joint camera self-calibration and automatic radial distortion correction from tracking of walking humans." IEEE Access 7 (2019): 10754-10766.

TRI Author: Simon Stent

All Authors: Xishuai Peng, YiLu Murphey, Simon Stent, Yuanxiang Li, Zihao Zhao

Objects in the periphery of fisheye images can become extremely distorted. This distortion can cause false positives and missed detections for automated object detection systems. This is problematic, not only for systems which have been trained on perspective images, but also for those that have been explicitly trained on fisheye data. In this paper we propose a new cost function for training object detectors on fisheye images. We model fisheye image distortion as an imbalanced domain problem and develop a domain association loss function to approach it with deep learning. We define separate domains based on the level of distortion within the image plane and propose a new objective function, inspired by the recently introduced focal loss for object detection, which we call a spatial focal loss. Our proposed loss incorporates a domain-modulating term which re-weights samples from different domains to encourage the learning of domain-invariant features. We implement the spatial focal loss function in the YOLOv2 architecture and evaluate it on the task of pedestrian detection in a fisheye dataset captured by a 360 camera system mounted on a moving vehicle and labeled with over 11,000 pedestrian instances. Our experiments demonstrate that spatial focal loss can improve model performance in the highly distorted image periphery versus existing loss functions, including focal loss, without sacrificing performance in the less distorted image center, with no adaptations to network architectures required. By analyzing the locations of missed detections, we show further evidence that our loss function can improve the learning of domain-invariant features.

Citation: Peng, Xishuai, Yi Murphey, Simon Stent, Yuanxiang Li, and Zihao Zhao. "Spatial focal loss for pedestrian detection in fisheye imagery." In 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 561-569. IEEE, 2019.
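
For readers unfamiliar with the baseline this abstract builds on, here is a minimal sketch of the standard binary focal loss, FL(p_t) = -α_t (1 - p_t)^γ log(p_t). It covers only that baseline. The paper's domain-modulating term is not specified in the abstract and is deliberately not reproduced. The function name, the scalar interface, and the default values γ = 2 and α = 0.25 are illustrative assumptions.

```python
import math


def focal_loss(p: float, y: int, gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Standard binary focal loss for a single prediction.

    p is the predicted probability of the positive class and y is the
    ground-truth label (0 or 1). This is the baseline loss only, not the
    spatial focal loss proposed in the paper.
    """
    eps = 1e-12  # guards against log(0)
    p_t = p if y == 1 else 1.0 - p            # probability assigned to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    p_t = min(max(p_t, eps), 1.0)
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


if __name__ == "__main__":
    print("easy positive:", focal_loss(0.9, 1))
    print("hard positive:", focal_loss(0.1, 1))
```

With γ = 2, an example classified correctly at p_t = 0.9 has its cross-entropy term scaled by (1 - 0.9)² = 0.01, so training is dominated by hard examples. Setting γ = 0 recovers α-weighted cross-entropy.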

TRI Author: Jens Hummelshøj

All Authors: Steen Lysgaard, Paul C Jennings, Jens Strabo Hummelshøj, Thomas Bligaard, Tejs Vegge

A machine learning model is used as a surrogate fitness evaluator in a genetic algorithm (GA) optimization of the atomic distribution of Pt-Au nanoparticles. The machine learning accelerated genetic algorithm (MLaGA) yields a 50-fold reduction of required energy calculations compared to a traditional GA.

Citation: Lysgaard, Steen, Paul C. Jennings, Jens Strabo Hummelshøj, Thomas Bligaard, and Tejs Vegge. "Machine Learning Accelerated Genetic Algorithms for Computational Materials Search." ChemRxiv (2018).

TRI Authors: Jonathan DeCastro, Russ Tedrake

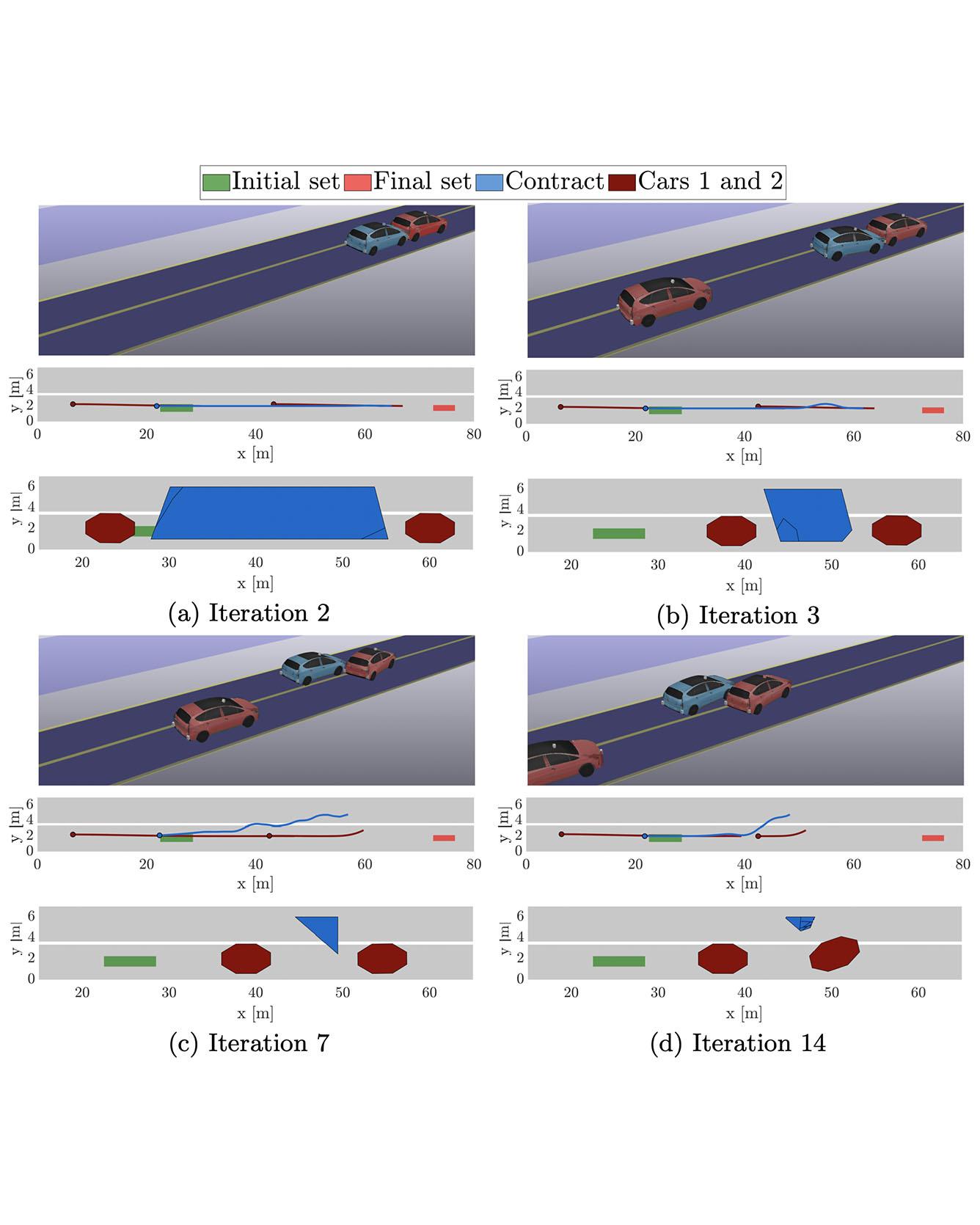

All Authors: J. DeCastro, L. Liebenwein, C.-I. Vasile, R. Tedrake, S. Karaman and D. Rus

Ensuring the safety of autonomous vehicles is paramount for their successful deployment. However, formally verifying autonomous driving decision systems is difficult. In this paper, we propose a framework for constructing a set of safety contracts that serve as design requirements for controller synthesis for a given scenario. The contracts guarantee that the controlled system will remain safe with respect to probabilistic models of traffic behavior, and, furthermore, that it will follow rules of the road. We create contracts using an iterative approach that alternates between falsification and reachable set computation. Counterexamples to collision-free behavior are found by solving a gradient-based trajectory optimization problem. We treat these counterexamples as obstacles in a reach-avoid problem that quantifies the set of behaviors an ego vehicle can make while avoiding the counterexample. Contracts are then derived directly from the reachable set. We demonstrate that the resulting design requirements are able to separate safe from unsafe behaviors in an interacting multi-car traffic scenario, and further illustrate their utility in analyzing the safety impact of relaxing traffic rules.

Citation: DeCastro, Jonathan, Lucas Liebenwein, Cristian-Ioan Vasile, Russ Tedrake, Sertac Karaman, and Daniela Rus. "Counterexample-guided safety contracts for autonomous driving." In International Workshop on the Algorithmic Foundations of Robotics, 2018.

TRI Authors: Jie Li, Allan Raventos, Arjun Bhargava, Takaaki Tagawa, Adrien Gaidon

All Authors: Jie Li, Allan Raventos, Arjun Bhargava, Takaaki Tagawa, Adrien Gaidon

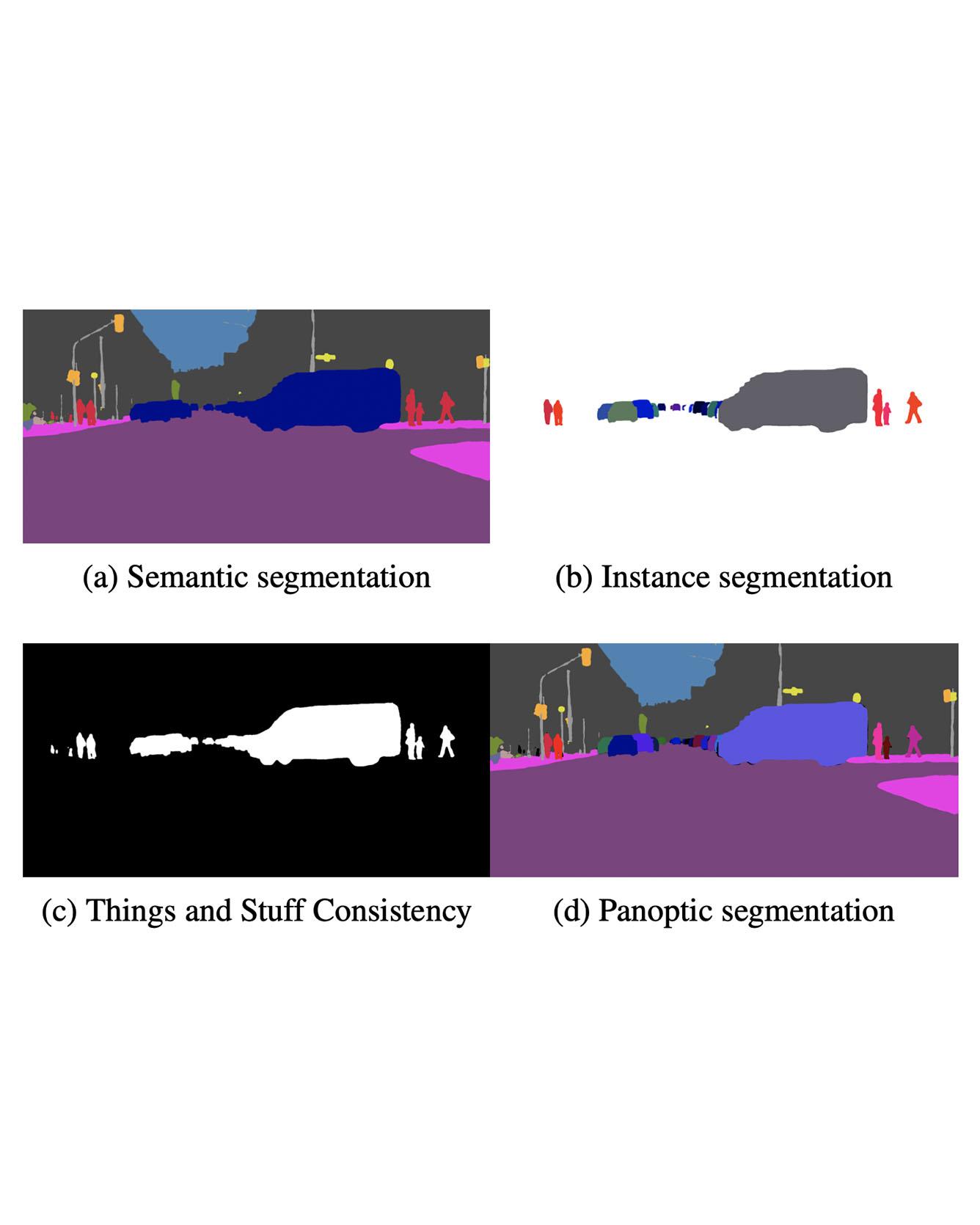

We propose an end-to-end learning approach for panoptic segmentation, a novel task unifying instance (things) and semantic (stuff) segmentation. Our model, TASCNet, uses feature maps from a shared backbone network to predict in a single feed-forward pass both things and stuff segmentations. We explicitly constrain these two output distributions through a global things and stuff binary mask to enforce cross-task consistency. Our proposed unified network is competitive with the state of the art on several benchmarks for panoptic segmentation as well as on the individual semantic and instance segmentation tasks.

Citation: Li, Jie, Allan Raventos, Arjun Bhargava, Takaaki Tagawa, and Adrien Gaidon. "Learning to fuse things and stuff." arXiv preprint arXiv:1812.01192 (2018).