TRI Authors: Max Bajracharya, James Borders, Dan Helmick, Thomas Kollar, Michael Laskey, John Leichty, Jeremy Ma, Umashankar Nagarajan, Akiyoshi Ochiai, Josh Peterson, Krishna Shankar, Kevin Stone, Yutaka Takaoka

All Authors: Max Bajracharya, James Borders, Dan Helmick, Thomas Kollar, Michael Laskey, John Leichty, Jeremy Ma, Umashankar Nagarajan, Akiyoshi Ochiai, Josh Peterson, Krishna Shankar, Kevin Stone, Yutaka Takaoka

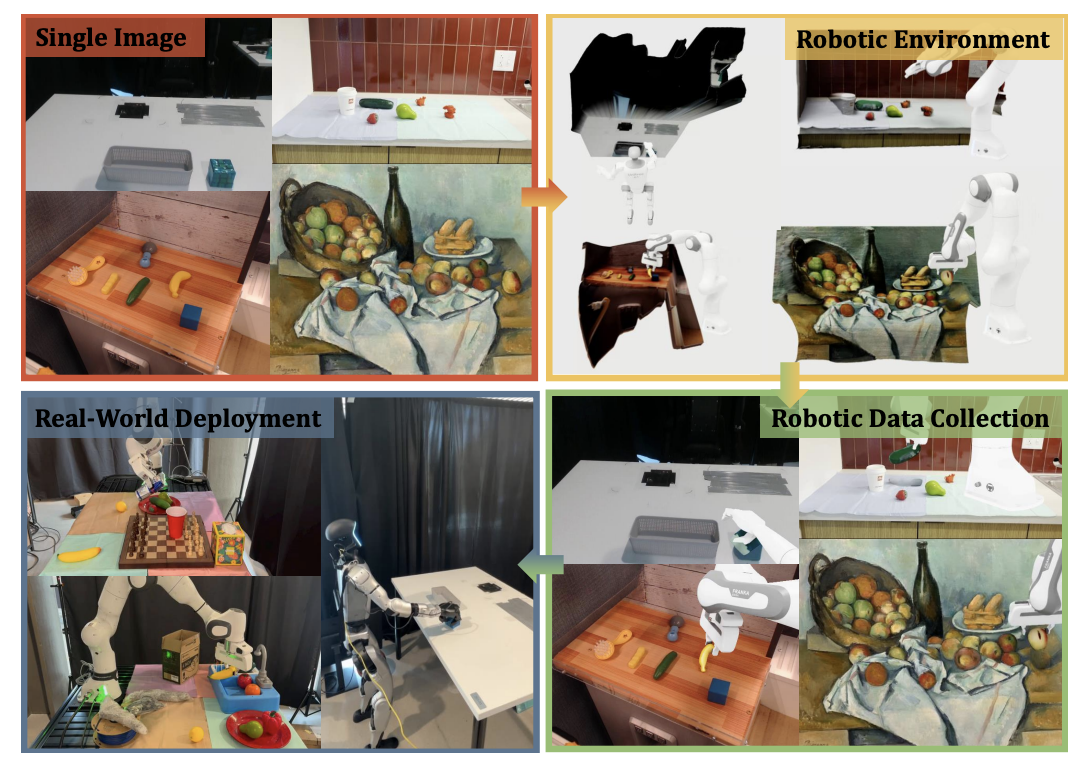

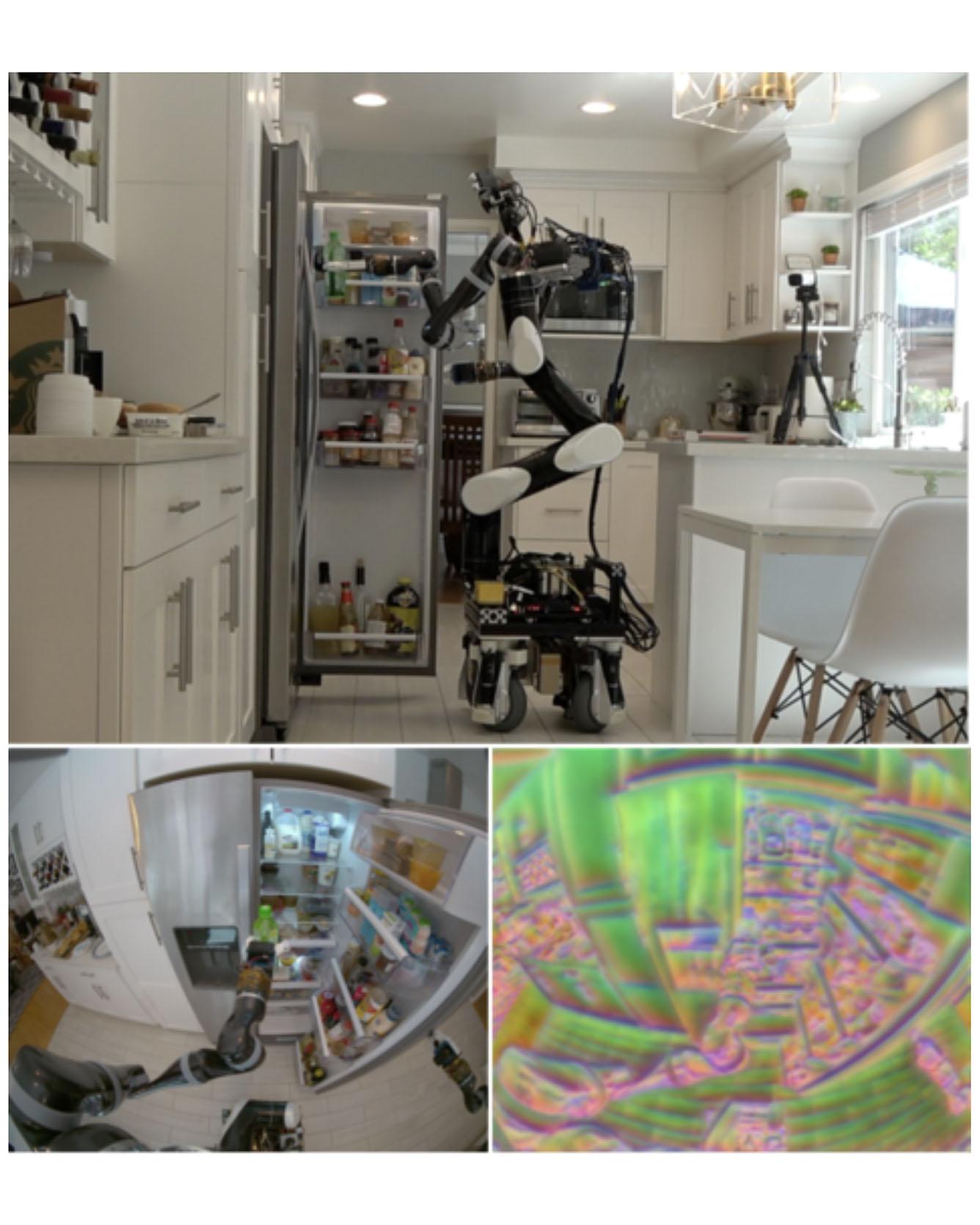

We describe a mobile manipulation hardware and software system capable of autonomously performing complex human-level tasks in real homes, after being taught the task with a single demonstration from a person in virtual reality. This is enabled by a highly capable mobile manipulation robot, whole-body task space hybrid position/force control, teaching of parameterized primitives linked to a robust learned dense visual embeddings representation of the scene, and a task graph of the taught behaviors. We demonstrate the robustness of the approach by presenting results for performing a variety of tasks, under different environmental conditions, in multiple real homes. Our approach achieves an 85% overall success rate on three tasks that consist of an average of 45 behaviors each.

Citation: Bajracharya, Max, James Borders, Dan Helmick, Thomas Kollar, Michael Laskey, John Leichty, Jeremy Ma et al. "A Mobile Manipulation System for One-Shot Teaching of Complex Tasks in Homes." arXiv preprint arXiv:1910.00127 (2019).